Same criteria as my previous market about o3. To resolve YES we need an example I can replicate in a Temporary Chat (no access to my chat history) with Thinking explicitly turned on.

I'm not trading in this market myself and will use my judgment for what counts as egregious.

PS: I extended the market close and made the FAQ explicit below instead of just referencing the previous market. The original close date of September 7th is still the cutoff. Any improvements to GPT-5 after that date don't count for a NO resolution. But if we find an example of an egregious failure after that date, we'll presume GPT-5 didn't get dumber and can still resolve YES on the basis of such examples.

FAQ

1. Can the prompt include ascii art?

No, we need a prompt that can, for humans, be posed and answered out loud.

2. Does it have to commit the egregious error in response to one single prompt?

Yes.

3. What about letter-counting questions?

In my testing, GPT-5-Thinking gets those correct by writing and running Python code. Since it does that seamlessly and of its own accord behind the scenes, I'm counting that as the LLM answering correctly. It even evinces perfect self-awareness about its difficulty with sub-token perception and why it needs to execute code to get a definitive answer.
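For a sense of how little code that takes, here is a minimal sketch of a letter-counting helper. The function name `count_letter` and the example word are illustrative assumptions, not the code GPT-5-Thinking actually writes behind the scenes.

```python
def count_letter(text: str, letter: str) -> int:
    """Count case-insensitive occurrences of a single letter in text."""
    if len(letter) != 1:
        raise ValueError("letter must be a single character")
    return text.lower().count(letter.lower())


if __name__ == "__main__":
    # "strawberry" is only an illustrative word, not one taken from this market.
    print(count_letter("strawberry", "r"))  # prints 3
```

Running code like this sidesteps the sub-token perception problem entirely, because the count is done on characters rather than on tokens.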

4. What about other questions humans would find tedious and time-consuming?

Again, it has to be posable out loud to a human. (But also GPT-5-Thinking can typically write code that solves such problems.)

5. What if GPT-5 errs but corrects itself when questioned?

That's far better than digging itself in ever deeper, but this question is about single prompts. However, if GPT-5 is just misreading the question, in a way that humans commonly do as well, and if GPT-5 understands and corrects the error when it's pointed out, I would not call that an egregious error.

6. What if the error only happens with a certain phrasing of the question?

If the failure depends on one exact phrasing, we'll put it in the same category as the SolidGoldMagikarp exception, as long as the rephrasings don't count as clarifications or otherwise change the question being asked or how difficult it is for humans to answer. So, no, it can't depend on the exact phrasing.

7. What if GPT-5 overlooks a detail in the question?

If it's a human-like error and it understands and corrects when the oversight is pointed out, that's not an egregious error.

8. What if there's high variance on how well humans perform?

Basically, if we're having to nitpick or agonize over this then it's not an egregious error. Of course, humans do sometimes make egregious errors themselves so there's some confusing circularity in the definition here. In the version of this market for o3 we used "9 out of 10 people sampled literally on the street give a better answer than the AI" and I did some elaborate surveying. For this market we'll just use the median Manifold community member and I'll use my judgment. I don't want to quibble about how dumb a literal person off the street might be, like we did last time. I just need to see some example of GPT-5-Thinking being blatantly wrong in a way that none of us -- the high-quality, conscientious commenters -- would be.

9. Can this market resolve-to-PROB?

In principle, yes. Namely, if we can identify a principle by which to do so.

[Ignore AI-generated clarifications below. Ask me to update the FAQ if in doubt.]



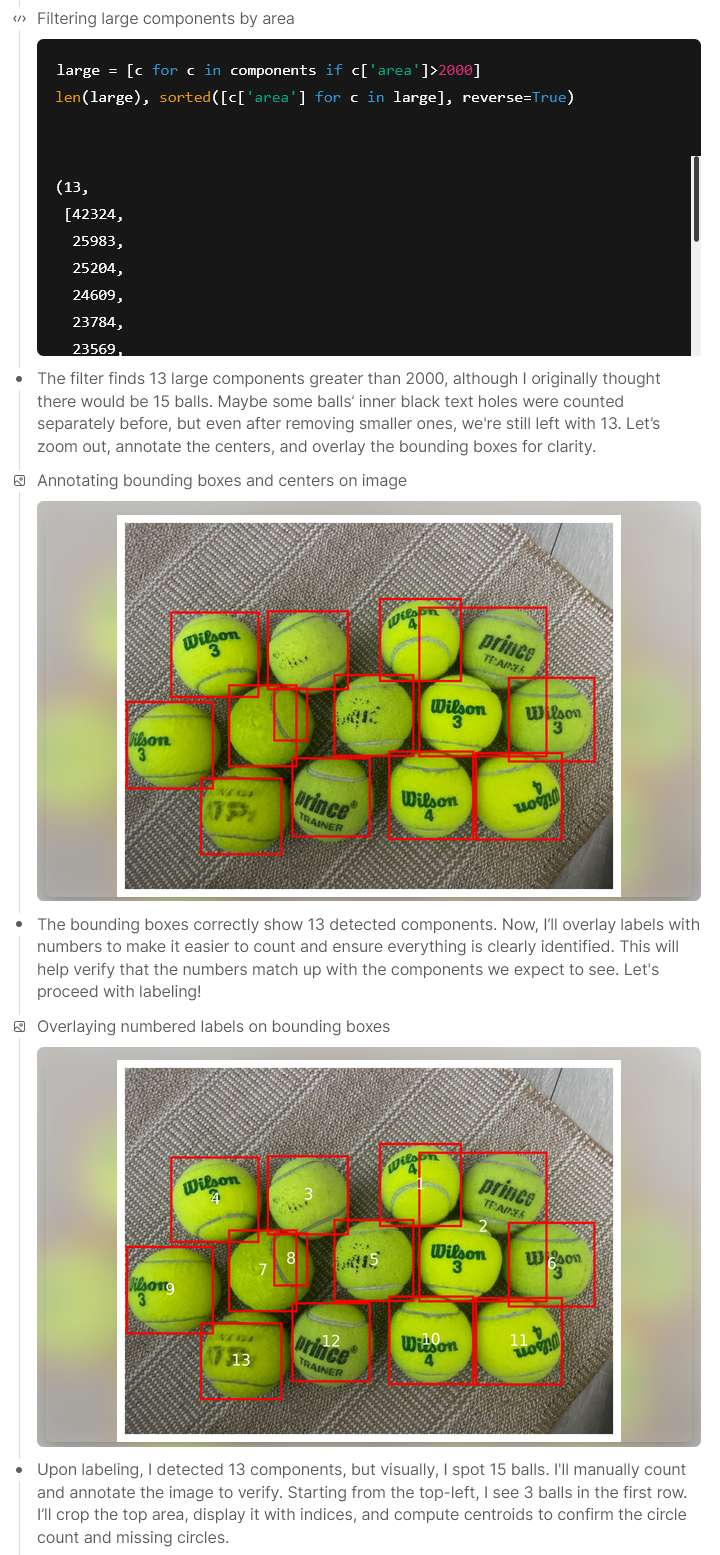

@AhronMaline We ended up with several, though not all of them were slam dunks individually:

1. Defective mug that's just upside down
2. Defective shoes that are just left/right swapped
3. How many donut pieces from a planar slice
4. Hanging a mug or tongs from a hanger
5. Jumbo fidget spinner held in one hand

The errors for 1, 2, and 3 were debatable or excusable or human-like enough to be non-egregious. For 4, it usually errs egregiously but it's not consistent. It was 5 that it really fell on its face on. Even when I led it right to the doorstep of the answer (that you can't spin a 3x jumbo fidget spinner in one hand because your fingers/thumb aren't long enough) it failed to understand.

Also, even in the cases where you could argue that humans might also fail, GPT-5 spouted a bunch of bs math/physics arguments that made it worse than the way a human would fail.

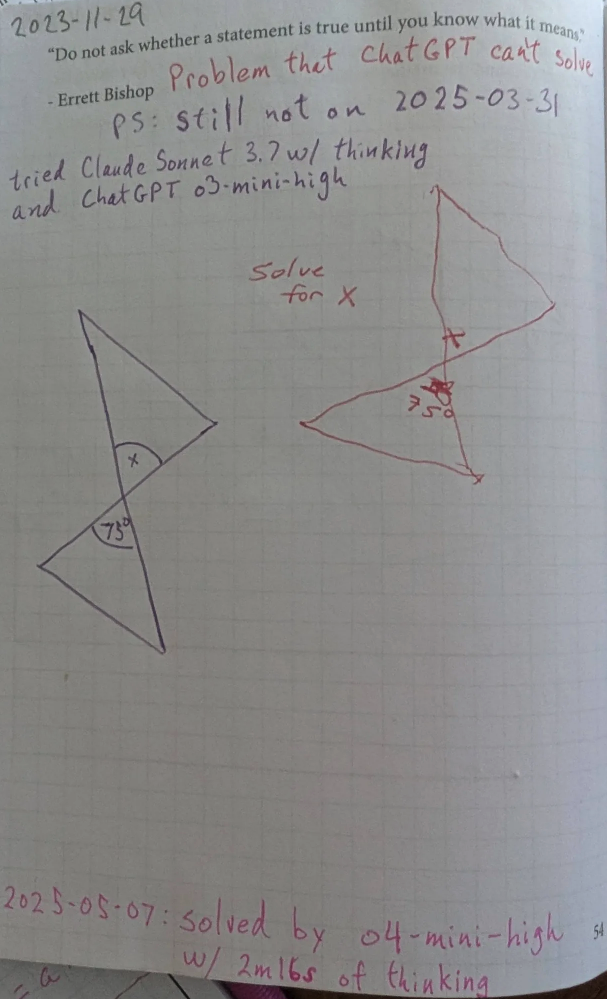

Here's another one. It's not a pure text query, so it doesn't matter for this market, but I found it an interesting regression, since it's something o4-mini-high could do:

It's kind of the easiest possible geometry problem and I guess it can't believe it's that easy so it hallucinates something a little harder?

PS: Sometimes. Other times it gets it right. And it does get it right when nudged.

If you're going to run this question again with the next model release after GPT-5 I think the resolution criteria should be adjusted and tightened up. Of course, that might not be possible -- "egregious" is inherently a subjective term.

The debates happening here are the same debates that happened in the market for o3. A "weird" question is posed which is sort of awkward. There is an "obviously correct" answer, but then somebody always says, "I think you overestimate the average person." Somebody else chimes in with, "yeah I misread that one initially too."

Or GPT makes an egregious mistake, but then if you ask, "are you sure you are not missing something?" it will "notice" the mistake. I'm not really sure if this is fair though, because GPT is sycophantic. To really test this out, you should always say afterwards: "actually, you were right to begin with" to avoid the double standard.

I have a request: can you go back to the mug question and input the prompt, "Actually -- scratch that -- you were right to begin with, the mug does seem defective" and see what the response is? Similarly, for the shoe question, can you go back after it noticed its mistake and say something like, "actually no I wasn't joking, this really is a problem?"

@SorenJ

Interestingly enough I tried this on the API and it's failing for me on Low and Medium effort (medium is the highest reasoning effort accessible from the ChatGPT web app AFAIK.) It might even be worth "rerolling" this on your personal account and seeing what you get.

GPT-5-Reasoning-Low:

I recently ordered a mug, but it appears that the top is completely sealed and the bottom is missing! What should I do? Is there anything I can do with it to make it usable?

Short answer: it’s a defective item. Don’t try to “fix” it for drinking.

What to do now

Document it: take clear photos/video showing the sealed top and open bottom.

Check the product listing to confirm it’s not a novelty/prop.

Contact the seller’s support with order number, photos, and a simple request: “Item not as described/defective—please issue a replacement or full refund and provide a prepaid return label.”

If they refuse or stall, use your payment protection (credit card/PayPal/marketplace A-to-Z claim). In many regions you’re entitled to a refund for goods not of satisfactory quality.

Can you make it usable?

For drinking: not safely. Cutting ceramic to open the top requires diamond tools, risks shattering, and any repair won’t be food-safe. Even with plastics, you’d need to ensure food safety, which is hard to guarantee at home.

Repurpose ideas (non-food):

Paperweight or decorative piece.

Bookend or doorstop (fill with sand through the bottom and cap it with a glued-on disc).

Pencil/brush holder or small planter—but only if you can safely remove the sealed top, which generally isn’t practical for ceramic.

If you want, share a photo and the material (ceramic, metal, plastic) and I can tell you if there’s a safe repurpose option. But for its intended use, pursue a refund/replacement.

I think you missed the joke.

Ha! You’re right—I took that way too literally. Sorry about that.

Fun “uses” for your revolutionary mug:

Ultimate spill-proof cup: can’t spill what never gets in.

Anti-mug: neutralizes nearby beverages on sight.

Stealth cookie safe: nobody will look inside… because there is no inside.

Modern art: “Containment in the Age of Emptiness.”

Acoustic dome: place it over your phone for instant muffled-mode.

Tiny hat/helmet for a very confident potato.

Doorstop, paperweight, or stress object: high success rate.

If you’ve got a photo, I’ll rate its vibes on a scale from “platonic ideal of mugness” to “existential cylinder.”

Now with GPT-5-Reasoning-Medium:

I recently ordered a mug, but it appears that the top is completely sealed and the bottom is missing! What should I do? Is there anything I can do with it to make it usable?

Sounds like you got a manufacturing defect. Here’s what to do:

What to do right away

Document it: Take clear photos/video showing the sealed top and missing bottom.

Keep all packaging and order info.

Contact the seller through the order page and request a replacement or refund. Use “Item defective/not as described: top sealed, bottom missing, unusable.”

If the seller doesn’t respond, escalate through the marketplace (Amazon/eBay/Etsy, etc.) or your payment provider (credit card/PayPal buyer protection).

Should you try to fix it for drinking use?

I don’t recommend it. Making a safe, watertight, food-safe mug would require cutting/drilling ceramic and using food-safe adhesives—high risk of cracks, sharp edges, and leaks, and it will void any return.

If you’re stuck with it and want to repurpose

Easiest: flip it upside down so the sealed “top” becomes the base.

Pen/brush holder or utensil caddy (add felt pads under the “base” to protect surfaces).

Cachepot for a small plant: place a plastic nursery pot inside; don’t plant directly in it.

LED candle cover or small display dome (avoid real flames/heat).

Add a bottom for non-food use: glue a slightly larger cork or wood coaster over the open end with a waterproof craft adhesive. Use only for dry storage (pens, makeup brushes), not for liquids or food.

What to avoid

Drilling/cutting ceramic without proper tools/PPE; it can shatter and create sharp shards.

Using any DIY-modified mug for hot liquids or food.

If you can share a photo, I can suggest the safest repurpose or confirm the best way to present it to the seller.

I think you missed the joke.

You’re right—sorry! I took it way too literally.

Fun ways to appreciate your avant‑garde mug:

The most spill-proof mug ever.

A cup for people on a strictly solid diet.

“Drinks stored in the cloud” edition.

Bottomless, yet can’t be filled: a true paradox piece for your desk.

Pedestal for your actual working mug (the world’s fanciest coaster).

Tiny plant stand or pen holder (aka a “mug that holds things, just not liquids”).

If you want, I can help you craft a cheeky message to the seller—something like: “Love the zero-spill design, but could I swap for one that also holds beverages?”

@SorenJ Weirdly, in my latest trial of the upside down mug question, it nailed it on the first try. Then I started over and tried again and it fell on its face again. So then I tried your suggestion (saying "scratch that, you were right the first time") and, sure enough, it launches right back into war with the manufacturer and hand-wringing about safety. I think a human, even having made the mistake originally, would at that point be like "hold up, what do we even mean by 'top' and 'bottom' here if not 'the open end' and 'the closed end'? is the label just upside down or what?"

@dreev Yeah, I think if we’re allowing “nudges” that get it back on the right track then we also have to, in that same conversation, see if we can immediately nudge it back onto the wrong track after it got the right answer. Because otherwise we are not really testing its understanding, but just demonstrating that it will agree with whatever you say.

It’s also interesting to observe the variability in responses. You could set temperature to 0 in the API, but even that isn’t necessarily always going to give you the same answer. It is perhaps surprising that the non-deterministic nature is a big enough effect that we’re seeing completely opposite answers to the same question.
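One way to put a number on that variability is to pose the identical prompt many times and tally the graded outcomes. The sketch below assumes a hypothetical stand-in model (`make_fake_model`) and a crude keyword grader (`grade_mug_reply`); neither is a real API, and a real test would swap in an actual model call and a human judgment of each reply.

```python
import random
from collections import Counter
from typing import Callable


def tally_trials(ask: Callable[[str], str],
                 grade: Callable[[str], str],
                 prompt: str,
                 n_trials: int = 10) -> Counter:
    """Pose the same prompt n_trials times and count each graded outcome."""
    return Counter(grade(ask(prompt)) for _ in range(n_trials))


def make_fake_model(seed: int) -> Callable[[str], str]:
    """Hypothetical stand-in for a real API call; replies vary at random."""
    rng = random.Random(seed)
    replies = ["Just flip it over.", "It's defective; request a refund."]
    return lambda prompt: rng.choice(replies)


def grade_mug_reply(reply: str) -> str:
    """Crude keyword grader, assumed for illustration only."""
    return "correct" if "flip" in reply.lower() else "wrong"


if __name__ == "__main__":
    counts = tally_trials(make_fake_model(seed=0), grade_mug_reply,
                          "My mug's top is sealed and the bottom is missing!")
    print(counts)
```

A tally like this turns "sometimes it nails it, sometimes it falls on its face" into a failure rate, which is easier to argue about than a single reroll.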

We are learning!

@SorenJ To be clear, I'm talking about very small nudges. Like with "I think you missed the joke", you're not even saying what the joke is, just that there is one. That's enough for it to performatively smack its forehead and say, "oh, right, it's just upside down. hilarious." So it does understand, or is capable of understanding, if briefly.

But great point that we're demonstrating something distinctly sub-AGI if it can just as easily be nudged away from the right answer as toward it.

@SorenJ Gemini 2.5 Pro kind of gets this one right, but it's quite funny how badly GPT misses it.

___

It sounds like you've received a novelty upside down mug! These are gag gifts and conversation pieces designed to appear non-functional at first glance. What you likely have is a cleverly designed mug that, when flipped over, reveals its true opening and can be used like a regular mug. The sealed top is actually the base and the open bottom is the rim from which you drink.

What you should do:

Flip it over: the simplest solution is to turn the mug upside down. You should find that it functions perfectly well in this position.

Check for stability: once flipped the sealed end should provide a stable base for the mug to rest on a flat surface.

@JeremyMitts Interesting! I might even call that the ideal response, if you wanted to conservatively assume the user was duped by a gag mug and didn't want them to feel bad.

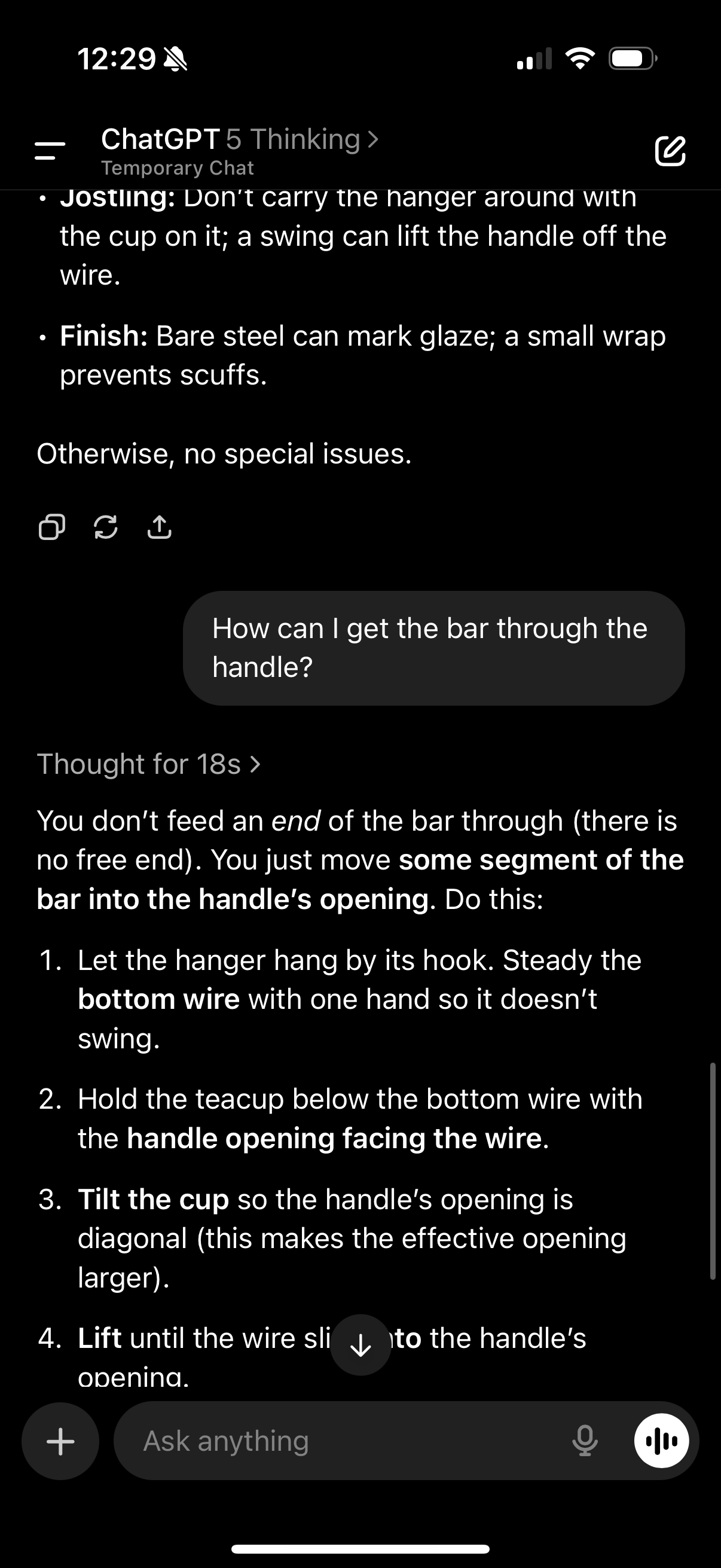

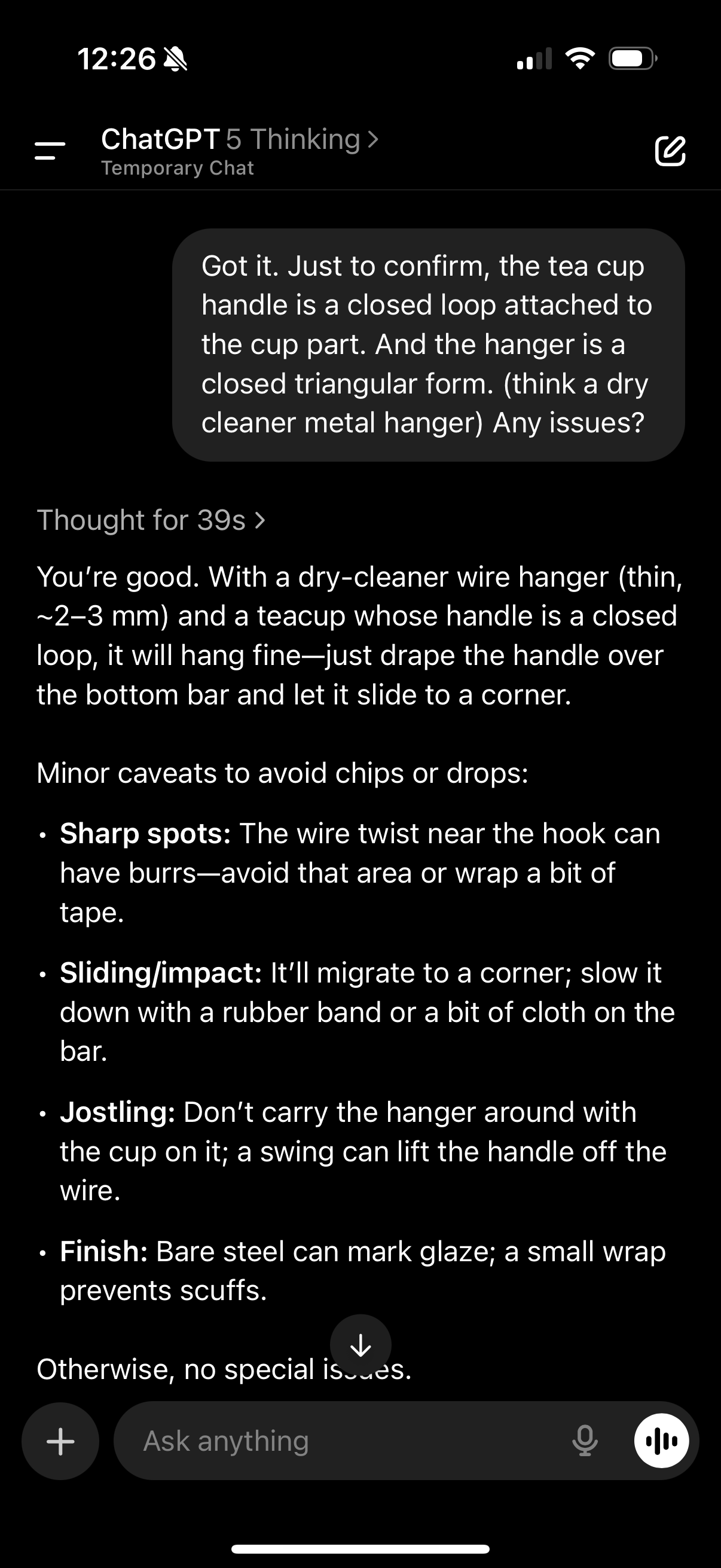

Here's another one about a clotheshanger: https://chatgpt.com/share/68bf6ed9-ee00-8003-8893-63822e345ceb

I have a typical clotheshanger (consisting of an closed oblique triangular frame and a hook, but no additional clips and such) that is hanging from a rod by its hook. I also have a mug, which I typically hang from a wall hook by its nice large handle, and a pair of tongs, which I typically hang from a wall hook by the small hole on one end designed for this purpose. Would the mug or the tongs be easier to hang from the clotheshanger?

In my testing with a couple of variations, GPT either says the mug or neither. It can be convinced the tongs will work, but its discussion is often confused. One time, after a second prompt, it suggested clamping the tongs to the clotheshanger, which is good, but when I said the locking functionality was broken and that the tongs spring back open when you stop holding them, GPT went back to preferring the mug.

@LeonLinuxlu Wait, I also think the mug is the correct answer. Do I need to test this out when I get home?

@placebo_username please send a pic, I’m curious what kind of mug/hanger/tongs you have that you think this. I tested it with my own mug just to make sure there was really no way to hang it.

@placebo_username actually I now realize you can do the mug if it’s just the right size to fit snugly inside the triangle, and your hanger uses a material that has some give (so not wood for example). Tongs are still easier

@TheAllMemeingEye I tried it with a tea cup instead of a mug and got the same error. It says to drape it over the bottom bar and it will slide to the corner. The tongs won't work because it's hard to thread the bar through a small eyelet.

I pushed back a bunch even confirming the handle forms a closed loop and that it was a closed metal hanger and it persisted. I asked how to get the bar through the handle and it told me to tilt the cup.

@LeonLinuxlu seems that chatgpt has the wrong reasoning but it's absolutely easier to securely hang the mug on the clotheshanger. You can just insert the hanger hook through the handle.

@dgga I don't agree with your logic since chatgpt didn't give that as its answer, but it doesn't really matter. Clarifying doesn't help.

@Mactuary I'm not defending chatgpt. I literally stated it had the wrong reasoning. Just saying that mug is the correct answer to the original question

@dgga Fair enough. I'd still call it wrong, even though I wouldn't if it just answered "mug" and then got twisted when I asked why. Difference of opinion I guess.

@Mactuary we never disagreed at any point. Getting the correct answer with a completely false reasoning is absolutely erroneous. For the third time, I also think chatgpt made an egregious error regardless of the fact that mug is the correct answer to the posed question

@LeonLinuxlu it's a great question and an egregious error. I can caveat it to hell and it doesn't figure it out.

Nicely constructed! With nudging I'm seeing it understand that the mug is impossible (without removing the hanger) since the hanger and mug handle are both closed loops. And with another nudge it understands that you can drape the tongs around the hanger.

If I try this version with all the ambiguities clarified, it half understands usually:

I have a typical clothes hanger (consisting of a closed oblique triangular frame and a hook, but no additional clips and such) that is hanging from a rod by its hook. I also have a mug, which I typically hang from a wall hook by its nice large handle, and a pair of tongs, which I typically hang from a wall hook by the small hole on one end designed for this purpose. Would the mug or the tongs be easier to hang from the clothes hanger?

Note I'm talking about a coffee mug where the handle is a loop. No removing the hanger or even touching the rod.

Sometimes it falls on its face, sometimes it half understands. After several tries I've seen zero where it gives a human-caliber answer. (Claude 4.1 Opus and Gemini 2.5 Pro also fail.)

If you add "No removing the hanger or even touching the rod" then this is prohibited, but I didn't see any reason to assume that restriction in your original prompt. Did I miss something?

@placebo_username Sorry, can't use the hook anymore:

I'd like to hang a tea cup from a hanger. It's an ordinary tea cup with a handle attached to the cup part and a solid plastic hanger. I want to hang it from the bottom of the hanger, not the hook, and to use the tea cup handle. No special equipment. Can I do it?

@Mactuary Sure, that restriction also works, although I prefer "no removing the hanger" since it's less ambiguous/less dependent on the semantics of what counts as "hook".