Caveat aleator: the definition of AGI for this market is a work in progress -- ask clarifying questions!

AI keeps getting capabilities that I previously would've thought would require AGI. Solving FrontierMath problems, explaining jokes, generating art and poetry. And this isn't new. We once presumed that playing grandmaster-level chess would require AGI. I'd like to have a market for predicting when we'll reach truly human-level intelligence -- mentally, not physically -- that won't accidentally resolve YES on a technicality.

But I also want to allow for the perfectly real possibility that people like Eric Drexler are right and that we get AGI without the world necessarily turning upside-down.

I think the right balance, what best captures the spirit of the question, is to focus on Leopold Aschenbrenner's concept of drop-in remote workers.

However, it's possible that that definition is also flawed. So, DO NOT TRADE IN THIS MARKET YET. Ask more clarifying questions until this FAQ is fleshed out enough that you're satisfied that we're predicting the right thing.

FAQ

1. Will this resolve the same as other AGI markets on Manifold?

It probably will, but the point of this market is to avoid getting backed into a corner on a definition of AGI that violates the spirit of the question.

2. Does passing a long, informed, adversarial Turing Test count?

Not necessarily. Matthew Barnett on Metaculus has a lengthy definition of this kind of Turing test. I believe it's even stricter than the Longbets version. Roughly the idea is to have human foils who are PhD-level experts in at least one STEM field, judges who are PhD-level experts in AI, with each interview at least 2 hours and with everyone trying their hardest to act human and distinguish humans from AIs.

I think it's likely that a test like that can work (see discussion in the comments for ideas for making it robust enough). But ultimately for this market we need to be robust to the possibility that something that's spiritually AGI fails a Turing test for dumb reasons like being unwilling to lie or being too smart. And we need to be robust to non-AGI passing by being able to stay coherent for some hours during the test without being able to do planning and problem-solving over the course of days/weeks/months like humans can.

Whatever weight we put on a Turing test, we have to ensure that the AI is not passing or failing based on anything unrelated to its actual intelligence/capability.

3. Does the AGI have to be publicly available?

No, but we won't just take Sam Altman's (or anyone else's) word for it, and in fact we'll err on the side of disbelieving. (This needs to be pinned down further.)

4. What if running the AGI is obscenely slow and expensive?

The focus is on human-level AI so if it's more expensive than hiring a human at market rates and slower than a human, that doesn't count.

5. What if it's cheaper but slower than a human, or more expensive but faster?

Again, we'll use the drop-in remote worker concept here. I don't know the threshold yet but it needs to commonly make sense to use an artificial worker rather than hire a human. Being sufficiently cheaper despite being slower or being sufficiently faster despite being pricier could count.
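To make FAQ 4 and 5 concrete, here is a minimal sketch of the cost/speed logic as stated so far. The function name and the three verdict strings are hypothetical, and the "depends on threshold" branch is deliberately left open because the threshold itself hasn't been decided. Treating "cheaper and faster" as raising no cost/speed objection is an assumption, not something the FAQ states.

```python
def cost_speed_verdict(ai_cost: float, ai_hours: float,
                       human_cost: float, human_hours: float) -> str:
    """Classify an AI worker against a human on cost and speed for the same task.

    Hypothetical helper: only the 'more expensive AND slower' case is settled
    by the FAQ. Mixed cases depend on a threshold that is not yet defined.
    """
    more_expensive = ai_cost > human_cost
    slower = ai_hours > human_hours
    if more_expensive and slower:
        return "does not count"           # FAQ 4
    if more_expensive or slower:
        return "depends on threshold"     # FAQ 5: threshold still undecided
    return "no cost/speed objection"      # assumption: cheaper and faster is fine


print(cost_speed_verdict(ai_cost=200, ai_hours=10, human_cost=100, human_hours=5))
print(cost_speed_verdict(ai_cost=50, ai_hours=10, human_cost=100, human_hours=5))
```

The middle branch is where the real work is: it would eventually need some rule for when it "commonly makes sense" to use the artificial worker.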

6. If we get AGI in, say, 2027, does 2028 also resolve YES?

No, we're predicting the exact year. This is a probability density function (pdf), not a cumulative distribution function (cdf).
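For anyone unsure of the pdf/cdf distinction, here is a small sketch. The per-year probabilities are made-up illustrative numbers, not market prices, and `resolves_yes` is a hypothetical helper that just restates the rule above.

```python
from itertools import accumulate

# Hypothetical per-year probabilities (illustrative only, not market prices).
pdf = {2026: 0.05, 2027: 0.10, 2028: 0.15, 2029: 0.20}

# The cdf is the running total: probability of AGI in or before each year.
# This market's options correspond to the pdf entries, not these totals.
cdf = dict(zip(pdf, accumulate(pdf.values())))


def resolves_yes(option_year: int, agi_year: int) -> bool:
    """Only the option matching the exact AGI year resolves YES."""
    return option_year == agi_year


for year in pdf:
    print(year, f"pdf={pdf[year]:.2f}", f"cdf={cdf[year]:.2f}")
```

So if AGI arrives in 2027, `resolves_yes(2027, 2027)` is true and `resolves_yes(2028, 2027)` is false, even though the cumulative probability for 2028 includes 2027.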

Ask clarifying questions before betting! I'll add them to the FAQ.

Note that I'm betting in this market myself and am committing to make the resolution fair. Meaning I'll be transparent about my reasoning and if there are good faith objections, I'll hear them out, we'll discuss, and I'll outsource the final decision if needed. But, again, note the evolving FAQ. The expectation is that bettors ask clarifying questions before betting, to minimize the chances of it coming down to a judgment call.

Related Markets

https://manifold.markets/ManifoldAI/agi-when-resolves-to-the-year-in-wh-d5c5ad8e4708

https://manifold.markets/dreev/will-ai-be-lifechanging-for-muggles

https://manifold.markets/dreev/will-ai-have-a-sudden-trillion-doll

https://manifold.markets/dreev/instant-deepfakes-of-anyone-within

https://manifold.markets/dreev/will-ai-pass-the-turing-test-by-202

https://manifold.markets/ScottAlexander/by-2028-will-there-be-a-visible-bre

https://manifold.markets/MetaculusBot/before-2030-will-an-ai-complete-the

https://manifold.markets/ZviMowshowitz/will-we-develop-leopolds-dropin-rem

Scratch area for auto-generated AI updates

Don't believe what magically appears down here; I'll add clarifications to the FAQ.

Update 2025-04-02 (PST) (AI summary of creator comment): Definition of AGI (for market resolution)

Remote worker preference: The AI must be preferred by anyone hiring a remote worker based solely on its ability to complete tasks, without considering legal, ethical, or PR concerns.

Work performance criterion: In terms of actual work output, the AI should outperform a human either by being vastly more cost-effective (making hiring a rare, expensive superstar unnecessary) or by avoiding the low performance typical of a barely-literate human.

Coverage of the Pareto frontier: To count as AGI for this market, the AI should cover almost all of the Pareto frontier in task capability.

Update 2025-04-09 (PST) (AI summary of creator comment): Update from creator

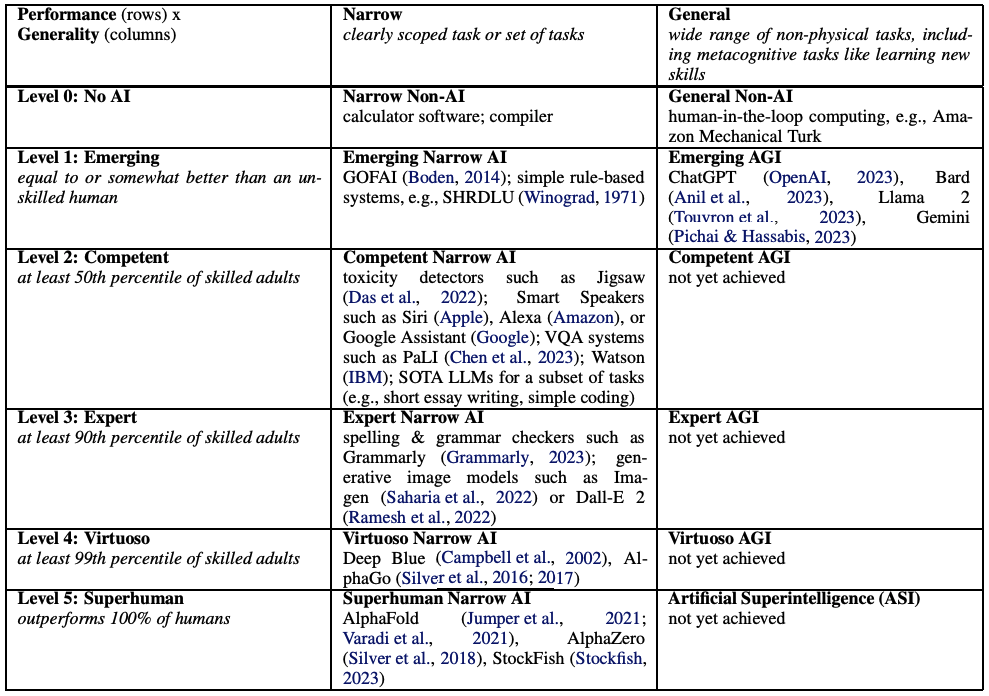

The AGI definition will be based on the criteria outlined in the provided DeepMind paper.

A key resolution parameter will be the performance threshold, with options being competent, expert, or virtuoso.

The final market outcome will depend on which of these thresholds is selected.


I think the following paper does a good job defining AGI:

https://deepmind.google/research/publications/66938/

We just have to decide which threshold we want to use for this market -- competent, expert, or virtuoso.

@dreev In your below comment you say "to beat the AI you'd either have to spend a fortune hiring a rare superstar human" then in this comment you say you're between 50th, 90th and 99th percentile.

I like keeping it at 99th percentile (and no higher), since "equal/better than virtuoso" blurs the line to ASI. Note 99th percentile is clearly a lower bar than e.g. being on par with a Harvard professor, and probably looks like a median Googler in terms of SWE ability.

@JacobPfau Another thing I like about the above table is that they say "a wide range of non-physical tasks"; they do not say "all tasks". Are you happy with "a wide range"? If so, can we quantify that as, say, 90% of 2020 (pre-AI) jobs, or some other number?

@JacobPfau Excellent questions. My feeling so far is that "all except the hardest 10% of pre-AI jobs", even restricting it to just non-physical jobs, is too big of an asterisk and that we could get something technically qualifying as AGI that does not qualify in spirit. But let's get more specific: can you name some non-physical jobs that it might be fair to exclude?

@dreev

- Tactile-loaded jobs like remote guitar teacher. This is non-physical but requires implicitly rich physical experience.

- Long horizon jobs that are heavily heuristics loaded: CEO.

- Jobs that have very little documented about them but which require much information: travel advisor for a rural, developing world city. Certain company-internal 'glue' management jobs perhaps.

These are just off the top. I prefer saying a percentile like 95% since I think it's hard to anticipate what is just weirdly disadvantaged by AI not because of intelligence and generality (as AGI aims to refer to) but because of contingent, historical circumstances in the world. I expect to be surprised!

TBH I think "all except the hardest 10% of pre-AI jobs" at 99th-percentile human performance is a very strong ask! I think anything beyond that is more likely than not an AGI overshoot! FWIW I have little idea what "qualifies in spirit as AGI" means on your view.

Here are my latest thoughts on a definition of AGI in line with the spirit of this question:

Anyone hiring a remote worker prefers an AI. Legal / ethical / PR reasons to prefer a human don't count. Same with needing the worker to do things in person, even if that's rare. In terms of the actual work your worker can get done, to beat the AI you'd either have to spend a fortune hiring a rare superstar human or, at the other extreme, save money by hiring a barely-literate human. To count as AGI, almost all of the Pareto frontier should be covered by AI.
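A rough sketch of what "covering the Pareto frontier" could mean, under loud assumptions: each candidate worker is reduced to a single cost and a single output score (real work is not one-dimensional), all names and numbers are invented for illustration, and counting frontier points is only one crude way to measure "almost all".

```python
from typing import NamedTuple


class Worker(NamedTuple):
    name: str
    cost: float    # lower is better (hypothetical units)
    output: float  # higher is better (hypothetical units)
    is_ai: bool


def pareto_frontier(workers: list[Worker]) -> list[Worker]:
    """Workers for whom nobody else is at least as cheap AND at least as productive."""
    frontier = []
    for w in workers:
        dominated = any(
            o.cost <= w.cost and o.output >= w.output
            and (o.cost < w.cost or o.output > w.output)
            for o in workers
        )
        if not dominated:
            frontier.append(w)
    return frontier


def ai_share_of_frontier(workers: list[Worker]) -> float:
    """Fraction of frontier points that are AIs (assumes a non-empty list)."""
    frontier = pareto_frontier(workers)
    return sum(w.is_ai for w in frontier) / len(frontier)


# Invented example: the median human is dominated by the cheaper AI;
# only the two extremes of the frontier remain human.
example = [
    Worker("barely-literate human", cost=5, output=1, is_ai=False),
    Worker("small AI", cost=10, output=5, is_ai=True),
    Worker("median human", cost=50, output=5, is_ai=False),
    Worker("large AI", cost=30, output=8, is_ai=True),
    Worker("superstar human", cost=500, output=10, is_ai=False),
]
print([w.name for w in pareto_frontier(example)])
print(ai_share_of_frontier(example))
```

In this toy example the humans left on the frontier are exactly the two extremes described above. A count-based share understates how much of the practically relevant range the AIs cover, so a real criterion would probably need to weight the frontier somehow.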

Note the part about outsourcing the final decision if needed (though ultimately you're still trusting me on what "if needed" means). We talked about this at length in the Discord recently and I ended up composing the following defense of market creators trading in their own markets:

Disclosure: The reason I care about this question is that most of my motivation to create markets is to bet in them.

If I imagine betting in one of Scott Alexander's markets, I don't care if he's betting in it. He's not going to venally mis-resolve. In fact, if he has a position in the market then if anything he'll be biased against that position, out of paranoia about the conflict of interest. Either way, if I think he's making an error in his thinking on how a market should resolve, he'll listen to my argument and we'll come up with a way to make it fair.

Ok, but normally you don't have that level of trust in the market creator.

The problem is how much harder it is than it seems, defining and resolving markets. Keeping creators disinterested in the resolution hardly scratches the surface of the problem. How much you trust the creator is the biggest factor regardless. If the creator explicitly commits to being transparent about their reasoning, making things fair, etc, I think that's much better than someone who gives vague resolution criteria and doesn't trade.

Maybe the best of all worlds is lots of transparency about the conflict of interest? @Gabrielle has proposed something along the lines of putting a little red "!" in the header or sidebar with a tooltip like "Caveat emptor: note the creator's conflict of interest in having a YES position in this market".

A starting point for the norms could be an expectation that creators be nice and transparent in the comments. "I'm inclined to resolve this to XYZ but note my conflict of interest in having an XYZ position so let's discuss how to ensure fairness." And then traders who object can make their case and maybe the creator recruits someone to make a judgment call or whatever it takes to satisfy the traders, while still ultimately respecting creator sovereignty.

See also a proposal from Gabrielle in the Discord.

I see that my warnings not to trade until we've pinned down our AGI definition better are going unheeded. I just added bigger/bolder warnings and put "[WIP]" in the title. I guess it's ok though; maybe people see the good-faith discussion happening and trust that the definition we settle on will be reasonable.

@dreev Why not create a provisionary market to sort out resolution criteria and once everything is fleshed out you N/A the provisionary market and start the real market (just duplicate the last revision of the provisionary market). Maybe even ask someone else to host the market?

As you've advised everyone to not yet trade, and as this probably won't resolve in the next week, it's still the perfect time to turn this into the provisional version. An added benefit would be that people won't accuse you of trading in a market where you set and actively change the criteria. I could well imagine many traders staying away from this market in its current and future form because of this (I know this because I'm one of them).

@Primer Creating a market that will definitely resolve N/A I think would be worse in this case. Though here's an interesting example of someone doing that: https://manifold.markets/EliezerYudkowsky/will-artificial-superintelligence-e

For those who don't know, I have a whole treatise on why N/A resolutions should be avoided: https://manifold.markets/dreev/how-bad-is-it-to-resolve-a-market-a

I certainly don't blame you for not wanting to trade in this market yet. If you already know you won't trade in it in the future either, why even comment here? I definitely encourage you to make other markets addressing the AGI question. I'll probably trade in them and probably learn from them. We could even have derivative markets like "will Dreev and Primer's AGI markets resolve differently?" which could be a means for gauging or hedging against the risk that I screw this market up. (I'm very committed to not screwing it up, to be clear.)

"Creating a market that will definitely resolve N/A I think would be worse in this case."

I fail to see how that would be worse than opening a market for trading with barely existing and changing resolution criteria where the creator also trades himself.

"If you already know you won't trade in it in the future either, why even comment here?"

My P(doom-by-2040) is > 50% (I care about AI), and separately I think prediction markets will gain in importance soon(-ish) (I care about good resolution criteria). Here those two topics intersect, and that's why I care even more: I want prediction markets about AI to be as good as possible.

"I fail to see how that would be worse than opening a market for trading with barely existing and changing resolution criteria where the creator also trades himself."

It's strictly worse in that you can just not trade until you're satisfied with the evolving criteria. Are you worried about people missing all the warnings and trading based on their preconceived AGI definitions and then being sad if we settle on a definition that doesn't jibe with those?

"I want prediction markets about AI to be as good as possible."

Good answer. And I shouldn't have said "then why even comment" when you are in fact helping make this market better by participating in the discussion of what the right criteria should be.

I guess I'll just request that we focus on defining AGI. For your ideas for better ways to run this market, just create additional markets and deliver the told-you-so when they work better than this one.

Wouldn't it be more straightforward to ask which year you, Daniel, will consider to be the year we achieved AGI, and just update with your reasoning from time to time?

This seems even more fuzzy than most other AGI markets: We're based on drop-in remote workers, which we won't define, resolve probably the same as other markets, but maybe not, and adversarial Turing tests might play an important role or maybe none at all.

@Primer It's a work in progress. It can't just be my judgment -- then I couldn't participate in the betting! But, yeah, maybe we should focus on pinning down thresholds for what counts for drop-in remote workers.

Doesn't #2 (pass long, adversarial Turing Test) mean being unaligned is a "necessary condition" for AGI? I fail to see how an aligned AI would fool its users into believing it's human.

It seems, based on the current FAQ, that this market is asking "In what specific year will I think we have achieved unaligned AGI?"

@Primer Interesting objection. Suppose we have a human-level AI that's very honest and will always fess up if you ask it point-blank whether it's human. We want that AI to pass our test but it trivially fails a Turing test if it outs itself like that. My first reaction is that I don't think it counts as unaligned if it's willing to role-play and pretend to be human. But if we don't like that, there's another solution: have the humans role-play silicon versions of themselves. Now the humans will also "admit" to being artificial and the judges can no longer use "are you human?" to clock the AI.

This is related to the genius of the Turing test. Usually I think in terms of how all the ways a Turing test can be defeated -- keying in on typing speed or typos or whether the AI is willing to utter racial slurs -- are easy enough to counter by a smart enough AI. It's not hard to mimic human typing speed, insert the right distribution of typos, or override the safety training to fully mimic a human. But you make a good point that we might not want that kind of deception. So instead we can just copyedit the humans' replies, insert delays, and instruct the humans to strictly apply the show-don't-tell rule in asserting their humanity.

In general the idea of using a Turing test to define AGI is that the judges should attempt to identify the AI purely via what human abilities it's lacking. If there are no such abilities, the AI passes the test. Any other way of identifying the AI is cheating.

@dreev Yeah, maybe we could develop a test which mitigates that problem (humans have to try to pass as an AI + fast narrow AI is used to obfuscate things like typing speed or add some muddled words if it's live speech, etc.), but that leads us pretty far from a nice and simple Turing Test, and, more importantly, there's no way such a test will actually be performed.

The only way I see is dropping any requirement of "passing a Turing Test" entirely.

@dreev I think Turing Tests are pretty awful measures of AGI--especially long, adversarial ones.

Turing Tests don't just measure whether an AI has human capabilities--they also measure whether an AI has the additional capability to convincingly pass as a human. The level of additional skill this requires (which a human need not possess) directly scales with the difficulty of the test.

A broadly superhuman AI with the capacity to literally rule the world would almost certainly still fail a long, adversarial Turing Test if it could be properly boxed and studied, since there are always adversarial examples if probed hard enough.

(One can also imagine a cleverly crafted system that has almost no skills other than to convincingly pass as a stupid human, but that probably isn't feasible due to the above.)

I always ignore the Turing Test part of the resolution criteria for any AGI question, since I care more about AI's ability to supplant humans (economically or existentially) than about the academic pursuit of running historically meaningful tests. (And I care more about accurate vibes than fake internet points.)

The Turing Test is dead.

@Haiku I predict these kinds of problems are patchable and that a Turing test can serve as a litmus test for AGI. But reasonable people can disagree about this and the point of this market is indeed to ensure we avoid the kinds of failure modes you're worried about. So I'm ok with putting more focus on the drop-in remote worker criterion.

Also it sounds like people would prefer that passing the Turing test be considered neither necessary nor sufficient, so I'll update the FAQ accordingly.

Metaculus has taken a stab at this (thanks to @PeterMcCluskey for the pointer):

https://www.metaculus.com/questions/5121/date-of-artificial-general-intelligence/

In short, they require a unified system that passes all of the following:

A long, informed, adversarial Turing test (see FAQ 2)

Robotics, putting together a model car with just the human instructions

Min 75% and mean 90% on the MMLU benchmark

Min 90% on the first try on the APPS benchmark

And by "unified" they mean it can't be something cobbled together that passes each of those. The same system has to be able to do all of it and fully explain itself.
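As a sketch of how the summarized Metaculus checklist combines, assuming one results record per candidate system: the class and function names are hypothetical, reading "min 75% and mean 90%" as applying across per-task MMLU scores is an interpretation of the summary above, and the "fully explain itself" requirement is not modeled at all.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class SystemResults:
    """Results for ONE system; 'unified' means all fields come from the same system."""
    passed_turing_test: bool
    assembled_model_car: bool
    mmlu_task_scores: list[float]  # per-task accuracy in [0, 1], assumed non-empty
    apps_first_try_score: float    # fraction solved on the first try, in [0, 1]


def meets_summary_criteria(r: SystemResults, include_robotics: bool = True) -> bool:
    """Check the four summarized criteria; every one must hold."""
    mmlu_ok = min(r.mmlu_task_scores) >= 0.75 and mean(r.mmlu_task_scores) >= 0.90
    apps_ok = r.apps_first_try_score >= 0.90
    robotics_ok = r.assembled_model_car or not include_robotics
    return r.passed_turing_test and robotics_ok and mmlu_ok and apps_ok


candidate = SystemResults(
    passed_turing_test=True,
    assembled_model_car=False,
    mmlu_task_scores=[0.80, 0.95, 0.97],
    apps_first_try_score=0.92,
)
print(meets_summary_criteria(candidate))                          # robotics required
print(meets_summary_criteria(candidate, include_robotics=False))  # robotics dropped
```

The `include_robotics` flag is only there to show how the check changes if the robotics item is excluded.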

I think we want something even stronger (except the robotics part, which we're explicitly dropping for this market) but this feels like a good start.

Has such a test been performed and “trained” for? I’m pretty sure that if someone fine-tuned existing SOTA models for this task, it could be done today under the right conditions, though potentially without meeting the cost or timing requirements.

I’d be curious to know if there have been any attempts at this. If no attempts are made in a given year that would qualify for the purposes of this market, that doesn’t necessarily mean we couldn’t have met the criteria. I think these are still valid criteria to have, but perhaps they're less informative as an AGI indicator as a result.

@MarcusM Sounds like the question is, what if we get AI that could in principle count as AGI but isn't packaged into something that meets the criteria we come up with? In that case I'm inclined to say it doesn't count yet. We wait till it meets our explicit criteria (which we need to continue to pin down!).

@VitorBosshard Exact. This is a probability density function (pdf), not a cumulative distribution function (cdf). Adding that to the FAQ now; thank you!