Preface / Inspiration:

There are a lot of questions on Manifold about whether or not we'll see sentience, general A.I., and a lot of other nonsense and faith-based questions which rely on the market maker's interpretation and often close at some far distant point in the future when a lot of us will be dead. This is an effort to create meaningful bets on important A.I. questions which are referenced by a third party.

Market Description

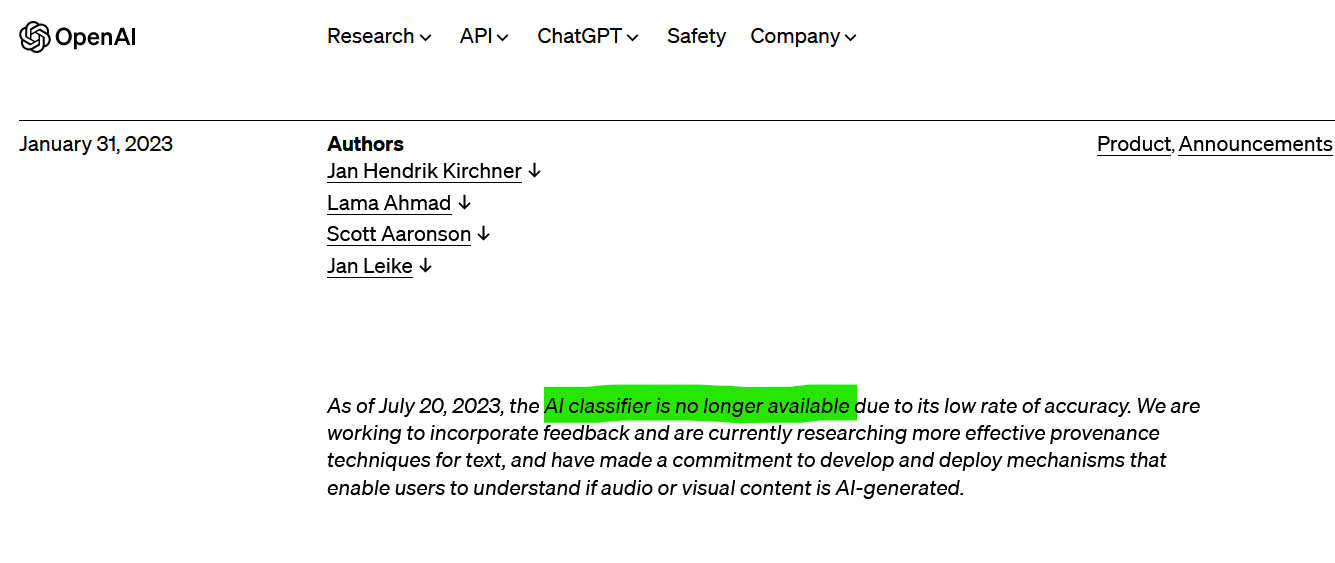

Classification of LLM-generated content has a wide variety of applications. OpenAI released a new classifier in January, along with self-reported true-positive and false-positive rates.

https://openai.com/blog/new-ai-classifier-for-indicating-ai-written-text

Our classifier is not fully reliable. In our evaluations on a “challenge set” of English texts, our classifier correctly identifies 26% of AI-written text (true positives) as “likely AI-written,” while incorrectly labeling human-written text as AI-written 9% of the time (false positives). Our classifier’s reliability typically improves as the length of the input text increases. Compared to our previously released classifier, this new classifier is significantly more reliable on text from more recent AI systems.

Resolution Threshold

By the end of the year, will the self-reported net rate improve at all?

The rating will be calculated as follows:

TRUE_POSITIVE - FALSE_POSITIVE = NET

So the current score is: 26% - 9% = 17%

We would need to see anything higher than 17%, rounded up from the 0.1 digit.
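As a rough sketch of the arithmetic above, the net rate and the comparison against the current score could be written as follows. The function names and the example figures in the usage lines are hypothetical and only for illustration; the 26% and 9% values are the ones from the OpenAI post quoted above. The rounding rule is not modeled here, so this is just the plain strict comparison.

```python
# Baseline figures self-reported by OpenAI (percent), as quoted in the market description.
BASELINE_TRUE_POSITIVE = 26.0
BASELINE_FALSE_POSITIVE = 9.0


def net_rate(true_positive_pct: float, false_positive_pct: float) -> float:
    """NET = TRUE_POSITIVE - FALSE_POSITIVE, in percentage points."""
    return true_positive_pct - false_positive_pct


def improves_on_baseline(true_positive_pct: float, false_positive_pct: float) -> bool:
    """True if a newly reported net rate is strictly higher than the baseline net rate.

    Assumption: no rounding is applied before comparing.
    """
    baseline = net_rate(BASELINE_TRUE_POSITIVE, BASELINE_FALSE_POSITIVE)
    return net_rate(true_positive_pct, false_positive_pct) > baseline


# Current score from the description: 26% - 9% = 17%
print(net_rate(BASELINE_TRUE_POSITIVE, BASELINE_FALSE_POSITIVE))

# Hypothetical future figures, made up purely to show the comparison.
print(improves_on_baseline(30.0, 9.0))
print(improves_on_baseline(26.0, 9.0))
```

Under this reading, unchanged figures would not count as an improvement, since the comparison is strictly greater than 17%.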

🏅 Top traders

| # | Name | Total profit |
|---|---|---|
| 1 | | Ṁ71 |
| 2 | | Ṁ29 |
| 3 | | Ṁ24 |
| 4 | | Ṁ13 |
| 5 | | Ṁ13 |

@PatrickDelaney could you resolve?

If OpenAI doesn't release any new figures, it could be accepted as either N/A or NO.

I don't believe OpenAI released any new figures.

@PatrickDelaney That looks like a user-created GPT. I don't see any evidence that it's good at LLM detection, and in general, this is still seen by the ML community as a problem with no decent solution and, possibly, to which there cannot be any decent solution, at least sustainably. I don't know anything about Originality AI. For what it's worth, among researchers, I haven't seen any detection tool show up in 2023 that people thought was any good at this. Now 11 days after 2023, I expect that, if OpenAI releases any new figures, they won't be for 2023, so I don't think we'll get any more news for this market.

But I also think the debates around this, and all the purported detectors that have bubbled up and will inevitably continue to bubble up, are a good reason to have predictions about more particular dynamics like one company's self-reported figures, surveys of researchers, papers at conferences, etc. In this case, I'm not sure how you intended to choose between N/A and NO if OpenAI didn't release any new figures.

@Jacy Not sure, still evaluating. @ithaca and @HenriThunberg care to comment as the only YES holders?

@Jacy I'll give a few days for the YES holders to comment anything that they may have found, and then make a ruling so this isn't hanging out there too long.

If this were at something like 3% I might just resolve NO, but 14% is less definitive than a lot of the other markets I have been resolving lately related to AI/ML. Intuitively I want to say I agree with everything you said but I think we need to stick to the evidence.

I did mention that OpenAI should be the main source of info. I'm just wondering if I'm missing anything cited anywhere else that OpenAI did claim, though perhaps they don't have it on their website anymore.

@PatrickDelaney I don't have enough mana on the line to dig any deeper into this, I take your investigation to be enough and would not object to a NO resolution. I indeed haven't gotten the feeling that there has been progress on this. Thanks for giving the chance to comment!

Building reliable watermarking and detection is part of the voluntary commitments all big AI companies made in July. https://www.whitehouse.gov/briefing-room/statements-releases/2023/07/21/fact-sheet-biden-harris-administration-secures-voluntary-commitments-from-leading-artificial-intelligence-companies-to-manage-the-risks-posed-by-ai/

@HenriThunberg good questions.

Yes, this is OpenAI only, for now.

If OpenAI doesn't release any new figures, it could be accepted as either N/A or NO. However, to be able to robustly resolve this question as NO, I think it would be best to figure out other metrics, in addition to OpenAI's, that we could track, either as an average or in parallel with individual thresholds.

As for what happens after the closing date...hmmm...as the market maker I have to make a somewhat arbitrary decision here. We have to cut it off at some point, and AI seems to move fast, so I would say we keep the end date as 31 Dec 2023 and take that to mean, "probably the majority of the work is done for the year," treating it as a snapshot for the year.

I could revise the question to say, "Will LLM Detection Get Better At Any Point in 2023," to try to reflect this.

Please let me know what you think. I can dole out payments to any human bettors on this market to offset any potential losses. I am happy to pay you a token amount in the comments here for helping me to improve this market.