OpenAI's original definition for AGI is as follows:

"By AGI, we mean highly autonomous systems that outperform humans at most economically valuable work."

This is the definition used for the purposes of evaluating this prediction unless there's a change proposed by the board (or another governing body of OpenAI with such permissions as to modify the charter) of OpenAI to this definition.

By "hint at", I mean that, instead of making a direct claim, OpenAI takes actions that were otherwise reserved for the special case of having achieved AGI. Since it is not possible to define something as intuitive as "hint at" a priori, I will judge that part subjectively, and I will not trade in this market to avoid a conflict of interest.

"Hint at" could be understood as a weak claim to AGI by OpenAI's official actions or statements.

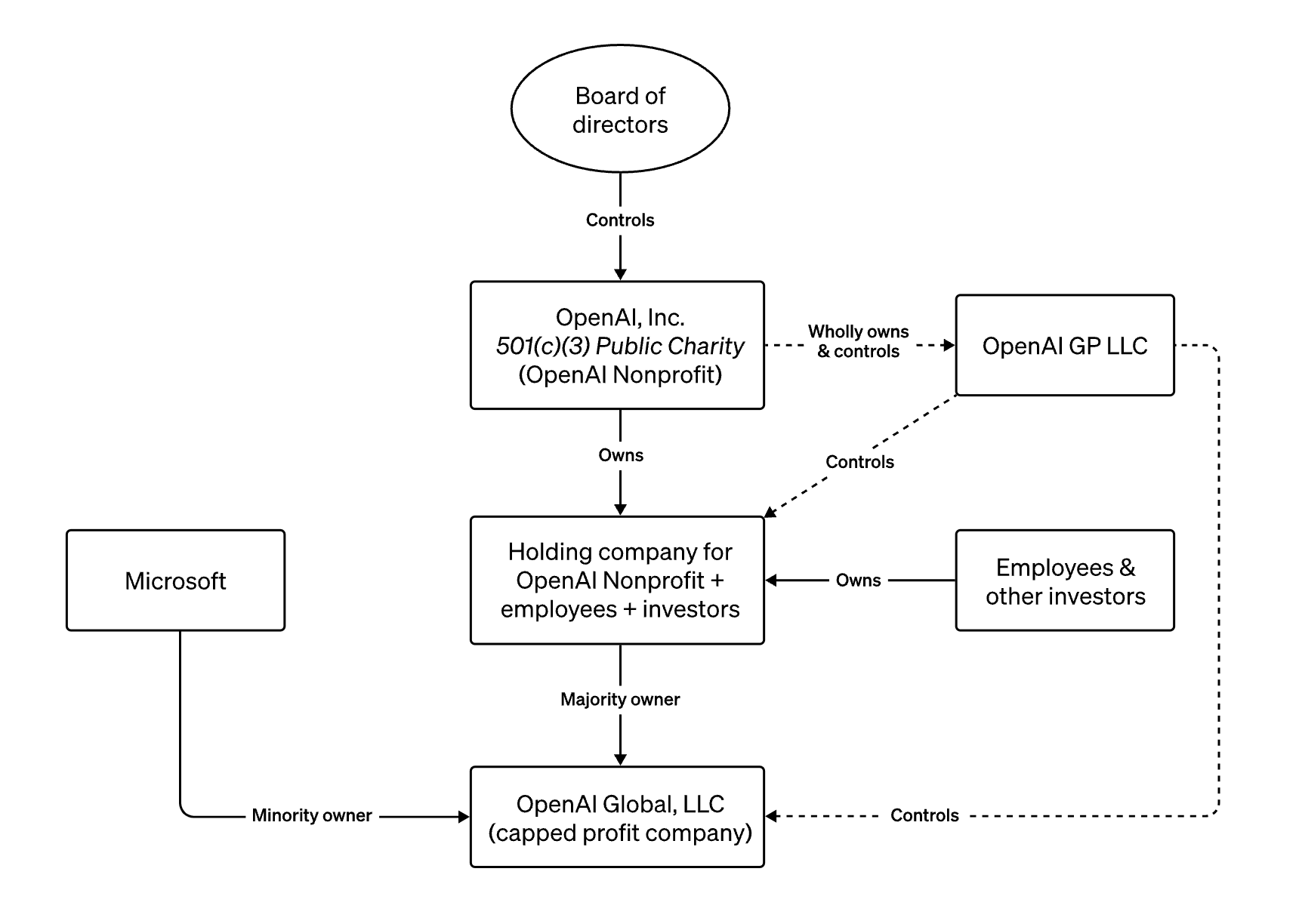

Here is a diagram illustrating the governance structure of OpenAI:

The following is a quote from OpenAI's original post, "OpenAI's structure":

> Fifth, the board determines when we've attained AGI. Again, by AGI we mean a highly autonomous system that outperforms humans at most economically valuable work. Such a system is excluded from IP licenses and other commercial terms with Microsoft, which only apply to pre-AGI technology.

An action that would "hint at" OpenAI achieving AGI would be the exclusion of a specific state-of-the-art AI system from IP licenses and other commercial terms with Microsoft while OpenAI's partnership with Microsoft remains structurally more or less the same (although its composition might change).

The same market for a longer time frame is below:


OpenAI has five levels of AI.

Levels 1 and 2 have already been achieved.

Level 3 is agents, and level 4 is AI that can aid in invention.

So we can assume AGI falls somewhere between levels 3 and 4, or maybe at level 4.

As of 2025, level 3 hasn't been fully achieved; only basic web-browser-based agents exist.

But once "agents" are widely deployed, we could say we're much closer to AGI, so maybe AGI hints will start appearing around the beginning of 2026.

@OshaPermadi Do you think they will claim to have achieved AGI before June? With what probability? If you think it's likely, I can create a market on it. It basically needs to be something publicly observable, though; if they achieve it internally and keep quiet about it, we can't really make a market on it.

Would the stuff about strawberries etc. have counted positively for this in 2024?

(We now know the outcome was lackluster, but the hype was significant)

It would make sense to have the @mods who will resolve this question share their current thinking here.

Right now we're betting on how some mod will interpret how the creator and/or the (majority of?) traders interpreted the market, or would have interpreted it had he/they known about the change in the agreement between OpenAI and Microsoft.

My take: This was supposed to be a proxy for "Does OpenAI think it created AGI", by using their agreement with Microsoft as a proxy. That agreement changed in a substantial way and I suppose this market would not exist in the alternative world where the current OpenAI-Microsoft agreement holds.

@Primer (The following is just my reading of the market description.)

> This was supposed to be a proxy for "Does OpenAI think it created AGI", by using their agreement with Microsoft as a proxy.

Agreed, except that it's not just the Microsoft contract, it's also the OpenAI charter itself.

> That agreement changed in a substantial way and I suppose this market would not exist in the alternative world where the current OpenAI-Microsoft agreement holds.

Since it's defined in the OpenAI charter, I don't think there's an issue with the agreement changing. Furthermore, see the part of the description about changing the definition of AGI:

> This is the definition used for the purposes of evaluating this prediction unless there's a change proposed by the board (or another governing body of OpenAI with such permissions as to modify the charter) of OpenAI to this definition.

So if the definition changes, the new definition is what the market references.

@jack Thanks for your input!

It would probably be nice to know how these hypotheticals resolve:

- OpenAI claims "We achieved what we would consider AGI" AND profits < 100B
- No claim AND profits > 100B

@Primer If they literally claim AGI, then that's a clear YES. The market is "hint at" or "claim".

If they make profits > 100B but still don't take any specific action related to the AGI clause, that seems like a NO.

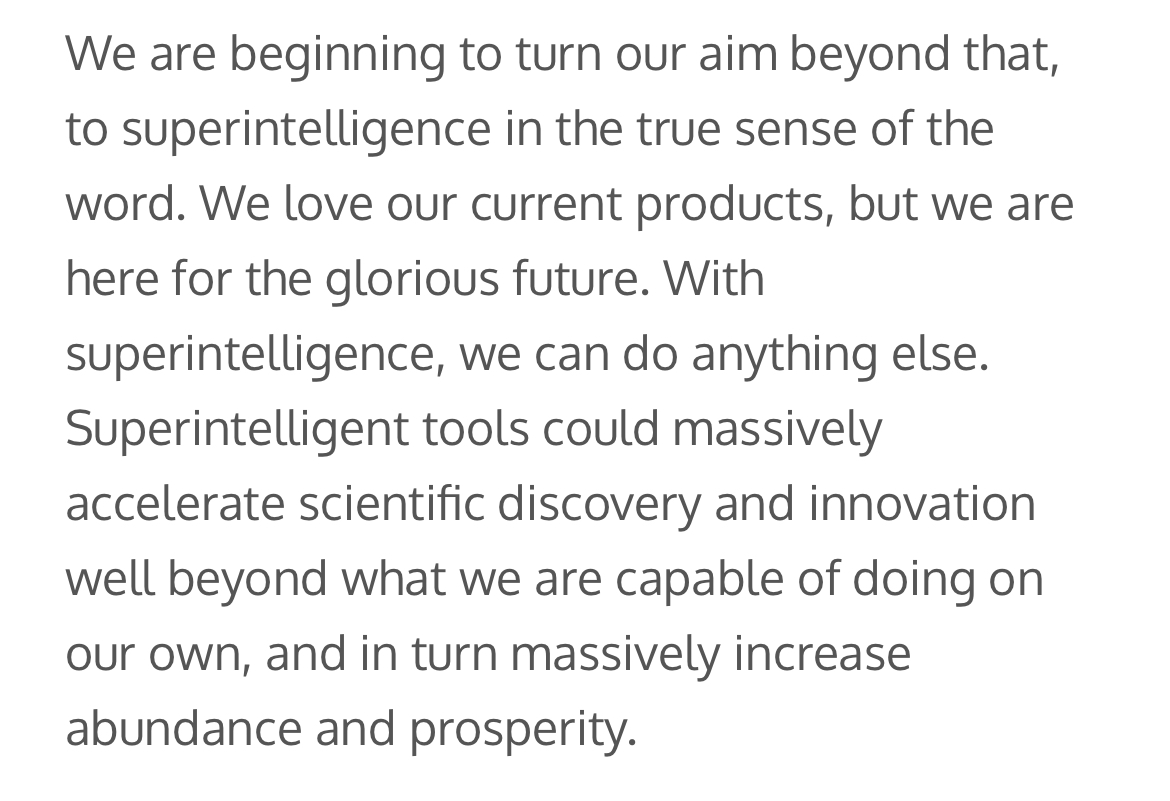

"We are now confident we know how to build AGI as we have traditionally understood it. We believe that, in 2025, we may see the first AI agents “join the workforce” and materially change the output of companies. We continue to believe that iteratively putting great tools in the hands of people leads to great, broadly-distributed outcomes."

https://blog.samaltman.com

@CryptoNeoLiberalist I had no idea Carter was involved with OpenAI. Can you post a link with more info? Thanks

@Bayesian I bet NO before, but I gotta admit it's super cool that we're at the point where it's open to interpretation whether we're going to hit AGI. I would have imagined that would take a few more years.

"Hint at" has a very particular meaning in this market - read the description. It's still quite unclear, but as I understand it, the intent is to capture OpenAI directly or indirectly invoking the AGI clause in their charter and/or contract with Microsoft

@jack @Simon74fe having the creator of an AGI benchmark imply that there's a CHANCE that your model is AGI seems like a borderline hint, tbh, but I agree this might not yet meet the criteria described by the creator.

I also personally do not think this is AGI by any means, lol