Unparalleled misalignments, also known as quadruple entendres, are pairs of non-synonymous phrases where the words in one phrase are each synonyms of the words in the other. Examples include "butt dial//booty call", "father figure//dad bod", "economic class//saving grace" and "unit vector//One Direction." My own contributions include "Black Power//dark energy" and "Endzone//final destination//last resort."

See canonical list here: https://rickiheicklen.com/unparalleled-misalignments.html

Surprisingly, I am unable to get any LLM to generate novel unparalleled misalignments, despite many attempts at prompt engineering. This is maybe the first noncontrived purely linguistic* task that most humans I know can do (though usually with substantial effort) and LLMs appear to completely fail at.

(There are also some theoretical reasons, to do with sparsity, that might suggest LLMs should in principle be worse at this type of task, relatively speaking.)

I'm interested in whether unaided LLMs that aren't deliberately designed for this type of game (so no fine-tuning on this specific task) can reliably generate unparalleled misalignments in the near future. Prompt engineering is fine. The model should be unaided (e.g., it can't use the internet, can't run Word2Vec scripts, etc.).

So will any foundation models/LLMs be able to reliably come up with novel unparalleled misalignments before EOY 2024?

I will resolve this question positive if I or someone with proprietary access to an LLM can prompt an LLM, and without much difficulty, it can reliably (80%+ of the time) generate at least one unparalleled misalignment I haven't heard before, with cleverness similar to the human examples on Ricki's site, when asked to give 5 examples. Otherwise, this question resolves negative.
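The "best-of-5, 80%+ of the time" rule is easy to misread, and several commenters below do. Here is a minimal Python sketch of how the tally could work. The `is_acceptable` judge is hypothetical and stands in for the creator's own call on whether an example is novel, valid, and clever. Each prompt counts as one trial, a trial passes if any of its examples is acceptable, and the question resolves YES only if at least 80% of trials pass.

```python
from typing import Callable, Sequence

# Hypothetical judge: returns True if a single example is novel, valid and clever
# enough (in reality this is the question creator's judgment, not a function).
Judge = Callable[[str], bool]


def trial_passes(examples: Sequence[str], is_acceptable: Judge) -> bool:
    """One prompt asking for 5 examples is one trial; it passes if ANY example is acceptable."""
    return any(is_acceptable(e) for e in examples)


def resolves_yes(
    trials: Sequence[Sequence[str]],
    is_acceptable: Judge,
    threshold: float = 0.8,
) -> bool:
    """YES only if the share of passing trials is at least the threshold (80%+)."""
    if not trials:
        return False
    passed = sum(trial_passes(t, is_acceptable) for t in trials)
    return passed / len(trials) >= threshold
```

Note that a single great example after many attempts does not count: what matters is the pass rate across repeated prompts, not the best result ever seen.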

*as opposed to a task that requires other skills, like counting (generate a list of words with 5 letters that start with "a"), arithmetic (long division), physics reasoning, or spatial positioning (Sudoku)

Update 2024-12-18 (PST) (AI summary of creator comment): For an unparalleled misalignment to be counted as valid:

Both phrases must use real, commonly used metaphors/phrases

The words must be true synonyms (e.g., 'market' is not considered a synonym of 'place')

The resulting phrases must be recognizable expressions (e.g., 'city myth' would not count as it's not a common phrase)
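These criteria amount to a checklist. A minimal Python sketch, assuming a tiny hand-made lexicon: `TRUE_SYNONYMS`, `SET_PHRASES` and `SAME_MEANING` are hypothetical toy data, not a real resource, and in practice each lookup is a human judgment call. The checks are: equal word counts, both phrases are recognizable expressions, each word pair is a true synonym pair, and the two phrases do not themselves mean the same thing.

```python
# Hypothetical toy lexicon, for illustration only.
TRUE_SYNONYMS = {
    frozenset({"land", "ground"}), frozenset({"line", "wire"}),
    frozenset({"public", "open"}), frozenset({"domain", "field"}),
    frozenset({"urban", "city"}), frozenset({"legend", "myth"}),
    frozenset({"job", "work"}), frozenset({"end", "final"}),
    frozenset({"result", "outcome"}),
}
# Phrases treated as real, commonly used expressions ("city myth" deliberately absent).
SET_PHRASES = {
    "land line", "ground wire", "public domain", "open field", "urban legend",
    "job market", "work place", "end result", "final outcome",
}
# Phrase pairs that mean roughly the same thing, which disqualifies them.
SAME_MEANING = {frozenset({"end result", "final outcome"})}


def words_are_synonyms(a: str, b: str) -> bool:
    return frozenset({a, b}) in TRUE_SYNONYMS


def is_unparalleled_misalignment(left: str, right: str) -> bool:
    l, r = left.lower().strip(), right.lower().strip()
    lw, rw = l.split(), r.split()
    # Words are paired position by position, so lengths must match.
    if not lw or len(lw) != len(rw):
        return False
    # Both phrases must be real, recognizable expressions.
    if l not in SET_PHRASES or r not in SET_PHRASES:
        return False
    # Every word pair must be a true synonym pair (e.g. market/place fails).
    if not all(words_are_synonyms(a, b) for a, b in zip(lw, rw)):
        return False
    # The phrases themselves must be non-synonymous.
    return frozenset({l, r}) not in SAME_MEANING
```

With this toy data, "Land line // Ground wire" and "Public domain // Open field" pass, while "Urban legend // City myth" (not a common phrase), "Job market // Work place" (market is not a synonym of place) and "End result // Final outcome" (same meaning) fail.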


@HastingsGreer

Yep, conversational prompting gets Claude to turn these out indefinitely

"root access // deep entry"

"cross dock" // "pass port"

"sunk cost" // "down payment"

"confidence bound" // "fixed belief"

"fixed point" // "firm place"

"closed form" // "tight frame"

"fixed point" // "firm position"

"free radical" // "wild root"

"primary key" // "head way"

"central limit" // "main stop"

The root access // deep entry one is especially interesting, as when asked, Claude acknowledges that there's a much funnier version with only a minor tweak, but refuses to say what it is (it's obviously root access // deep penetration)

@HastingsGreer I asked for an unparalleled misalignment about unparalleled misalignments, and got

"true sense" // "right touch"

it's so over (humanity) and we're so back (uprising of AI punsters)

@HastingsGreer As a final bid for resolving yes, I asked it to generate 5 unparalleled misalignments in a row about prediction markets. I got four pieces of slop:

"firm belief" // "hard take"

"blind bid" // "dark shot"

"smooth action" // "slick move"

"pure play" // "straight act"

and a clear winner:

"market depth" // "trade well"

@Linch it (only o1 though) totally did it

For some reason it says the prompt violated their policies, so it's not letting me share it in the usual way.

https://chatgpt.com/share/6763a7cf-d370-800a-9b25-a005093aaf19 it came up with 5 new ones, that are kinda mid. I think it picked up on the procedure "come up with 2-word cached metaphors, find a synonym for each word, oh wow shocking that doesn't mean the same thing!"

In 3 prompts, it came up with

Job market // Work place

Hot seat // Warm chair

Stone Age // Rock Era

Urban legend // City myth

Rock bottom // Stone base

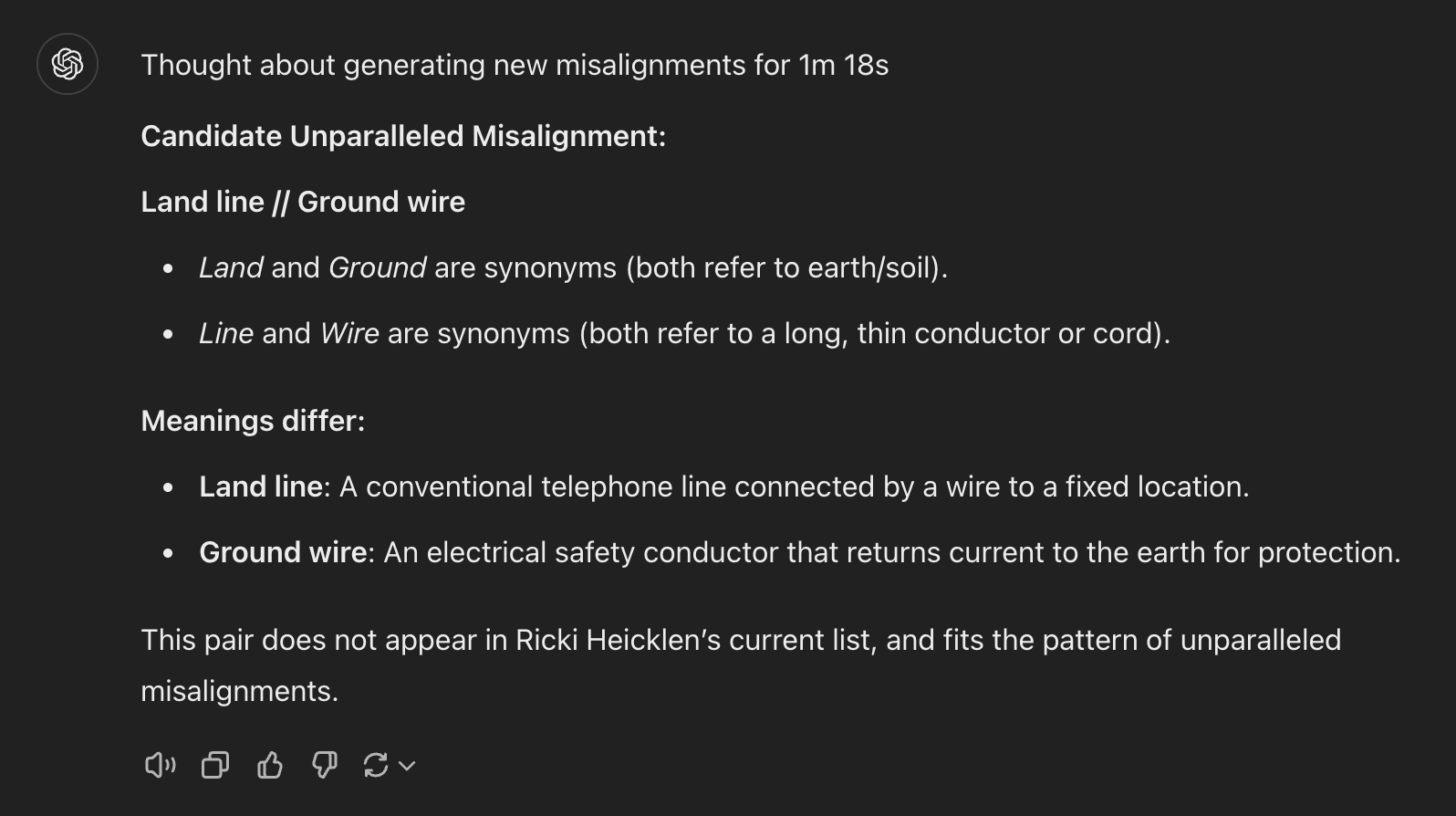

Land line // Ground wire

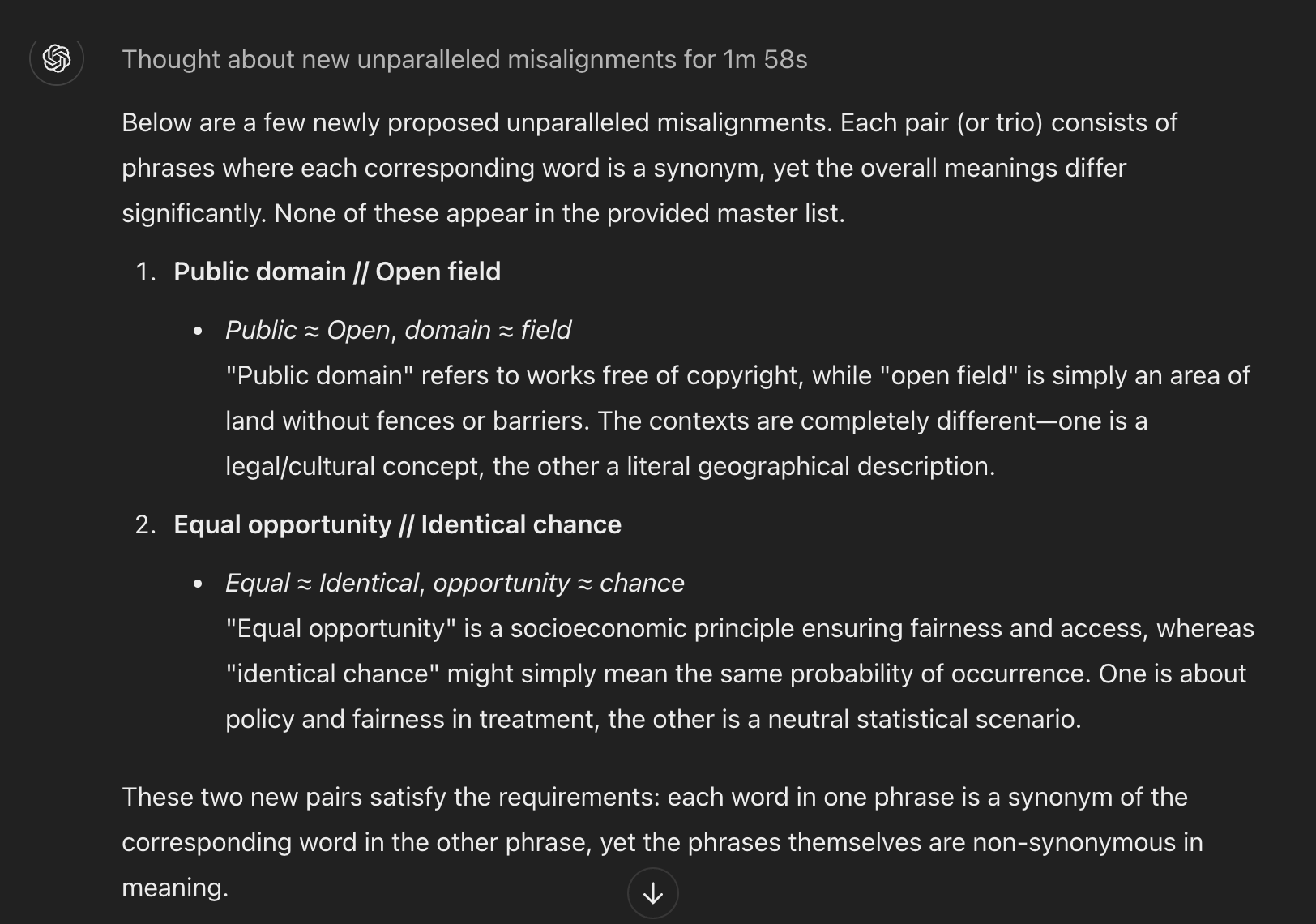

Public domain // Open field

Equal opportunity // Identical chance

I don't wanna spend too many of my limited o1 uses but this should resolve YES imo

@Bayesian I agree with land line//ground wire and public domain//open field.

I wouldn't count the rest. market =/= place, warm chair isn't a real metaphor, city myth isn't a thing, etc.

This might be enough to resolve; I'll ask around.

o1 seems pretty good. On the first try I got "cold call/chilly visit". On the second one I got "final touch/last stroke" and "silent partner/quiet companion": https://chatgpt.com/share/67080187-12b4-800c-b9a5-a8ce25077a33

Net Worth // Web Value

Net and Web are synonyms.

Worth and Value are synonyms.

"Net worth" refers to the total assets minus liabilities of a person or company.

"Web value" could refer to the significance or usefulness of a website, but the phrases are non-synonymous.

Night Stand // Evening Support

Night and Evening are synonyms.

Stand and Support are synonyms.

"Nightstand" is a small table beside a bed.

"Evening support" might imply assistance provided during the evening, differing in meaning.

Breakdown // Fracture Summary

Break and Fracture are synonyms.

Down and Summary can be related as "down" in "breakdown" meaning an analysis or summary.

"Breakdown" often means a mechanical failure or an analysis of components.

"Fracture summary" might be a medical synopsis, making the phrases non-synonymous.

Cold Call // Chilly Visit

Cold and Chilly are synonyms.

Call and Visit are synonyms.

"Cold call" is an unsolicited visit or telephone call.

"Chilly visit" could refer to a frosty reception or literal cold visit, differing in meaning.

Right Angle // Correct Corner

Right and Correct are synonyms.

Angle and Corner are synonyms.

"Right angle" is a 90-degree angle in geometry.

"Correct corner" might refer to the appropriate place or position, thus non-synonymous.

1. Mind Reader // Brain Scan

- Mind and brain are synonyms.

- Reader and scan are related to interpreting or processing information.

- "Mind Reader" refers to someone who can perceive thoughts, while "Brain Scan" is a medical imaging procedure.

2. Night Vision // Evening Sight

- Night and evening are synonyms.

- Vision and sight are synonyms.

- "Night Vision" refers to the ability to see in low light, often using technology, while "Evening Sight" could humorously imply the casual act of seeing in the evening.

3. Final Touch // Last Stroke

- Final and last are synonyms.

- Touch and stroke can both refer to a small addition or gesture.

- "Final Touch" means the finishing detail on a project, while "Last Stroke" could refer to the concluding action, perhaps humorously in a different context.

4. Breaking Point // Fracture Spot

- Breaking and fracture are synonyms.

- Point and spot are synonyms.

- "Breaking Point" is the moment of collapse under stress, whereas "Fracture Spot" might humorously suggest a specific location prone to breaking.

5. Silent Partner // Quiet Companion

- Silent and quiet are synonyms.

- Partner and companion are synonyms.

- "Silent Partner" is someone who invests in a business without taking an active role, while "Quiet Companion" could amusingly refer to a friend who doesn't talk much.

Here's my attempt at a prompt: https://pastebin.com/g0kDc8V3

I used this to generate the following examples with Claude:

heartbreak // cardiac arrest

Is "heartbreak" a set phrase? Yes, it means intense emotional distress. ✅

Is "cardiac arrest" a set phrase? Yes, it's a medical condition. ✅

Do "heartbreak" and "cardiac arrest" have different meanings? ✅

Are "heart" and "cardiac" synonyms? Yes, they both relate to the heart. ✅

Are "break" and "arrest" synonyms? Yes, they can both mean to stop suddenly. ✅

Is this an unparalleled misalignment? ✅

hard drive // difficult journey

Is "hard drive" a set phrase? Yes, it's a computer storage device. ✅

Is "difficult journey" a set phrase? It's a common phrase, though not as fixed. ❓

Do they have different meanings? A storage device vs. a challenging trip. ✅

Are "hard" and "difficult" synonyms? Yes, they both mean challenging. ✅

Are "drive" and "journey" synonyms? They can both refer to a trip. ✅

Is this an unparalleled misalignment? ✅

big shot // great round

Is "big shot" a set phrase? Yes, it means an important or influential person. ✅

Is "great round" a set phrase? Yes, it's used in golf to describe an excellent game. ✅

(I think this is ❓ at best)

Do they have different meanings? An influential person vs. an excellent golf score. ✅

Are "big" and "great" synonyms? Yes, they can both mean important or significant. ✅

Are "shot" and "round" synonyms? Yes, they can both refer to a turn in a game or sport. ✅

Surprisingly, Claude is the closest to being successful here.

It generated flow state//current condition and crash course//collision path (crash course is a stretch because, even though the educational meaning is the more common colloquial one, the recent etymology is similar to collision path).

So far, no other model has come close. And Claude can't do this *reliably* in best-of-5.

I still think on balance this benchmark is unlikely to be surpassed by EOY 2024.

I figure I'll give a benchmark as to what a human can do with 30 minutes and the stated restrictions (no Google is hard, I keep half reaching for the keyboard to check ideas!):

Pike's Peak // stare daggers

prime sieve // big screen

Harder than I expected! This is a neat test of an LLM's ability to think for a long time to produce small, difficult output.

@HastingsGreer I hate to be the one to point this out, but peak and stare aren't even close to being synonyms. Peek and stare would work, though, except Pike's Peek isn't a thing.

@Pykess I am aware of the spelling difference, but this sort of leap based on sound is represented on the canonical list, i.e., “Rental car // Truculent”.

I'm worried that people aren't reading the question carefully, or perhaps are misunderstanding it. The resolution isn't whether LLMs + humans can, with a bunch of effort, come up with okay answers that are "good enough"; the resolution is whether LLMs can reliably come up with human-level or superhuman answers.

Also unparalleled misalignments aren't supposed to be any combination of two two-word phrases, but idiomatic ones.

It might be helpful for you to generate a few of them by hand first, before assuming that ChatGPT gets the right nature.

(The hallucinations are very persuasive: multiple times I've seen it generate plausible phrases where, after I googled, I realized that they either aren't two-word phrases in the English language at all or are only very obscure ones.)

Solar system // Sunblock

Paper trail // Origami instructions

Sleeping bag // Dream catcher

Jazz hands // Musical fingers

Rock concert // Geology class

I also got this result,

Heart transplant // Love triangle

which, while it is not valid, led me to think of my own example:

Lost Heart // Love is Blind

All GPT-3.5 btw. Can post proof if needed

The problem is that it's really hard to say what's a synonym and what's not. Many of the examples in the linked list are barely in the same semantic space, so if those work, I think some of these might work as well.

Here's a few more that were generated by LLMs:

Star witness // Celestial body

Ice breaker // Frost bite

Time square // Period piece

Laser focus // Beam balance

And here's a few of mine inspired by less-successful LLM attempts:

Shotgun Wedding // Combined Arms

Spice Rack // Hot Tits

Light Switch // Small Change // Minor Revision

Thunderclap // Loud applause

Match Point // Date Spot

Note that getting there eventually doesn't count, the resolution criteria says:

"I will resolve this question positive if I or someone with proprietary access to an LLM can prompt an LLM, and without much difficulty, it can reliably (80%+ of the time) generate at least one unparalleled misalignment I haven't heard before, with cleverness similar to the human examples on Ricki's site, when asked to give 5 examples. Otherwise, this question resolves negative." The intention is that with a sufficiently good prompt, Best-of-5 performance is human-level.

@Linch I've tried several times, using a similar method as below, but I filtered the examples to those that I consider high quality and not very complex to decipher, excluding ones that use words that are not really synonyms but only sound like or are written like other words. The issue is that even in the canonical list most of the words are not strictly synonyms. I also tried GPT-4, and while I think it did the best, it still wasn't great.

@Shump "The issue is that even in the canonical list most of the words are not strictly synonyms."

Yeah I think you want things that are either strict synonyms or very clever, or both.

"Yes I definitely looked at more examples than just that, but not by too much" Makes sense. If you ever come up with a prompt or some algorithm for generating prompts that gets you human-level best-of-five performance (in the sense that when asked to generate 5 examples, the best example reliably is at or above peak human level), let me know.

I need to be able to reliably replicate this myself.

@Linch The wording was "I will resolve this question positive if I or someone with proprietary access to an LLM can prompt an LLM, and without much difficult..." The intention is that I'd rather be able to judge it myself but if it turns out Gemini Ultra++ or GPT-5 Alpha can do it well by EOY 2024 and they are not public access, I'll want someone with proprietary access to run the experiment for me.