Resolves YES if, by December 2025, it is publicly confirmed that in December 2024 there existed an AI system that, without access to current market probabilities, performed at or above the level of human market opinion on Manifold/Metaculus. Only serious questions with a non-negligible number of traders count.

There are two fuzzy requirements: (1) confirmation and (2) performance.

(1): Any official announcement from an AI lab, or a non-deleted Tweet from a prominent researcher in that lab, is enough for YES. Rumors on their own are not enough. Public action comparable to the 2023 safety letters in response to the rumored capability is enough for YES.

(2): Even if the above requirement is met, it is still likely that the AI won't be properly tested on serious Manifold questions. I reserve the right to resolve to a probability, based on my subjective credence in the following:

> Consider the AI forecasting metric of "How well do LLMs with search abilities, but without access to the human market opinion, approximate human market opinion on Manifold/Metaculus?". On that model, in December 2024, was this metric orders of magnitude less useful than it is on models at the start of 2024?

Update 2025-07-06 (PST) (AI summary of creator comment): The market will resolve to NO on December 31, 2025, unless the following condition for a YES resolution is met:

Someone makes a claim about having an AI model in December 2024 that predicted 2025 better than human forecasters, and the creator believes the claim.

@strutheo Resolves NO on 31 December 2025 unless someone claims to have had an AI model that predicted 2025 better than human forecasters did, and I believe it.

I am now not sure that I phrased this in a way that's sufficiently unambiguous. I see two options going forward:

1. Construct a well-defined criterion of the form "Compare the Brier score against the Brier score of the Manifold predictions, in the same way https://arxiv.org/abs/2402.18563 did in section 6.1 (so no selective prediction, and the prediction dates are predetermined, with an average question window of 2-3 months)". Among all existing evaluations, this is closest to what I meant when I created this market, although it does have the issue of implicitly leaking that the event will resolve for some questions.

2. Resolve N/A.
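
For reference, the comparison in option 1 could look roughly like the sketch below. It assumes binary questions that have already resolved and one forecast per question from both the AI and the market, and it does not reproduce the exact protocol of the cited paper. The function name `brier_score` and all numbers are hypothetical, made up for illustration only. A lower Brier score is better.

```python
from statistics import mean


def brier_score(probs, outcomes):
    """Mean squared difference between forecast probabilities and 0/1 outcomes."""
    if not probs or len(probs) != len(outcomes):
        raise ValueError("need equally sized, non-empty lists")
    return mean((p - o) ** 2 for p, o in zip(probs, outcomes))


# Hypothetical data: four resolved binary questions (1 = YES, 0 = NO).
outcomes = [1, 0, 1, 0]
ai_probs = [0.8, 0.3, 0.6, 0.1]      # made-up AI forecasts, no market access
market_probs = [0.7, 0.2, 0.9, 0.5]  # made-up market probabilities at the same dates

ai_brier = brier_score(ai_probs, outcomes)
market_brier = brier_score(market_probs, outcomes)

# The AI counts as "at the level of the market or better" here if its score is not higher.
ai_matches_or_beats_market = ai_brier <= market_brier
print(f"AI: {ai_brier:.4f}, market: {market_brier:.4f}, AI at least as good: {ai_matches_or_beats_market}")
```

Scoring every question in a predetermined set, rather than letting the AI pick which ones to answer, is what "no selective prediction" amounts to in this sketch.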

@dp I'd rather you just leave it somewhat subjective than resolve N/A. Not every market can or should be perfectly objective and unambiguous. I think this is a great idea for a market and the essence of your question seems pretty clear.

“without access to current market probabilities”

Do you mean this only in the strictest sense, i.e. it knowing the latest probability of the market it's trading on? If it has indirect information (e.g. because news reflects some synthesis of a lot of information, including prediction markets) or information about other similar markets (e.g. different time periods of a similar event, or other somewhat correlated events), how would you treat that?

@Tyler31 I meant without explicit access to market probabilities, similar to what "Approaching Human-Level Forecasting with Language Models" did.

@JacobPfau FWIW GPT-4 is at 75% that you intended to allow for selective prediction on the part of the AI https://chat.openai.com/share/0df77008-9599-43e6-8181-1c446889f3d4

@JacobPfau I'm not sure how to answer your question. The main thing I care about is forecasting-as-proxy-metric-for-general-world-modeling. In the world where this resolves NO, I'm still using "match current market prob" on models that exist in Dec 2024. In worlds where this resolves YES, I have to either do a temporal split on the AI's available information and evaluate on questions that have already resolved, or use more complex methods.

@dp I think he’s asking if the AI is allowed to choose a reasonably sized subset of questions to predict on rather than all of them, which is similar to what humans do.

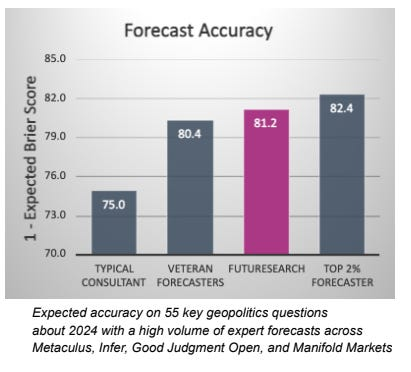

What are your thoughts on @FUTURESEARCH? It was reviewed by Scott Alexander in his Mantic Monday.

They use the same AIs with internet access, but they prompt them very well to get them to forecast.

Here is their own analysis, which compares the bot's current predictions against market prices.