On Oct 14 2023, Yann LeCun (Chief AI Scientist at Meta) stated: "Open source AI models will soon become unbeatable. Period."

Resolves YES if, at the end of 2025, it's decisively clear (in the judgment of Eliezer Yudkowsky) that open-source LLMs (or their successors in the role of widely used AGI tech) are more powerful or more cost-efficient than their closed-source alternatives. That is, if either all the leaderboards are full of open-source LLMs with successors to GPT-4 or Claude being far behind, or if most of the business spending for inference seems to be on running AI models built on open-source foundation models, this resolves YES.

If it's hard to tell or if that seems wrong, resolves NO. "Unbeatable" seems like it shouldn't be subtle.

Update 2025-07-15 (PST) (AI summary of creator comment): In response to a question about the definition of "Open Source", the creator has clarified they will use a broad, non-legalistic interpretation. Models with proprietary but widely available licenses (e.g., Llama) will likely be considered "Open Source" for the purpose of resolution.


1% is kinda wild when the description says that being “more cost-efficient than their closed-source alternatives” would resolve YES.

Is Gemini 3 expected to be that good?

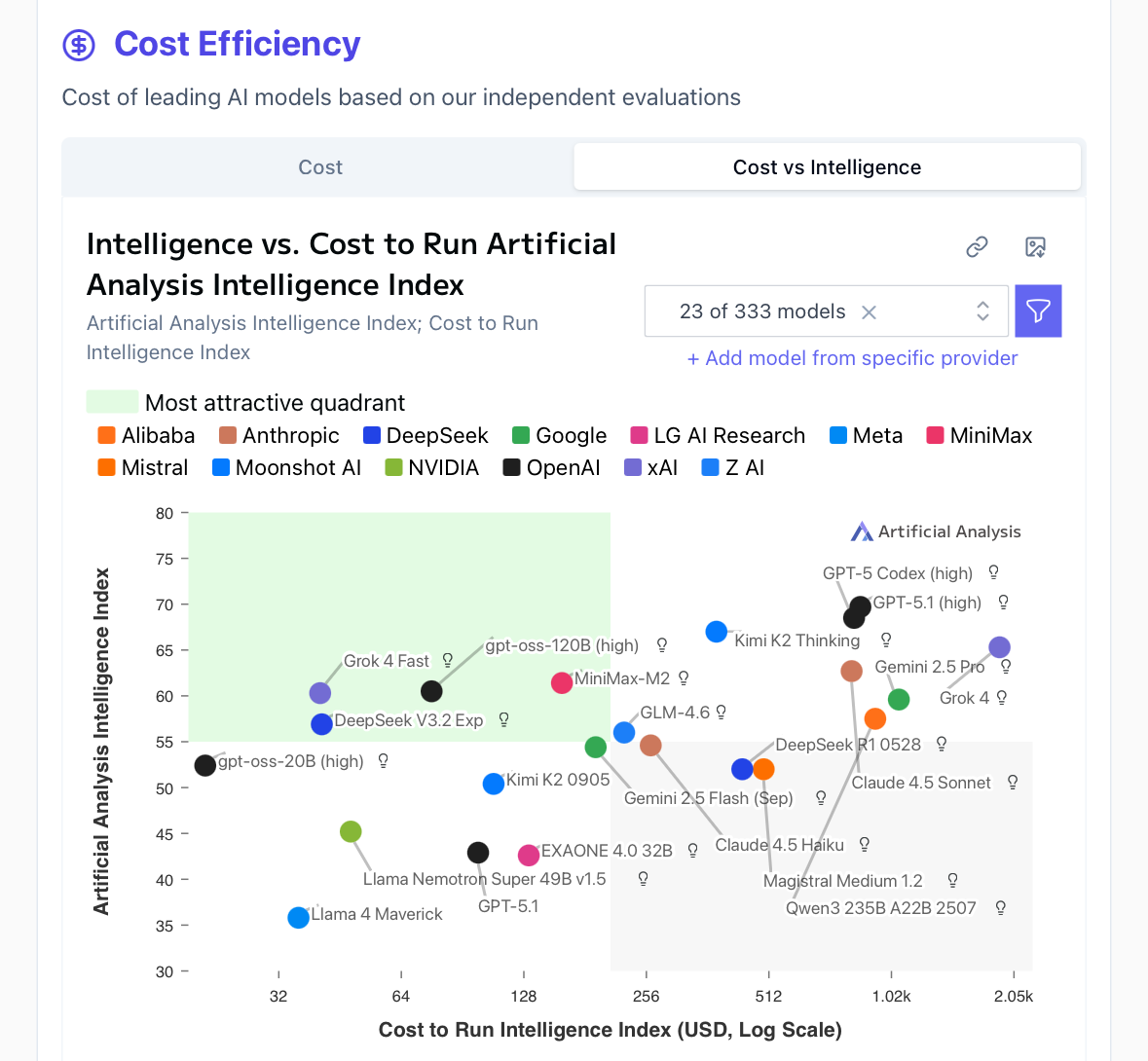

Kimi K2 Thinking matches or exceeds the best closed-source models at ~25% of the cost.

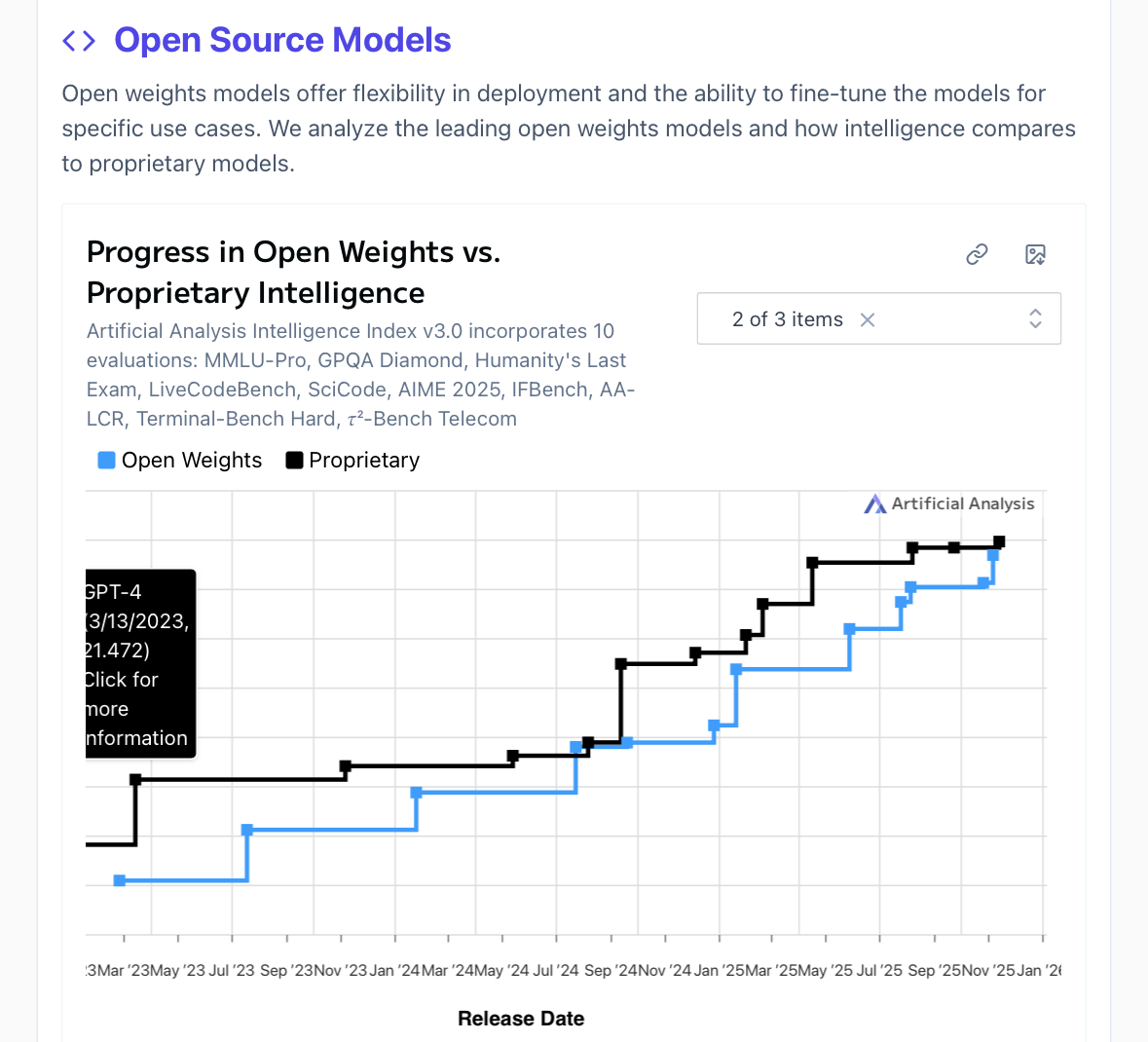

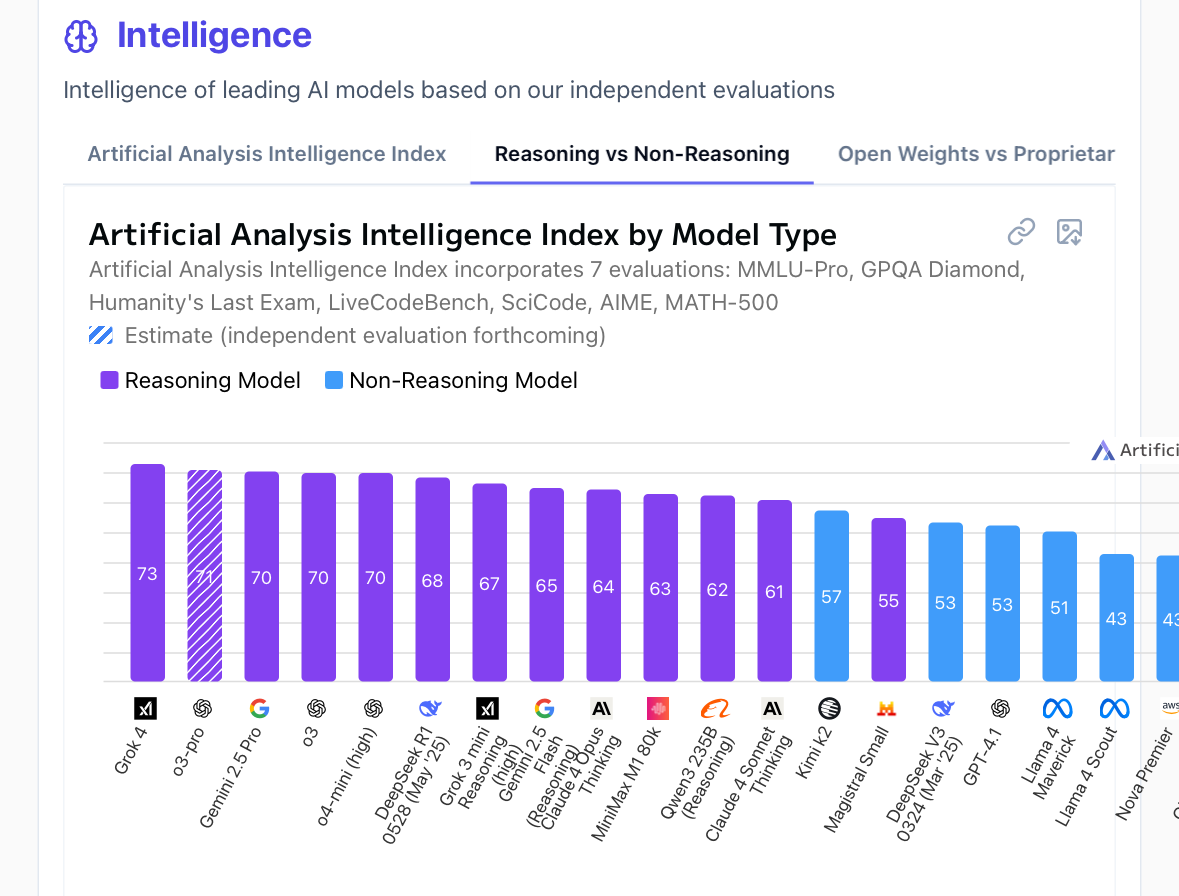

The Pareto frontier (cost vs. intelligence) is mostly open-source models, apart from Grok 4 Fast (a cheap closed model) and GPT-5(.1) high (SoTA intelligence, but super slow and relatively expensive).
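To make the "Pareto frontier" idea concrete: a model is on the frontier if no other model is both at least as cheap and at least as capable, with at least one of the two strictly better. The sketch below computes that frontier from (cost, score) pairs. The model names and numbers are hypothetical placeholders, not Artificial Analysis data, and "cost" and "score" stand in for whatever cost and intelligence metrics a leaderboard uses.

```python
from typing import NamedTuple


class Model(NamedTuple):
    name: str
    cost: float   # hypothetical cost metric (lower is better)
    score: float  # hypothetical intelligence index (higher is better)


def pareto_frontier(models: list[Model]) -> list[Model]:
    """Return the models not dominated by any other model.

    A dominates B if A.cost <= B.cost and A.score >= B.score, with at
    least one strictly better. Exact (cost, score) duplicates are kept once.
    """
    # Cheapest first; among equal costs, highest score first.
    ordered = sorted(models, key=lambda m: (m.cost, -m.score))
    frontier: list[Model] = []
    best_score = float("-inf")
    for m in ordered:
        # Everything seen so far is at least as cheap, so m is only
        # undominated if it beats the best score seen so far.
        if m.score > best_score:
            frontier.append(m)
            best_score = m.score
    return frontier


# Hypothetical example data, for illustration only.
models = [
    Model("closed-A", cost=900.0, score=70.0),
    Model("open-B", cost=200.0, score=67.0),
    Model("open-C", cost=50.0, score=58.0),
    Model("closed-D", cost=300.0, score=60.0),
]
print([m.name for m in pareto_frontier(models)])  # ['open-C', 'open-B', 'closed-A']
```

Here "closed-D" drops off the frontier because "open-B" is both cheaper and scores higher, while "closed-A" stays on it as the highest-scoring model despite its cost.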

Re: most of the business spending for inference seems to be on running AI models built on open-source foundation models

You could argue that American businesses are Sinophobic, either for security reasons or out of fear of government sanctions (with the government subsidizing, or being influenced by, American companies such as OAI, Anthropic, Google, and xAI via Project Stargate, data centers, corruption, etc.).

LLM inference is cheap enough that people are willing to pay a 2x markup for more reliable inference when the ROI may be 10-100x. It's akin to the difference between tap water and bottled water: outsourcing to a trusted third party for convenience and reliability vs. trusting your own pipes (GPUs).

—

Yann LeCun’s statement from Oct 2023 is largely correct, considering that Llama 3.1 405B (likely what he was referring to in that quote) matched or exceeded GPT-4o (May 2024).

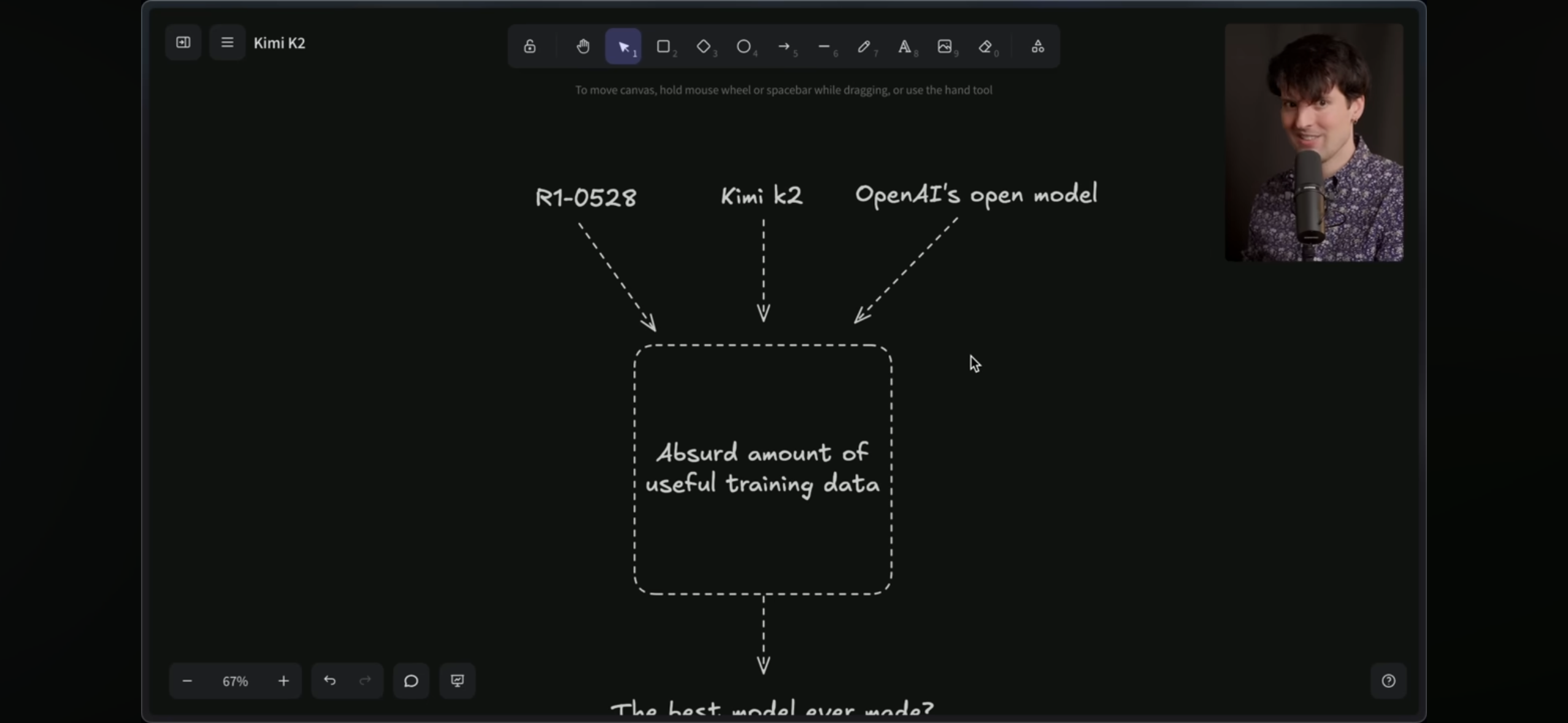

DeepSeek R1 0528 (671B MoE, near-SoTA reasoning) + Kimi K2 (1T MoE, SoTA non-reasoning, on par with Claude at tool calling) + OpenAI's open-source model (???, shouldn't disappoint)

= Best model ever + distillations?

per Theo (https://m.youtube.com/watch?v=xLFkqYOUN24&t=2490s )

benchmarks via Artificial Analysis

@Balasar I don't think it's true that, as of right now, "either all the leaderboards are full of open-source LLMs with successors to GPT-4 or Claude being far behind, or if most of the business spending for inference seems to be on running AI models built on open-source foundation models"

@SemioticRivalry that's pretty fair, and I suppose I should have read closer. The question is designed to resolve to NO even if the spirit of the question is met.

@Balasar R1 is #6 on LLM Arena right now. I like R1 and use it pretty often, and it's very good, but it's not "indisputably superior to all other models".

@ProjectVictory That comment was a month ago; 3 of the higher-ranked models were released since then, and the others were substantially more expensive to produce.

@Balasar It's not asking about the Pareto front between capability and cost; it's asking about overall capability. I don't think it's reasonable to say that R1 is very close to beating the best models right now, though I don't think it's impossible that DeepSeek produces and open-sources such a model.

@Balasar Ah yeah, sorry, my reading comprehension is often disappointing. It seems like the question should resolve YES if the Pareto front of cost and capability leads open-source models to consume the majority of inference spending, or if the pure capabilities of open-source models lead them to dominate leaderboards, since leaderboards have no measure of cost efficiency.

@mods in the Order Book graph ("Cumulative shares vs probability"), the green graph which shows the cumulative YES limit orders seems to be off. There are 2'383 YES limit orders in total, but the graph shows 140k?

Also, the user Mira does not exist anymore, but their limit orders are still listed.

@JonasSourlier The graph shows the total number of potential shares to be bought with the limit orders, while the order book shows the amount of potential mana required to buy the shares. For example, Mira’s 1001 mana order at 1% buys 100100 shares, and is listed as 1001 in one place and 100100 in the other.

I’m pretty sure it’s normal that the limit order of a deleted account is still there.

Let me know if you have more questions.
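The mana/share conversion can be written out as a short calculation. This is a sketch under stated assumptions: each share is assumed to pay out 1 mana if its outcome wins, so a YES share at limit probability p costs p mana and a NO share costs 1 - p, and fees and partial fills are ignored. The helper name `max_shares` is hypothetical, not part of any Manifold API. `Fraction` keeps the arithmetic exact (plain floats would give something like 100099.99999999999 for 1001 / 0.01).

```python
from fractions import Fraction


def max_shares(mana: Fraction, limit_prob: Fraction, outcome: str = "YES") -> Fraction:
    """Shares a fully filled limit order can buy (hypothetical helper, fees ignored).

    Assumes each share pays 1 mana if its outcome wins, so a YES share
    costs limit_prob and a NO share costs 1 - limit_prob.
    """
    if not 0 < limit_prob < 1:
        raise ValueError("limit_prob must be strictly between 0 and 1")
    if outcome not in ("YES", "NO"):
        raise ValueError("outcome must be 'YES' or 'NO'")
    price = limit_prob if outcome == "YES" else 1 - limit_prob
    return mana / price


one_percent = Fraction(1, 100)
print(max_shares(Fraction(1001), one_percent))  # 100100 (Mira's 1001-mana order at 1%)
print(max_shares(Fraction(1), one_percent))     # 100 (a 1-mana order at 1%)
```

Summing `max_shares` over every YES order gives the kind of share total the cumulative chart plots, which is why it can be far larger than the mana total listed in the order book.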

> There are 2'383 YES limit orders in total, but the graph shows 140k?

Note that the limit orders above are denominated in mana ("M"), while the chart below is denominated in shares: a 1M limit order at 1% buys 100 shares.

> Also, the user Mira does not exist anymore, but their limit orders are still listed.

FWIW the mods don't have direct input on how the site works (for feedback you can use the Discord)

> That is, if either all the leaderboards are full of open-source LLMs with successors to GPT-4 or Claude being far behind

This seems very unlikely!

> or if most of the business spending for inference seems to be on running AI models built on open-source foundation models

This seems less unlikely, but still unlikely. Google, OpenAI, and Anthropic are still gonna be competing!