This cost should not include the salaries of researchers that worked on developing it, but rather only the cost of electricity + hardware. I will resolve this as best I can, based on potentially given estimates and other pieces of evidence.

🏅 Top traders

| # | Trader | Total profit |
|---|---|---|
| 1 | | Ṁ2,265 |
| 2 | | Ṁ1,094 |
| 3 | | Ṁ845 |
| 4 | | Ṁ250 |
| 5 | | Ṁ104 |


@Guilhermesampaiodeoliveir EpochAI produced an estimate, which is sufficient to trigger the market’s resolution criterion based on the lack of better available evidence.

I think it's fine to resolve it No, Bionic, if you think that is correct (if forced to choose, I think No seems the most correct to me too, if I didn't see the market % and just the title, criteria, and comments).

This market has clearly failed, and it's unfortunate people made incorrect assumptions/interpretations and then more ppl probably saw the percentage so high, which then reinforced them in making the same incorrect assumptions. So as the creator, if you'd rather N/A, I can do that for you too. But up to you.

@BionicD0LPH1N I agree with David: you should resolve to the best of your ability. If people got it wrong or misunderstood things, then so be it. That sucks, but misresolving or N/A in response sucks more. My read of things just now (I have not been following this, just read it now) is that No looks more likely to be correct than Yes.

Markets that close at 95% should occasionally resolve No, if everything is working correctly. (About 5% of the time, in fact!)

Here's an example where I resolved a (much smaller) market opposite the price:

/EvanDaniel/will-any-manifold-ui-changes-receiv ; I think that was the correct decision.

@strutheo Sounds good, thanks. I'm supposed to resolve today but I'll wait on mods in case they think NA'ing makes more sense.

@BionicD0LPH1N whatever they decide I'll support sorry you have to deal with it, I know it sucks as a creator lol

I believe (my opinion) that if there is no concrete data source (e.g. Statista) that can be followed, then that severely limits the ability to get exact data from private companies. For these kinds of markets I am in favor of N/A. While it is not ideal, since the market has been open so long, the fact that this data is basically "hearsay" should alone qualify it to be N/A'd.

There are ways to write a question to allow "hearsay" but would have needed to be in the description of resolution criteria long ago.

Going by "old standards" I would have originally said to resolve to the "spirit of the market" which would be YES or the current % it sits at once closed.

> I will resolve this as best I can, based on potentially given estimates and other pieces of evidence.

I read this as explicitly saying he might resolve using hearsay. Did you have a different read?

@Bayesian I can see that being some way to include hearsay.

I personally read it more as using estimates or evidence from reporting sites like statista/demandsage/answeriq, not tweets/news reporting.

However, I still believe N/A would be acceptable, but if there was a MOD vote, I would vote to resolve YES.

@BionicD0LPH1N do you want the resolution to be up to you or mods? and how would you resolve it if the former?

I didn't know I had a choice. It's been pretty astonishing reading the mod chat. The arguments didn't amount to much more than "many people bet without reading the market description, so we should resolve NA to protect them," plus questioning EpochAI's reliability.

So I guess I'd like to resolve, and I'd resolve NO. Waiting to confirm I can do so.

it was more than that - many people read the description and/or comments and kept betting yes, and the epoch note wasnt added to the title and/or description

(there is a reason some mods said they'd have NA or YESed this)

@mods pls clarify . because if so ill just elevate it to mods once he resolves no ? then what is supposed to happen?

> I will resolve this as best I can, based on potentially given estimates and other pieces of evidence.

This was in the market description from day one. I did do this. I agreed with the suggestion to wait on EpochAI's updated analysis, but that doesn't mean that I would have disregarded any official statements or generally better evidence about compute + hardware costs; had better evidence than Epoch's come out in the meantime, I would have resolved accordingly.

i actually dont think you did anything wrong here. but i do still think the market failed somewhere even if outside your control, and that the NO decision would still be a bad one. (and it seems at least some mods would agree with me on that. )

EDIT if there is one mistake its not adding clarifying details from the comments to the description/title but i do that mistake too. but i also NA in those cases a lot of the time

The market resolution has remained the same from the creation of the market. The training cost in question should only include the cost of electricity and hardware, and excludes costs like researcher salaries.

The best available evidence I encountered so far:

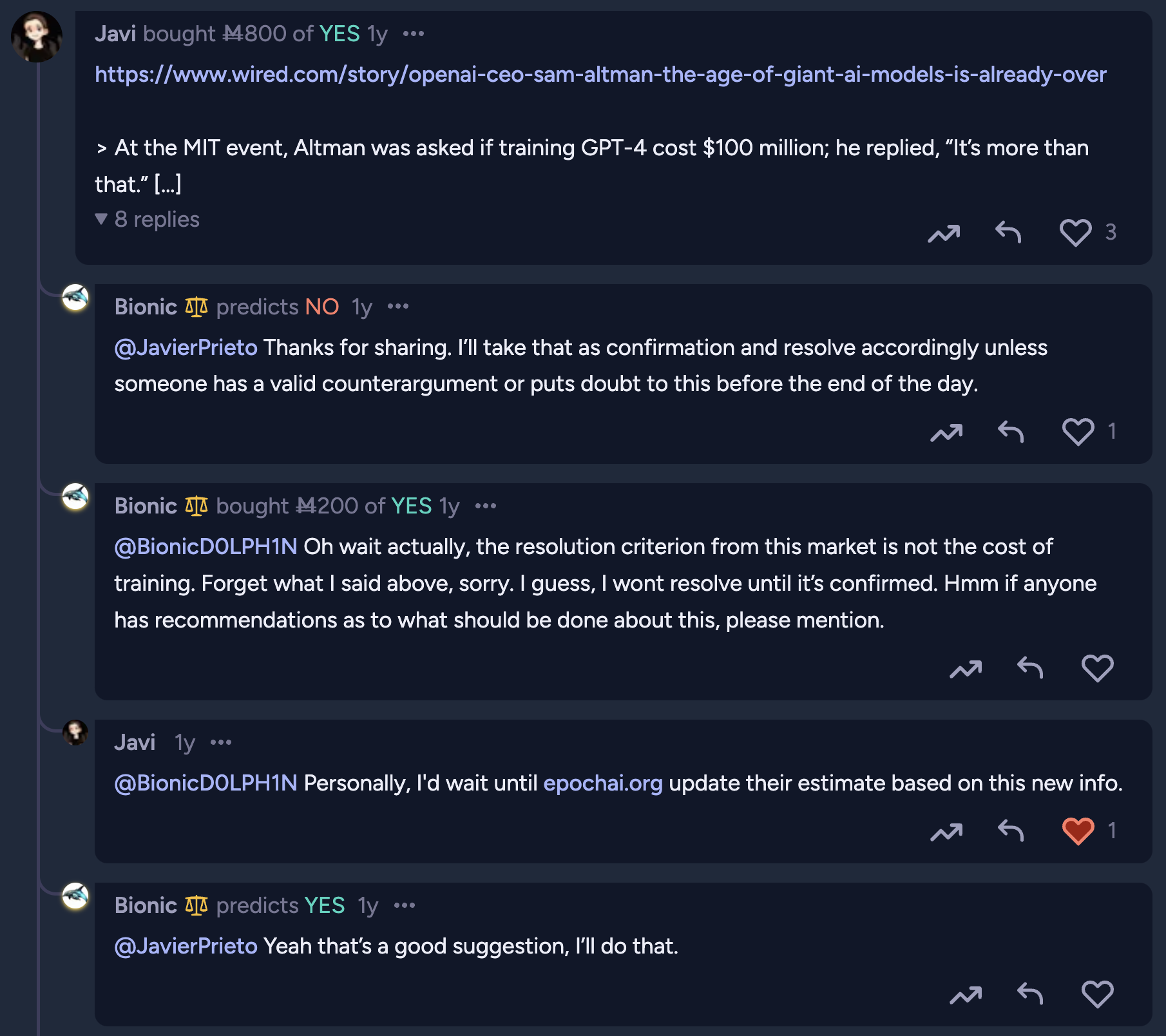

From Wired's "OpenAI’s CEO Says the Age of Giant AI Models Is Already Over":

> At the MIT event, Altman was asked if training GPT-4 cost $100 million; he replied, “It’s more than that.”

My issue with this quote is that it seems pretty likely to me that Altman did not mean to discount research salaries in this estimate. It's hard to know what precisely he meant, but it's hardly conclusive evidence either way regarding the specific market resolution. Here is the discussion that, 1 year ago, followed @JavierPrieto's sharing of the Altman quote.

I made it clear that I agreed that I should wait until epochai.org's estimate.

The Epoch AI estimate that came sometime later, available here, gives an estimate of $40,600,000. In their paper, they claim the following:

> By considering AI accelerator chips, other server hardware, networking hardware, and energy separately, this approach can provide more accurate training costs. We find that the most expensive publicly-announced training runs to date are OpenAI’s GPT-4 at $40M and Google’s Gemini Ultra at $30M.

Ben Cottier, Robi Rahman, Loredana Fattorini, Nestor Maslej, and David Owen. ‘The rising costs of training frontier AI models’. ArXiv [cs.CY], 2024. arXiv. https://arxiv.org/abs/2405.21015.

Beyond the above quote, it's also pretty clear from EpochAI's methodology page that their compute cost estimates include hardware costs as well as energy costs. Traders are welcome to read their methodology for more detail.

My current best estimate is that, by the stated market resolution criterion and the currently-publicly-available evidence, this question would resolve NO, per Epoch AI's estimate. As the market closed at 95%, I was tempted to let mods resolve NA, but I simply don't understand how this could be justified given the current state of the evidence. I welcome YES holders to show this is mistaken. If no such argument is given in the next 3 days, the market resolves NO. If I am made to sufficiently doubt this resolution, I am open to resolving NA. If I am convinced of a YES resolution, I will resolve this market YES.

@BionicD0LPH1N the confusion is the understanding of the question

Clearly people did not get your wording of this or wed obviously have corrected this

So idk if anyone can prove you wrong. But I would say that it was clearly an unclear market. And that's a reason to na.

@strutheo It's clear that people misunderstood whether sama's quote was a reliable indicator of the correct resolution. Also clearly, it wasn't - the description that hasn't changed for a year was clear that salaries didn't count, but sama wasn't clear about whether he did count them - and anyone who traded on it being clear made a mistake. I agree it's a weird incentive for market creators to have, but I am certain that in this case bionic didn't know this when making his decision

@strutheo "But I would say that it was clearly an unclear market." I'm very confused about your repeatedly claiming this. Back on Oct 10, 2022, at 4:17:33 PM, I put as the sole market description:

> This cost should not include the salaries of researchers that worked on developing it, but rather only the cost of electricity + hardware. I will resolve this as best I can, based on potentially given estimates and other pieces of evidence.

This doesn't strike me as particularly difficult to understand. What specific wording was ambiguous? If the claim is that the market title alone is ambiguous if not coupled with the market description, then I would respond that this is the point of market descriptions.

I was not aware of any incentives, as @Bayesian can confirm. I basically stopped using Manifold some time ago, and didn't follow rule changes or anything like that.

That seems reasonable to me personally, although I'm not confident at all

strutheo-

> Clearly people did not get your wording of this or wed obviously have corrected this

The description was clear. The title should've been clearer, sure, and I'd change it now if the market were open, but Manifold's been consistent that descriptions take priority unless it's extremely different. And if you make a mistake and make bad bets, then you should lose money on average; that's the intent of the market mechanism.

> creator incentive with returning shares ends up incentivising to resolve this no instead of na here

That may be a problem, but it's a problem with the entire subsidy concept in general, not something Bionic's doing specifically, so I don't think it'll affect any mod decisions on this market.

@jacksonpolack maybe I'm still not understanding. But if it was just epoch io why was nobody going off of that ?

@strutheo did everyone just misread title and not read description ? Everyone ? Nobody corrected it ?

@strutheo People make bad bets sometimes! Or maybe they're aware of things that we're not, which is why we're waiting for more responses.

@jacksonpolack ok wait so assuming there is no new info, then the result is

everyone misread the title

nobody read the description OR misunderstood the description

or the people who did so, chose not to move the market in their favor?

idk i just dont see how its not a NA, it seems to follow SOME misunderstanding was had here, on either trader or creator end. and since it was not corrected sooner this happened. but if it was caught sooner, it would have been a NA right?

@jacksonpolack also yes - didnt mean to imply he was going to try to abuse the resolution system here, but its a weird situation that will come up probably, and should probably change

@strutheo Sometimes all 50 people, or 14 of the most recent 15 traders, are just going to be extremely wrong. Sometimes in real financial markets involving billions of dollars the market is just extremely wrong. I'm not confident that's the case here, but it's definitely possible. Even if everyone misunderstood the question, we can only N/A if the question was actually bad enough to be misunderstood, and by current standards it wasn't.

We could treat titles as authoritative and judge the title/description discrepancy to be enough to N/A over, but plenty of markets have (imo misleadingly) vague titles and very specific descriptions and we've let them slide. Manifold precedent is that the description takes priority, and titles can be very ambiguous or even suggestive of a different meaning, like the above one is. But even then we'd need a specific reason why a reasonable person would be misled, as opposed to simply observing that people were misled. Otherwise, we'd be rewarding people for making bad bets, and incentivizing people to push markets like this to 95% to get them N/A'd, which even on the margin is not good.