If I become more than 95% confident either way, I will resolve this YES or NO.

This is for the base model in particular.


@firstuserhere I can't tell whether this is the cost of the cluster itself or an estimate of the cost of the cluster time.

Matthew Barnett says GPT-3.5 finished training in early 2022.

https://twitter.com/MatthewJBar/status/1605509829555953667

We know that GPT-4 finished training in August 2022.

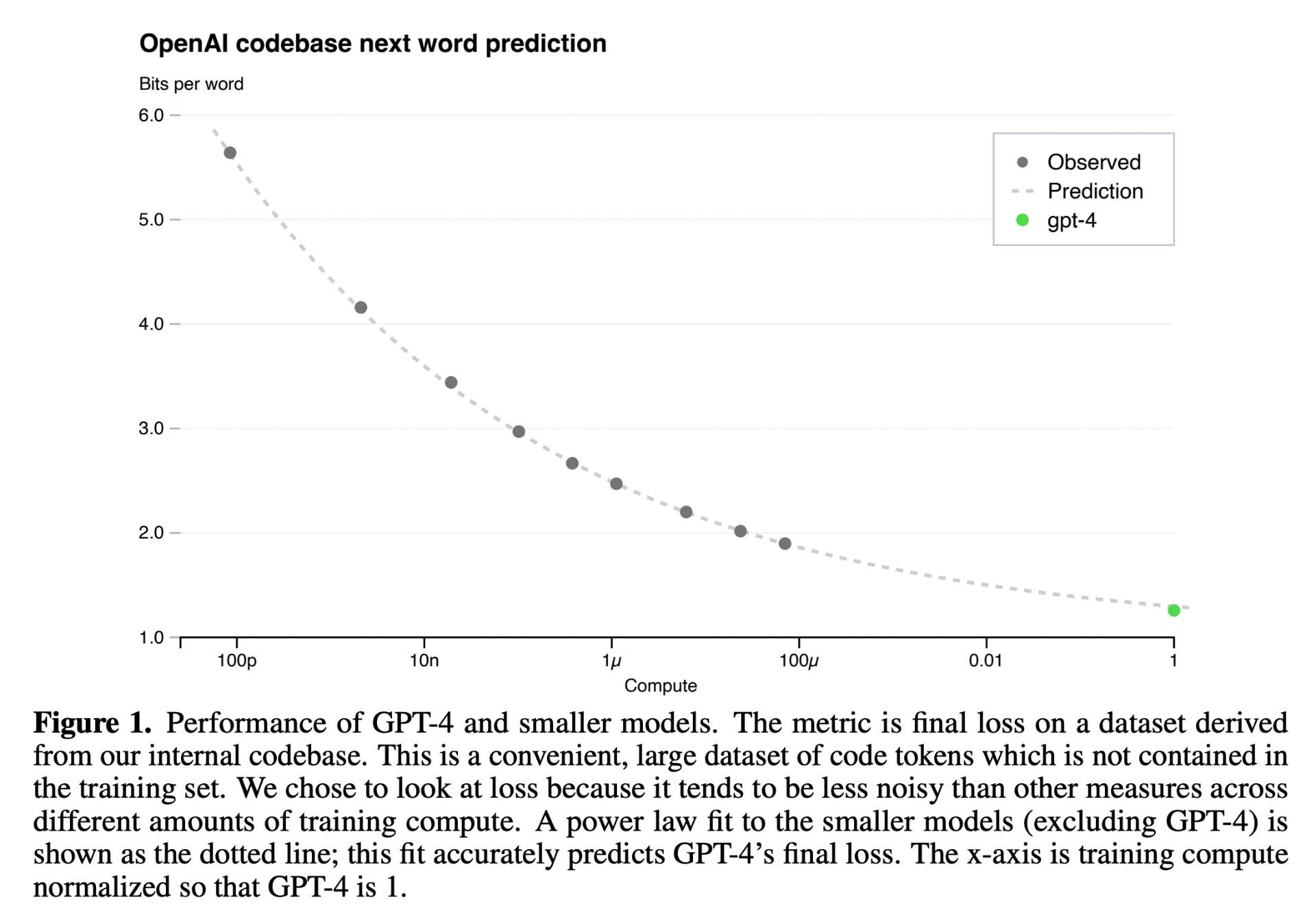

https://arxiv.org/pdf/2303.08774.pdf

My best guess is GPT-3 took 1 to 3 months to train: https://www.getguesstimate.com/models/22425
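The guesstimate model isn't reproduced here. As a rough cross-check, here is a minimal sketch that assumes the common rule of thumb that training compute is about 6·N·D FLOPs (N = parameters, D = training tokens). It uses GPT-3's published size (175B parameters, ~300B tokens). The hardware inputs are assumptions, not reported figures: V100 GPUs at ~125 TFLOP/s fp16 peak, plus guessed cluster sizes and utilization levels.

```python
# Rough training-time estimate using the C ≈ 6·N·D approximation.
# Hardware numbers below are ASSUMPTIONS for illustration, not reported figures.

SECONDS_PER_DAY = 86_400


def training_flops(n_params: float, n_tokens: float) -> float:
    """Approximate total training compute in FLOPs (forward + backward ≈ 6·N·D)."""
    return 6.0 * n_params * n_tokens


def training_days(n_params: float, n_tokens: float, n_gpus: int,
                  peak_flops_per_gpu: float, utilization: float) -> float:
    """Wall-clock days given cluster size, per-GPU peak FLOP/s and achieved utilization."""
    effective_flops_per_s = n_gpus * peak_flops_per_gpu * utilization
    return training_flops(n_params, n_tokens) / effective_flops_per_s / SECONDS_PER_DAY


if __name__ == "__main__":
    # GPT-3 as published: 175B parameters, ~300B training tokens.
    N, D = 175e9, 300e9
    # ASSUMED: V100 fp16 tensor-core peak; cluster sizes and utilization are guesses.
    V100_PEAK = 125e12
    for n_gpus in (1_024, 4_096, 10_000):
        for util in (0.2, 0.4):
            days = training_days(N, D, n_gpus, V100_PEAK, util)
            print(f"{n_gpus:>6} GPUs @ {util:.0%} utilization: {days:6.1f} days")
```

The estimate is driven almost entirely by the assumed GPU count and utilization. Any month-scale answer depends on those guesses rather than on the parameter and token counts.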

@NoaNabeshima Also I've heard it said that GPT-3.5 was a test run for the hardware used to train GPT-4, suggesting that GPT-4 came after GPT-3.5 was done.

It's unclear whether GPT-3.5 is literally just the largest model in the GPT-4 scaling-law experiment.