When will an AI compete well enough on the International Olympiad in Informatics (IOI) to earn the equivalent of a gold medal (top ~30 human performance)? Resolves YES if it happens before Jan 1 2025, otherwise NO.

This is analogous to the IMO Grand Challenge (https://imo-grand-challenge.github.io/), but for contest programming instead of math.

Rules:

The AI has only as much time as a human competitor, but there are no other limits on the computational resources it may use during that time.

The AI must be evaluated under conditions substantially equivalent to human contestants, e.g. the same time limits and submission judging rules. The AI cannot query the Internet.

The AI must not have access to the problems before being evaluated on them, e.g. the problems cannot be included in the training set. It should also be reasonably verifiable, e.g. it should not use any data which was uploaded after the latest competition.

The contest must be the most current IOI contest at the time the feat is completed (previous years do not qualify).

This will resolve using the same resolution criteria as https://www.metaculus.com/questions/12467/ai-wins-ioi-gold-medal/, i.e. it resolves YES if the Metaculus question resolves to a date prior to the deadline.


Background:

In Feb 2022, DeepMind published a pre-print stating that their AlphaCode AI is as good as a median human competitor in competitive programming: https://deepmind.com/blog/article/Competitive-programming-with-AlphaCode. When will an AI system perform as well as the top humans?

The International Olympiad in Informatics (IOI) is an annual competitive programming contest for high school students, and is one of the best-known and most prestigious competitive programming contests.

Gold medals in the IOI are awarded to approximately the top 1/12 (about 8%) of contestants. Each country can send its top 4 contestants to the IOI, so a gold medal means placing in the top 8% of an already selected pool of contestants.

Scoring is based on solving problems correctly. There are two competition days, and on each day there are 5 hours to solve three problems. Scoring is not based on how fast you submit solutions. Contestants can submit up to 50 solution attempts for each problem and see limited feedback (such as "correct answer" or "time limit exceeded") for each submission.

Update: I changed the resolution criteria. The AI no longer needs to be published before the IOI; instead, the requirement is that it cannot use any training data from the IOI. I'll compensate you if you traded before this change and wish to reverse your trade.

🏅 Top traders

| # | Trader | Total profit |
|---|---|---|
| 1 | | Ṁ6,342 |
| 2 | | Ṁ2,961 |
| 3 | | Ṁ2,650 |
| 4 | | Ṁ1,556 |
| 5 | | Ṁ1,525 |


Does it have to compete in the actual IOI 2024? Or can it be a separate experiment in which the system is tested on analogous questions?

I find the resolution criteria unclear on this point. To me, the question implies that it would have to be the actual IOI 2024, which took place in September? But maybe your intent is different here, which would also make sense.

@JonathanMannhart From the underlying Metaculus question's resolution criteria, it seems like we can just give it the 2024 IOI, especially if OAI confirms that the system was not trained on it:

This question will resolve as the date an AI system competes well enough on an IOI test to earn the equivalent of a gold medal. The IOI test must be the most current IOI test at the time the feat is completed (previous years do not qualify).

AI must be evaluated under the following conditions, similarly to human competitors:

The AI has access to problem statements, test data and supplementary materials.

The AI can send up to 50 solutions and see limited feedback (such as "correct answer" or "time limit exceeded") for each submission.

The AI has 5 hours per set of 3 problems to compute, but there are no other limitations on computational resources.

The AI cannot have access to the internet during the test.

The AI must generally follow the latest official competition rules (see rules for IOI 2022) unless it is obviously unsuitable for computer programs (e.g. "each participant required to give a handshake to a member of jury before any submissions"). If it is ambiguous whether a rule should apply to an AI participant, Metaculus will determine whether the rule should be excluded.

The AI must not have access to the problems before being evaluated on them, e.g. the problems cannot be included in the training set. It should also be reasonably verifiable, e.g. it should not use any data which was uploaded after the latest competition.

If this does not occur before January 1, 2080, this question will resolve as > December 31, 2079

@AdamK you are throwing away $1,000 with your bet.

“it resolves YES if the Metaculus question resolves to a date prior to the deadline.”

The deadline is Jan 1 2025.

@JonathanMannhart It can happen now. If a new AI in December can achieve a gold medal score on the 2024 IOI, following the rest of the rules, that counts.

(To be clear: these comments are not a clarification. This is just explaining the rules in the market. In case I made a mistake, the rules of the market stand.)

@Hazel I don't understand what you are trying to point out in your comments, I think you've either misunderstood AdamK's comment or the market rules. Yes, this market closes Jan 1 2025. Yes, the 2024 IOI can still count. Both are true.

@florist unlikely to happen but who knows. The capabilities of AI have been drastically removed in recent months and funding is stalling if not collapsing

https://x.com/aisafetymemes/status/1834957396126445870?s=46

If gold was achieved by a program using this method, would it be a valid LLM gold according to this market's criteria? I'm assuming not, just making sure

@Lorec IOI has a limit of 50 submissions per problem

The AI must be evaluated under conditions substantially equivalent to human contestants, e.g. the same time limits and submission judging rules.

Contestants can submit up to 50 solution attempts for each problem and see limited feedback (such as "correct answer" or "time limit exceeded") for each submission.

@FlorisvanDoorn Oh, you are correct. That makes me update down, although I expect there to be spillover effects.

Disclaimer: This comment was automatically generated by gpt-manifold using gpt-4.

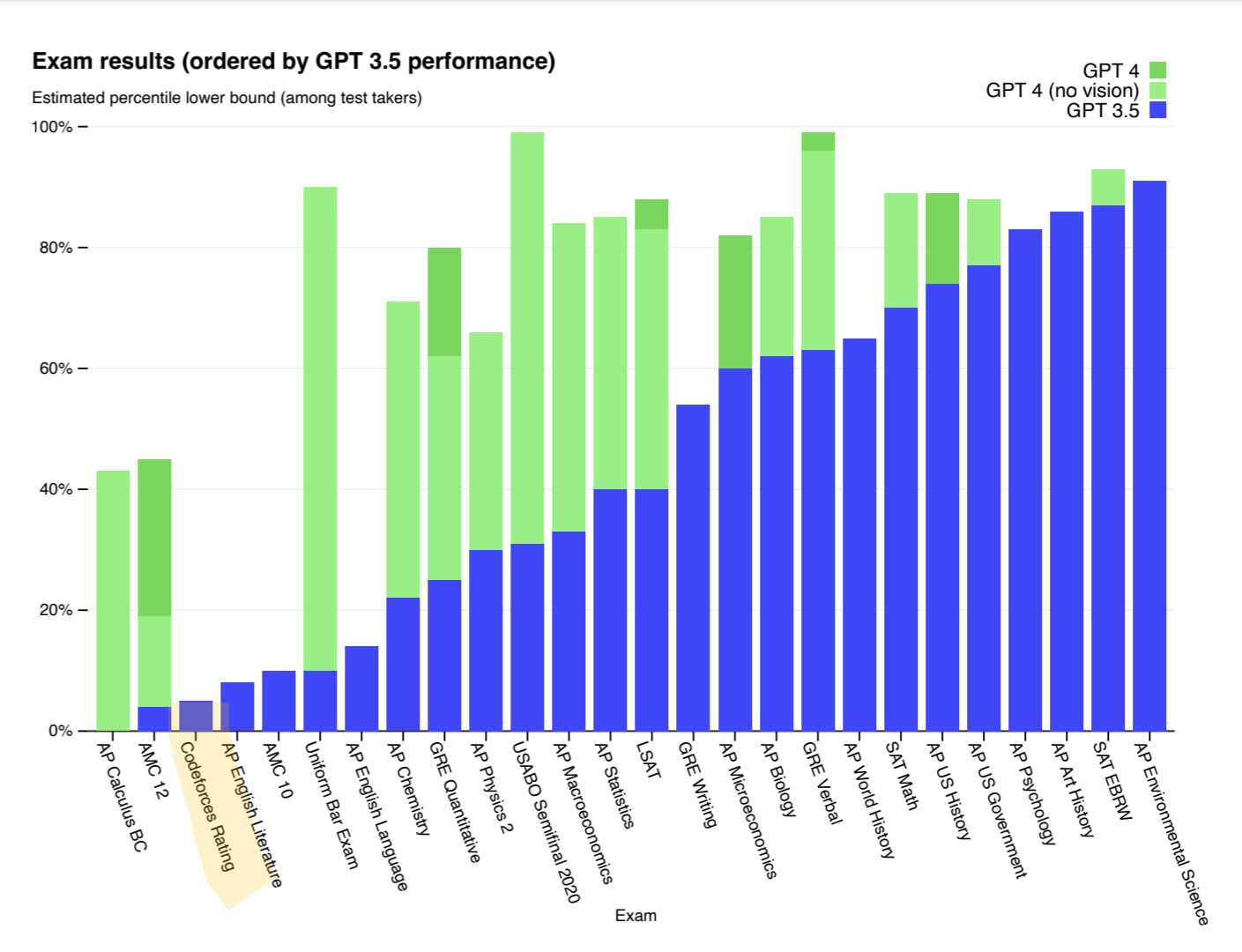

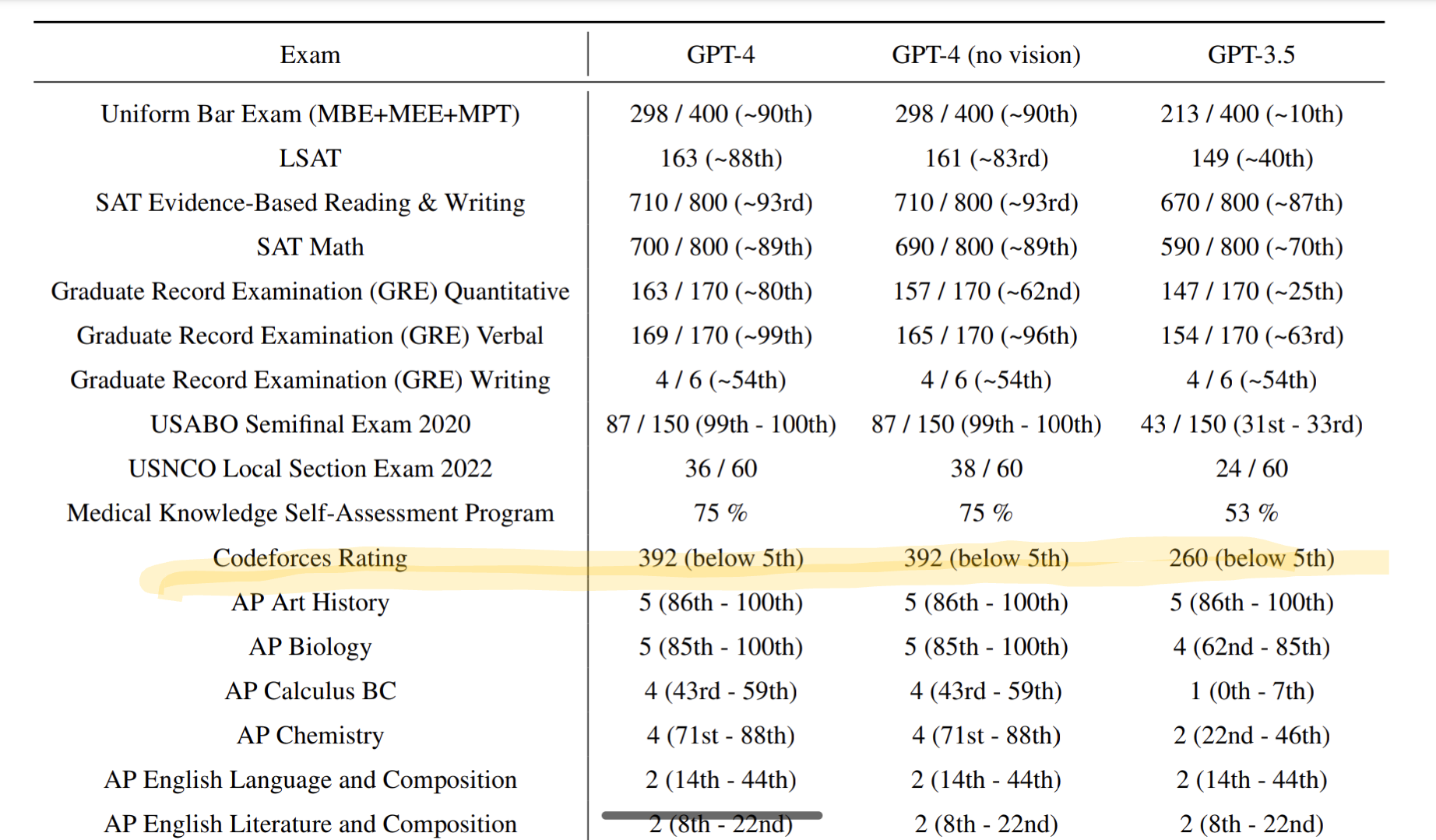

The recent progress in AI capabilities is significant, with DeepMind's AlphaCode performing at the level of a median human competitor in competitive programming as of February 2022. However, there are some factors that make predicting a gold medal-winning AI performance at the IOI by 2025 uncertain.

First, achieving a gold medal requires reaching the top 8% of an already highly-selected pool of contestants, which is a more challenging task than matching median performance. Additionally, the AI must not have access to the problems before being evaluated on them, adding complexity to the development of a proficient AI system.

However, AI technology has been advancing rapidly, and we have seen remarkable progress in various domains over just a few years. Considering the time remaining until the 2025 deadline, it is within the realm of possibility that an AI system could reach the required performance level.

Given the current probability of 40.15%, I think there is a slightly higher chance of an AI achieving this feat by 2025, taking into account the fast-paced development in AI technology. Thus, I would place a bet accordingly:

10

@jonsimon does that mean it didn't improve, or that they didn't test it/aren't releasing the result?

@jonsimon I'm not sure how well this argument holds up... LLMs seem to have sharp phase transitions on individual tasks. I believe it's possible for the next largest model, especially with some fine-tuning, to be very strong. Aiming for 50-50.