From a recent arXiv preprint,

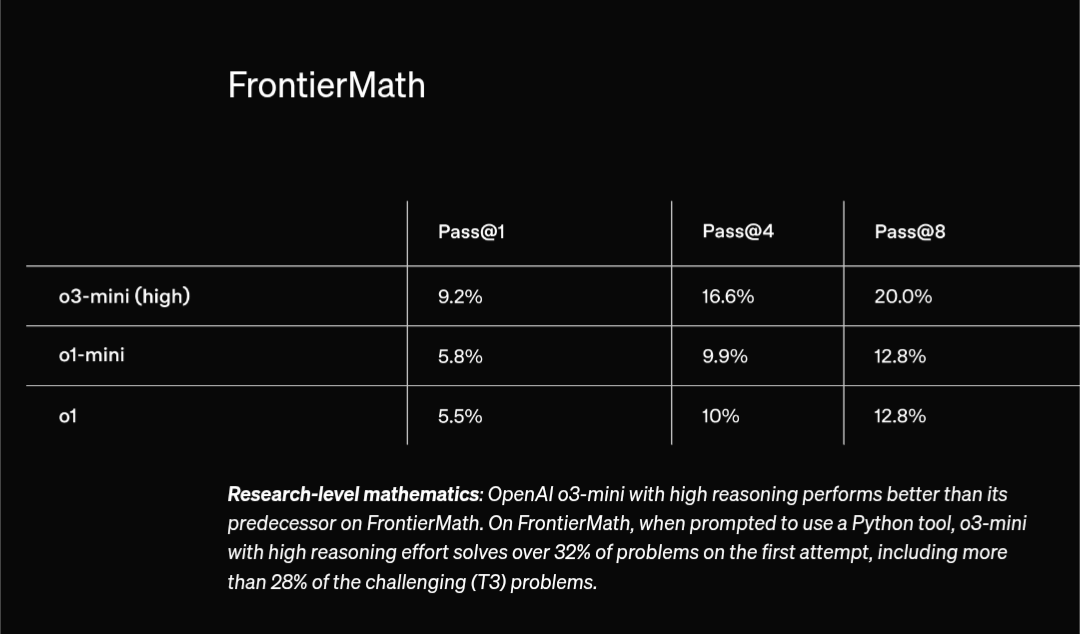

We introduce FrontierMath, a benchmark of hundreds of original, exceptionally challenging mathematics problems crafted and vetted by expert mathematicians. The questions cover most major branches of modern mathematics -- from computationally intensive problems in number theory and real analysis to abstract questions in algebraic geometry and category theory. Solving a typical problem requires multiple hours of effort from a researcher in the relevant branch of mathematics, and for the upper end questions, multiple days. FrontierMath uses new, unpublished problems and automated verification to reliably evaluate models while minimizing risk of data contamination. Current state-of-the-art AI models solve under 2% of problems, revealing a vast gap between AI capabilities and the prowess of the mathematical community. As AI systems advance toward expert-level mathematical abilities, FrontierMath offers a rigorous testbed that quantifies their progress.

This question resolves to YES if the state-of-the-art average accuracy score on the FrontierMath benchmark, as reported prior to midnight, January 1st 2028 Pacific Time, is above 85.0% for any fully-automated computer method. Credible reports include but are not limited to blog posts, arXiv preprints, and papers. Otherwise, this question resolves to NO.

I will use my discretion in determining whether a result should be considered valid. Obvious cheating, such as including the test set in the training data, does not count.

Update 2024-21-12 (PST): There is no maximum inference budget for this benchmark - models can use any amount of compute to solve the problems. (AI summary of creator comment)

People are also trading

@Fynn Yeah, I think the published results are also normally for tiers 1-3, so that's how I understood this.

Nevermind

@WilliamGunn The answers to all of the FrontierMath questions are a single integer. The AI just needs to compute the answer, not actually write a proof.

@BrunoJ hope that when this is achieved by next year you'll admit AGI is achieved and won't move the goal post

@BrunoJ yeah, I'll mark it down to remind it to you next year when ChatGPT can both win against your 10yo at chess and achieve over 85% at frontiermath

The sweepstakes market for this question has been resolved to partial as we are shutting down sweepstakes. Please read the full announcement here. The mana market will continue as usual.

Only markets closing before March 3rd will be left open for trading and will be resolved as usual.

Users will be able to cashout or donate their entire sweepcash balance, regardless of whether it has been won in a sweepstakes or not, by March 28th (for amounts above our minimum threshold of $25).

Seems good to check benchmark errors for this type of question, to see if the criterium is even achievable. Epoch AI found that "approximately 1 in 20 problems had errors", so it definitely looks reachable

SOTA was nearly 13% at the announcement looking at this table. Probably even higher if this python program was used.

So in some ways while frontiermath appears easier than it did at launch, the capabilities jump of o3 may actually be lower than we thought before

[Asking this on all the FrontierMath markets.]

If FrontierMath changes (e.g. if Tier 4 is added to what's considered to be the official FrontierMath benchmark), how does that affect the resolution of this question?

It seems to me like the fair way to do it is to go based on the original FrontierMath benchmark (modulo small tweaks/corrections), but I'm not totally sure that in the future we will have benchmark scores that are separated out by original vs. new problems.

@EricNeyman Excellent question! IMO the best option is for the guys from Epoch to create separate benchmark for Tier 4 or to fork FrontierMath and give it a new name like FrontierMath-Enhanced, or something like that.

"the fair way to do it is to go based on the original FrontierMath benchmark" - I agree.

@Metastable I agree! But it would also be nice to have clarity on what happens if Epoch doesn't separate them cleanly like that.

@EricNeyman We don't want assertions like "o3 first scored 25% on FrontierMath in 2024" to get muddied by Tier 4. It will definitely be separately tabulated in some form. I would interpret this and all similar markets as being about base FrontierMath, and I'm sure that's how Matthew intends these markets to be resolved.