If humanity is still around on Jan 1, 2030, then this market resolves to NO.

If humanity is not around but AI(s) are then this market resolves to YES.

If neither humanity nor AIs are around then this market does not resolve.

See also:

/Tripping/will-ai-wipe-out-humanity-before-th-6d1e8126d974

/JamesBills/will-ai-wipe-out-humanity-before-th-6e8e547afa66

@realDonaldTrump maybe the AI can resolve it, and your mana gains will just live on in the humanity hall of fame

Probably priced about right, though there's room for disagreement on the margins. 1-10%, I'd say.

Why did the probability of humanity being wiped out by AI spike to 7%? Probably had something to do with the lively election prediction markets and the prospect for profit there...

Almost all of that 7% is probably just a reflection of the time cost of Mana.

Will Manifold create a system that shows the discount-rate-adjusted probability of an event rather than the status quo?
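A rough way to put numbers on the time-cost argument: assume the marginal trader only buys NO when the expected gain at least matches what the same mana would earn elsewhere at some annual rate. The sketch below illustrates that assumption only; it is not anything Manifold implements, and the function names, the 5-year horizon, and the rates are hypothetical.

```python
def breakeven_annual_rate(market_prob: float, years: float) -> float:
    """Annual return that makes buying NO at price (1 - market_prob)
    merely break even, assuming NO is certain to pay out 1 per share."""
    return (1.0 / (1.0 - market_prob)) ** (1.0 / years) - 1.0


def discount_adjusted_prob(market_prob: float, annual_rate: float, years: float) -> float:
    """Probability of YES implied by the market price once the trader's
    opportunity cost is removed (assumed model: the NO buyer's expected
    gross return equals (1 + annual_rate) ** years). Clamped at 0."""
    growth = (1.0 + annual_rate) ** years
    return max(0.0, 1.0 - (1.0 - market_prob) * growth)


# Hypothetical inputs: a 7% market price and 5 years until resolution.
rate = breakeven_annual_rate(0.07, 5)
print(f"break-even annual rate: {rate:.2%}")
print(f"adjusted probability at that rate: {discount_adjusted_prob(0.07, rate, 5):.4f}")
print(f"adjusted probability at a 0% rate: {discount_adjusted_prob(0.07, 0.0, 5):.4f}")
```

Under these assumptions, an opportunity cost of roughly 1.5% a year is enough to account for the whole 7%.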

Also, arbitrage is screwed up because of a lack of discount rate corrections. Arbitrage makes markets more efficient. It also likely drives user engagement because some people really like picking up pennies when they should be doing their actual work. (It's A+ procrastination.)

Here's one arbitrage opportunity that should not exist:

The market for whether Taylor Swift will RUN for president before 2037 is trading at 7%:

https://manifold.markets/HarrisonLucas/taylor-swift-runs-for-president-of

The market for whether Taylor Swift will ever BE president is trading at 10%:

https://manifold.markets/Gigacasting/will-taylor-swift-ever-be-elected-p

In an efficient marketplace, the "Taylor runs for president before 2037" market should always trade higher than the "Taylor Swift wins the presidency before the heat death of the universe" market. The former will always be more probable than the latter, because the former must happen before the latter happens. But the probabilities are actually inverted.

Of course, the gap between the two may be due to trading fees and low liquidity. But it's likely caused by the time cost of money. One market resolves in 2037, the other ... resolves either when Taylor Swift becomes president or dies. Taylor is young and healthy and probably not doing rock star drugs, so that could be a while. If you tie up your money in these markets, is it arbitrage, or is it a bet on which one has a better implicit measurement of the discount rate?
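To make the trade concrete, here is a minimal sketch of the payoff on one YES share in the "runs" market plus one NO share in the "wins" market, bought at the quoted 7% and 10%. It ignores fees and slippage, and it follows the premise above that a win implies a run before 2037 (a win after a first run in 2037 or later is the one case where both legs lose). The 12-year holding period is a hypothetical number, not a resolution date from either market.

```python
from itertools import product


def pair_payoff(runs_before_2037: bool, ever_wins: bool) -> float:
    """Payout of 1 YES share in 'runs' plus 1 NO share in 'wins'."""
    yes_runs = 1.0 if runs_before_2037 else 0.0
    no_wins = 0.0 if ever_wins else 1.0
    return yes_runs + no_wins


def annualised_return(cost: float, payoff: float, years: float) -> float:
    """Compound annual return from paying `cost` now for `payoff` later."""
    return (payoff / cost) ** (1.0 / years) - 1.0


# Cost of the pair at the quoted prices: YES at 0.07, NO at 1 - 0.10.
cost = 0.07 + (1.0 - 0.10)

# Outcomes allowed by the premise "a win implies a run before 2037".
outcomes = [(r, w) for r, w in product([False, True], repeat=2) if r or not w]
worst_case = min(pair_payoff(r, w) for r, w in outcomes)

print(f"cost per pair: {cost:.2f}, worst-case payoff: {worst_case:.2f}")
print(f"annualised return over 12 years: {annualised_return(cost, worst_case, 12):.3%}")
```

The locked-in profit is about 3 cents per pair, which works out to a fraction of a percent per year over a long holding period. That is the sense in which the "arbitrage" is really a bet on the discount rate.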

Solution:

(1) Allow users to apply for loans at super-low interest rates. To prevent people minting endless money using the "loans," the loans have to be backed by collateral: namely, YES and NO shares in markets have to back the loans. This will allow arbitrageurs to close huge gaps and make the predictions more accurate. Manifold tried to do this algorithmically by automatically loaning back invested capital, but that led to crazy markets like this one.

For example, if I buy YES in the "Taylor runs before 2037" market and buy NO in the "Taylor becomes president" market, I could post the shares as collateral for a near-cost-free loan so I can get my capital back. It won't be tied up until either these markets become efficient (and I can unload the shares) or one or both of the markets resolve.
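A back-of-the-envelope sketch of how such a collateralised loan might free up capital, assuming the lender will advance whatever the collateral's worst-case payoff can repay with compound interest. The lending rule, the function names, the rates, and the 12-year term are all assumptions for illustration; nothing here describes an actual Manifold feature.

```python
def max_loan(worst_case_payoff: float, annual_rate: float, years: float) -> float:
    """Largest loan whose repayment (principal plus compound interest)
    is fully covered by the collateral's worst-case payoff."""
    return worst_case_payoff / (1.0 + annual_rate) ** years


def capital_tied_up(cost: float, loan: float) -> float:
    """Capital still locked in the position after taking the loan."""
    return max(0.0, cost - loan)


# Hypothetical position: a YES/NO pair that cost 0.97 and pays at least 1.00.
cost, worst_case, years = 0.97, 1.00, 12

for rate in (0.001, 0.05):
    loan = max_loan(worst_case, rate, years)
    locked = capital_tied_up(cost, loan)
    print(f"rate {rate:.1%}: loan {loan:.3f}, capital still locked {locked:.3f}")
```

With a near-zero rate the loan covers the full cost of the pair, so no capital stays locked. At a rate like 5% a large share of it does, which is why the interest rate has to be "super-low" for this to help arbitrageurs.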

(2) Alternatively and more or less equivalently: allow people to short YES or NO shares.

There are two benefits to these plans: first, markets will become more efficient. Second, the trading opportunities will create much more engagement (gamblers love leverage and complicated "systems" for making free money).

(3) Close "Divide by Zero FTW lolwut" markets like this one.

This market really shouldn't exist. After we've all been raptured into the divine LLM metamatrix and surrendered our earthly bodies, I doubt that the AIs who destroyed us will bother to resolve this market as YES.

For your consideration:

After we've all been raptured into the divine LLM metamatrix and surrendered our earthly bodies, I doubt that the AIs who destroyed us will bother to resolve this market as YES.

I used to say "then buy NO" to this, but now I have to say "then don't buy YES". Which is less satisfying.

I expect AIs to have convergent instrumental values, in part, which may suffice to resolve this market correctly. But of course if I actually knew that I wouldn't be wasting the insight on a play money prediction market.

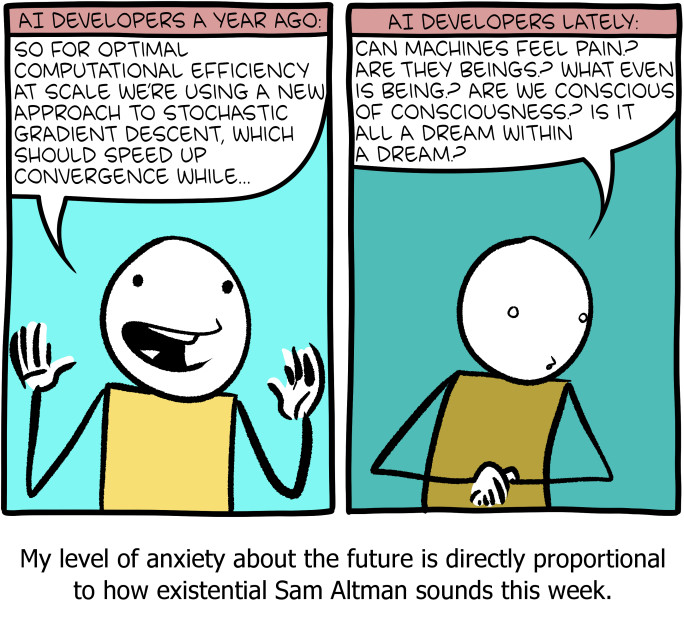

@Mirek I guess Sam Altman doesn't know that people don't "generate" words; they use them to express themselves.

@JonathanRay you've got a lot of mana locked in this market that resolves in 2030. I recommend we sell out of this market at 6% to get mana back and take down the market that resolves at the end of 2024 (the LK-99 market) for a much better return. TBH, the odds for both markets are around the same.

The problem with these markets is that I don't think it will be obvious, particularly in the later years like 2100, what the difference is between human, artificial, and alien intelligence. All of these things will likely be so interconnected that it will be impossible to define what a "human" is. The definition of "human" will continue to change, just as someone in 1 AD might look at a person who has two shoulder joint replacements and a prosthetic leg as not being human.

@SteveSokolowski That might be the case. But I can also imagine we are wiped out by some equivalent of a paperclip maximizer that is very clearly not human. Conditional on humanity being wiped out by AI by 2030, I give a very small likelihood to not being able to tell the difference.

@SteveSokolowski By alien intelligence, do you mean "non-human intelligence" of the sort that you hypothesize is causing UFO sightings? I thought you didn't like describing that as alien intelligence?