Duplicate of https://manifold.markets/EliezerYudkowsky/if-artificial-general-intelligence with user-submitted answers. An outcome is "okay" if it gets at least 20% of the maximum attainable cosmopolitan value that could've been attained by a positive Singularity (a la full Coherent Extrapolated Volition done correctly), and existing humans don't suffer death or any other awful fates.


The independent version is live, starting with 10 options: https://manifold.markets/4fa/independent-mc-version-if-artificia

I will add additional options based on the following polls:

https://manifold.markets/4fa/which-answers-should-be-kept-when-m

https://manifold.markets/4fa/google-form-in-description-which-an

@1bets I mean, that counts as an okay outcome. The reason it just replaces existing powers will be what the market resolves to.

Voted based on my research, summarized here: https://blog.ideanexusventures.com/the-conscious-economy/

@Kronopath how did I get in on this at 1.8% to 9%, and now the orderbook looks like this? lol, insane that this was ever so low.

@EliezerYudkowsky I really think it should be more like 0.001% (10^-24%?) of the "maximum attainable cosmopolitan value that could've been attained by a positive Singularity (a la full Coherent Extrapolated Volition done correctly)".

> An outcome is "okay" if it gets at least 20% of the maximum attainable cosmopolitan value that could've been attained by a positive Singularity (a la full Coherent Extrapolated Volition done correctly), and existing humans don't suffer death or any other awful fates.

Tons of unimaginably amazing, extremely good futures don't qualify as "okay" by this definition, hmm.

What exactly is the plan to resolve the multiple non-contradictory resolution criteria? Will there be some kind of "weighted according to my gut feeling of how important they are"? Will they all resolve "yes"? Or is it "I will pick the one that was most centrally true"?

It would be nice if there were some kind of flow chart for resolution, like in my "if AI causes human extinction" market.

I don't seem to have the ability to resolve the current answers N/A, and would hesitate to resolve "No" under the circumstances unless a mod okays that.

Unfortunately this is a dependent multiple choice market, so all options have to resolve (summing to 100% or N/A) at the same time. So it's not a question of whether that's ok with mods, it simply isn't possible given the market structure.

It's not uncommon for popular dependent MC markets to get many unwanted answers added. It would be great if there were better tools to control this, but unfortunately the options are pretty blunt. My personal recommendation (but totally up to you) would be to change the market settings so that only the creator can add answers. Then people can make suggestions in the comments, and you can choose whether to include them or not. (I can make that change to the settings if you'd prefer, but it's under the 3 dots for more market options.)

You can also feel free to edit any unwanted answers to just say "N/A" or "Ignore" or similar, to partially clean up the market (and clarify where attention should go). That's very much within your rights as creator. But there's no way to actually remove the options (or resolve them early, although they will quickly go to ~0% with natural betting).

@EliezerYudkowsky If it's not too much of a hassle, would you also consider making an unlinked version of this market with the most promising options copied over, so that the non-mutually-exclusive options don't distort each other's probabilities? I know I could do this myself if necessary, but your influence brings vastly more attention to the market, and this seems like a fairly important question. Maybe the wording would need to be very slightly altered to "...what will be true of the reason?"

@EliezerYudkowsky Least hassle approach: Start with "Duplicate" in the menu…

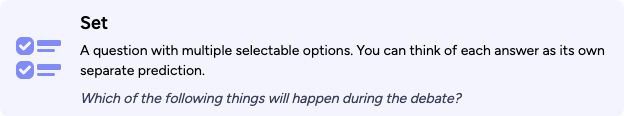

…then "Choose question type"…

…choose "Set" instead…

…delete the answers you don't want to keep. (When I tested, the answers carried over.)

@EliezerYudkowsky An alternative to N/A-ing this entire market would be to unlist it:

…in response to @TheAllMemeingEye's concern that "[this market] makes the site look bad being promoted so high on the home page".

Bafflingly, @EliezerYudkowsky appears to be the (distant) second-biggest Yes holder on Krantz’s options. I’m not sure how that happened. (Some kind of auto-betting from betting on “Other” or something?)

@Kronopath When one holds YES shares in 'Other', one is awarded that number of YES shares in any subsequently added options.

Despite being blocked, he's back again throwing mana at his own options, ffs. I am in favor of editing all of Krantz’s options to [Resolves No].

@Krantz This was too long to fit.

Enough people understand that we can control a decentralized GOFAI by using a decentralized constitution that is embedded into a free and open market that sovereign individuals can earn a living by aligning. Peace and sanity are achieved game-theoretically by making the decentralized process that interpretably advances alignment the same process we use to create new decentralized money. We create an economy that properly rewards the production of valuable alignment data, and it feels a lot like a school that pays people to check each other's homework. It is a mechanism that empowers people to earn a living by doing alignment work in a decentralized way, in the public domain. This enables us to learn the second bitter lesson: "We needed to be collecting a particular class of data, specifically confidence and attention intervals for propositions (and logical connections of propositions) within a constitution."

If we radically accelerated the collection of this data by incentivizing its growth monetarily in a way that empowers poor people to become deeply educated, we might just survive this.

@LoganZoellner Maybe you should correct the market. I've got plenty of limit orders to be filled.

@LoganZoellner Personally, I would actually support a total N/A at this point, given the nonsensical nature of a linked market with non-mutually-exclusive options. It makes the site look bad being promoted so high on the home page.

@Krantz

>Maybe you should correct the market. I've got plenty of limit orders to be filled.

Given this market appears completely nonsensical, I have absolutely 0 faith that my ability to stay liquid will outlast this market's ability to be irrational.

I have had bad luck in the past with investing in markets where the outcome criteria were basically "the author will choose one of these at random at a future date".

Also, note that this market isn't monetized, so even though I'm 99.9999999999% sure that neither of those options will resolve positively, there isn't actually any way for me to profit off that information.