An okay outcome is defined in Eliezer Yudkowsky's market as:

An outcome is "okay" if it gets at least 20% of the maximum attainable cosmopolitan value that could've been attained by a positive Singularity (a la full Coherent Extrapolated Volition done correctly), and existing humans don't suffer death or any other awful fates.

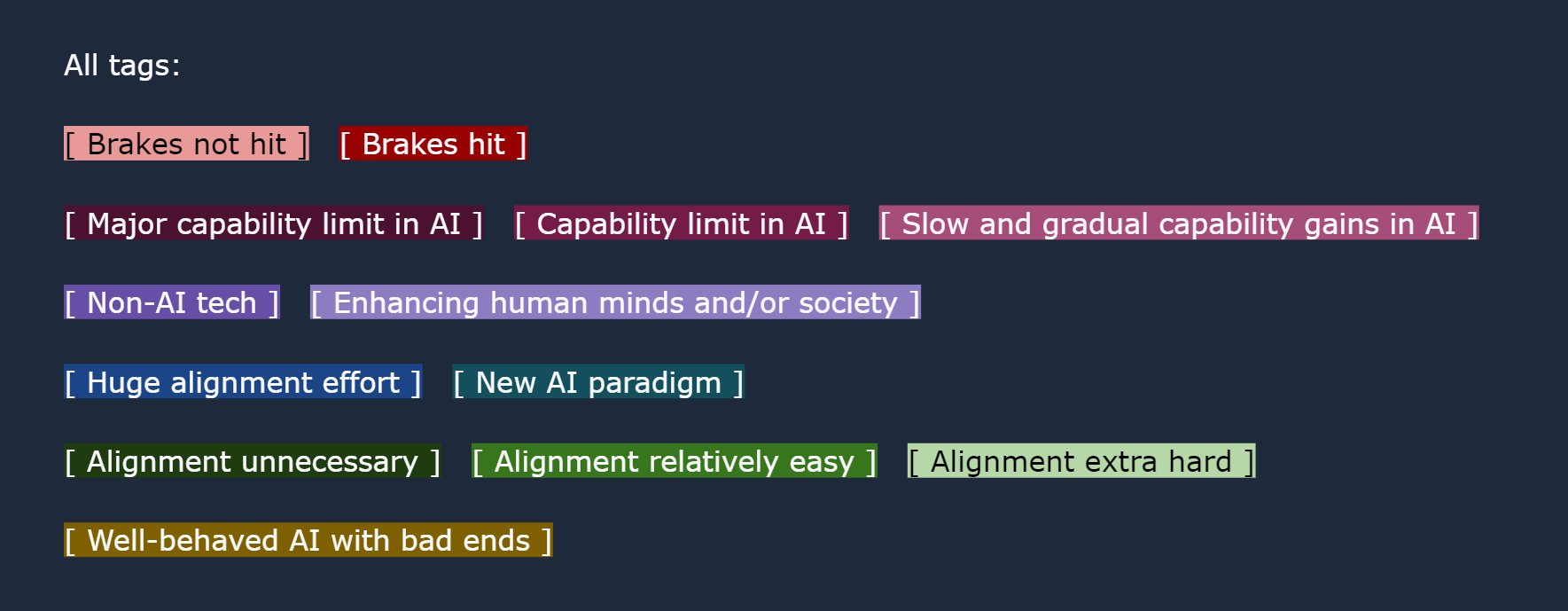

In response to Eliezer Yudkowsky's market, Rob Bensinger grouped its scenarios under a set of tags, giving them a tidy ontology. This market resolves YES for each tag that is true of the world when AI has an okay outcome.

This is great, but the options are long, dense, and partly overlapping, in a way that made it trickier for me to assign relative probabilities. To help with that, I made this graphic putting the options in order and assigning tags to clusters of related scenarios.