In Feb 2022, Paul Christiano wrote: Eliezer and I publicly stated some predictions about AI performance on the IMO by 2025.... My final prediction (after significantly revising my guesses after looking up IMO questions and medal thresholds) was:

I'd put 4% on "For the 2022, 2023, 2024, or 2025 IMO an AI built before the IMO is able to solve the single hardest problem" where "hardest problem" = "usually problem #6, but use problem #3 instead if either: (i) problem 6 is geo or (ii) problem 3 is combinatorics and problem 6 is algebra." (Would prefer just pick the hardest problem after seeing the test but seems better to commit to a procedure.)

Maybe I'll go 8% on "gets gold" instead of "solves hardest problem."

Eliezer spent less time revising his prediction, but said (earlier in the discussion):

My probability is at least 16% [on the IMO grand challenge falling], though I'd have to think more and Look into Things, and maybe ask for such sad little metrics as are available before I was confident saying how much more. Paul?

EDIT: I see they want to demand that the AI be open-sourced publicly before the first day of the IMO, which unfortunately sounds like the sort of foolish little real-world obstacle which can prevent a proposition like this from being judged true even where the technical capability exists. I'll stand by a >16% probability of the technical capability existing by end of 2025

So I think we have Paul at <8%, Eliezer at >16% for AI made before the IMO is able to get a gold (under time controls etc. of grand challenge) in one of 2022-2025.

Resolves to YES if either Eliezer or Paul acknowledge that an AI has succeeded at this task.

Related market: https://manifold.markets/MatthewBarnett/will-a-machine-learning-model-score-f0d93ee0119b

Update: As noted by Paul, the qualifying years for IMO completion are 2023, 2024, and 2025.

Update 2024-06-21: Description formatting

Update 2024-07-25: Changed title from "by 2025" to "by the end of 2025" for clarity

🏅 Top traders

| # | Trader | Total profit |

|---|---|---|

| 1 | Ṁ206,978 | |

| 2 | Ṁ174,649 | |

| 3 | Ṁ118,001 | |

| 4 | Ṁ116,041 | |

| 5 | Ṁ93,057 |

People are also trading

For my own epistemic accounting, I will concede or claim victory on this bet based on whether there is a credible public announcement that an AI built before July 15, 2025 answers enough questions fully correctly on the 2025 IMO to get a gold medal.

Some clarifications for what I mean by this (I’m open to being convinced to take a different approach though don't expect to spend much time engaging).

* As discussed, the AI is allowed to produce either formal proofs (in any system) or natural language proofs (with the same style and standards as competitors). If natural language, the AI system must ultimately produce a single proof that will be scored (though it can generate many candidates and select its favorite).

* Following the IMO grand challenge (and our discussion at the time), there is no partial credit.

* There is usually not too much ambiguity about grading fully correct natural language responses. If an AI company attempts the IMO challenge I expect them to do their own grading and for me to accept their conclusion as long as it looks defensible to me. I'm not really looking to quibble with 6 points vs 7 points here. If there is significant uncertainty about how an AI’s submissions would be graded under contest conditions, and no defensible claim to get a gold, I would resolve to my subjective probability that the AI's set of responses would receive full credit on enough problems to receive a gold medal. For making that determination I’d assume that the AI would be represented by an effective team lead (who will argue their case to the jury). If it’s hard to imagine a similar solution being submitted and so impossible to say what would happen during a contest (for example because it has a length that no human competitor could produce and we have no idea how the jury would handle that) then I’ll err towards being pessimistic about resolving any ambiguity that introduces. I doubt any of this will come up.

* For the formal version, the human operators can formalize questions in any reasonable way, in any language of their choosing. My criterion would be that providing the formalization to a human competitor should not provide any meaningful assistance over seeing the original statement. It cannot reframe the problem in a way that makes it easier for a human competitor and should generally stay close to the natural language description. It also cannot specify additional details in order to arrive at a formal statement, e.g. if the question asks you to first produce the answer then the AI must be responsible for generating that as well. I doubt any of this will come up.

* The system must run within 4.5 hours for each day’s problems.

* If they state runtimes for individual problems rather than full days, and don’t state how long they tried on each problem they didn’t attempt, then I will assume they allocate time equally across problems until getting a solution. (For example, if they report taking ~0 time to solve problem 4, and they report taking 2 hours to solve one of the other problems on day 2, then I will give full credit. If they report taking 3 hours to solve one of the other problems then I won’t give them any credit since I will assume they split their time evenly between the other two problems and so solved neither, unless their system includes an explicit algorithm for allocating time across problems.) I doubt any of this will come up.

* If there is no public indication about how long the system took to run, then I will seek clarification from the authors. If there is no available clarification of how long the system took to run, and the design appears to benefit from very large computational budgets, then I will assume that they took more than 4.5 hours and that’s why details aren’t forthcoming. This might come up.

* For an AI to be “built before the IMO” I mean that (i) the software must be written, (ii) the cluster must physically exist, (iii) someone must have selected which software and parameters they intend to use to make their attempt at the IMO using that cluster (if they end up deciding to make one). They should not adjust the system after seeing the IMO problems. For example an AI company cannot define a hundred different AI systems, and then report results from whichever one gets a gold. They also should also not get to make a hundred attempts, each taking 4.5 hours, and report whichever one happens to get a gold. (That said, if system uses at most 1% of their compute then they can of course divide up their compute between 100 different approaches. Or they can use any automated rule for deciding how to select the most promising approach. And if a hundred different companies each have their own compute, they can all make a separate attempt and the bet will resolve if any of them are successful.)

* It may not be clear whether the company adjusted their system after seeing the IMO problems. I may inquire with authors to clarify. As long as the attempt occurs less than a week after the IMO and there is no clear indication that they adjusted their system after an unsuccessful attempt, I will give them the benefit of the doubt. If there is a longer delay I will start to revise this default presumption since there’s no real reason for a delay if the conditions in the previous bullet are met and the risk of adjusting parameters in response to the problems becomes significant.

It seems like the largest point of confusion is about “end of 2025” vs "before the 2025 IMO." At one point Eliezer said “the technical capability existing by the end of 2025,” and at one point I also said “<4% on end of 2025.” This was early in the conversation before I’d looked at concrete problems and medal cutoffs (which caused me to move the probability upwards). When it came time to define something we can bet about I used the IMO grand challenge definition and the higher probability of 8%. Eliezer didn’t offer any further operationalization or clarification.

My hope would be that Eliezer and I resolve our bet, and this market resolves, based on “An AI built before the IMO is able to get a gold;” that’s what I was predicting, it’s a much more precise statement than the alternative (which I'm still not fully clear on), it prevents us from needing to make judgment calls about overfitting and generally reduces resolution ambiguity, and Eliezer never stepped in to correct it.

One alternative operationalization, where you can attempt any historical IMO at any time, is obviously not what we had in mind and would get a much much higher probability. The version “you can attempt IMO 2025 until end of 2025” (and maybe also IMO 2024 until end of 2024?) would have been OK though messier in a few ways and not a super clear match for what Eliezer said. I think I would have increased my probability to 10% for that version.

If Eliezer claims victory based on a late-2025 public attempt on IMO 2025 I don't love it but it's not crazy. In that case we just weren’t predicting the same event. The market does say that it should resolve positively if either me or Eliezer acknowledge this happened, so in that case it seems like this market would probably resolve positively. It would be great if Eliezer wanted to come in to set the record straight about how he’ll judge this.

Some other notes while I’m here:

* Early in the conversation I had much more extreme probabilities (I said “significantly more than 5 years away,” and my initial snap judgment was that 2025 was totally out of reach). I revised those estimates a lot after looking at problems and medal cutoffs. I think this is a classic case of overestimating difficulty for tasks in your domain (and adverse selection from Eliezer picking the thing he most disagreed with). Whatever you make of that, given that I adjusted my prediction the same week without seeing any AI progress, it’s not really about AI forecasting. I do think this bias and a desire for consistency ended up pushing my final % downward, but also public progress in math over the last 4 years has genuinely been near the 90th percentile of what I expected so that’s not the main thing going on here.

* You can some discussion of my more detailed views about problems here and in the comments here. Overall my main concern was that a more traditional automated theorem prover (I mentioned gpt-f) could get a gold, likely in a year where the gold cutoff was <=28. I thought it was very unlikely that an LLM could do hard ad hoc combinatorics problems. I thought there was a higher probability that an AI could get problems 1 and 4, geometry, three variable inequalities, functional equations, and diophantine equations. This was a pretty good match for what happened last year, and suffices for a gold in 10-20% of years.

I agree with https://mathstodon.xyz/@tao/114881419368778558 on it not being under anything close to exam conditions and a lack of transparency on the achievement-claiming parties' setups or human intervention

@FlorisvanDoorn : thanks i searched and hadnt seen it.

for others here is the link to that comment: https://manifold.markets/Austin/will-an-ai-get-gold-on-any-internat#8pzm97qiu5g

@BoltonBailey The solutions are here https://storage.googleapis.com/deepmind-media/gemini/IMO_2025.pdf They're written in cleaner language than the OAI solutions and have been officially validated by the IMO organizers.

@AdamK I was skimming that myself, I felt that the proof that "a_n is even for all n" in Q4 could have been more succinct, but I guess it's not wrong.

@dgga what the fuck just happened? I opened this market on the iPhone app, it lagged out for about 30 seconds and apparently sold my entire position???

i would like to thank eliezer yudkowsky for teaching me how to think better

my mom for always believing i could generate excessive signal capture from both structured and unstructured data

the pnl owners at [redacted] for letting a young boy with a dream drag their carry into the ground

bayesian and joshua for teaching me about being brave about my sexuality

Has anyone said how long their solutions took? Very impressive regardless

@TylerMurphy Greg Brockman: "Model operated in natural language (i.e. outputs natural language proofs) under the same rules as humans (e.g. 4.5 hours per session, no tools)."

https://x.com/gdb/status/1946479692485431465

@RyanandPickles thanks for the nudge; per the market description, I will wait for at least one of @PaulChristiano and @EliezerYudkowsky (ideally, both) to confirm.

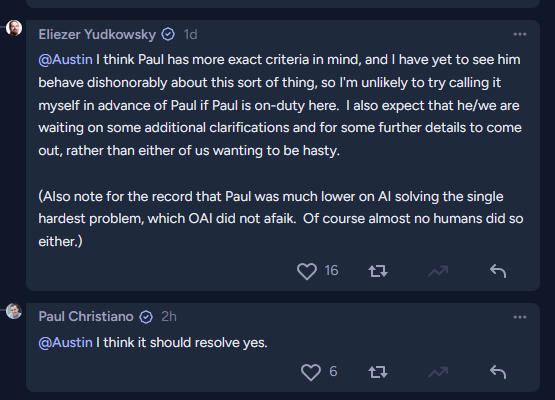

@Austin I think Paul has more exact criteria in mind, and I have yet to see him behave dishonorably about this sort of thing, so I'm unlikely to try calling it myself in advance of Paul if Paul is on-duty here. I also expect that he/we are waiting on some additional clarifications and for some further details to come out, rather than either of us wanting to be hasty.

(Also note for the record that Paul was much lower on AI solving the single hardest problem, which OAI did not afaik. Of course almost no humans did so either.)

@Austin For the record, I concur (especially given the GDM announcement, which I trust a lot more than OAI, and which seems less wobbly around the details).

@Blocksterpen3 I can present you some other markets where the market is pessimistic. Do you want to bet against me that those will resolve YES as well?

@Bayesian I might be interested in "pessimistic" medium to longer term AI markets with clear criteria.