Inspired by these questions:

/sylv/an-llm-as-capable-as-gpt4-will-run-f290970e1a03

/sylv/an-llm-as-capable-as-gpt4-runs-on-o

Resolution criteria (provisional):

Same as /singer/will-an-llm-better-than-gpt4-run-on, but replace "gpt4" with "gpt3.5".

Update 2025-01-01 (PST) (AI summary of creator comment): Resolution will be based on the creator's personal judgment to save time.

If you disagree with the resolution, please DM the creator for a refund.



@traders I'm resolving this based on personal judgment to save time. If you disagree, please DM me and I'll refund you.

@singer presumably this is deepseek? I think you shouldn't refund people who disagree with you but that's just me

@Bayesian It's not any model in particular. It's been so long since I used gpt3.5 that I really can't remember how good it was compared to the local models I've been using. I offered to refund because I resolved based on vibes.

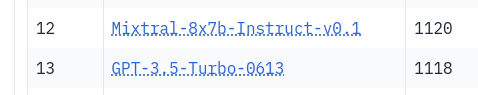

@ProjectVictory I noticed that tie, yeah. I'm not sure how to deal with the case of quantized models. EDIT: see below

@ProjectVictory

This is what I'm thinking of doing:

For a quantized model to be eligible, its score on the Winograd Schema Challenge cannot differ by more than 2% from the original model's score.
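The proposed rule doesn't say whether "2%" means percentage points or a difference relative to the original score. A minimal sketch of the check in Python, assuming both scores are accuracies in percent (0 to 100); `is_eligible` and its `relative` flag are hypothetical names used only to show the two readings side by side:

```python
def is_eligible(original_score: float, quantized_score: float,
                tolerance: float = 2.0, relative: bool = False) -> bool:
    """Return True if the quantized model's Winograd score is within tolerance.

    Assumption: scores are accuracies in percent. Whether "2%" means
    percentage points or a relative difference is not specified in the
    rule, so the hypothetical `relative` flag selects the reading.
    """
    # abs() so a quantized model scoring higher is treated the same way.
    diff = abs(original_score - quantized_score)
    if relative:
        # Relative reading: the difference as a percentage of the original score.
        return diff / original_score * 100 <= tolerance
    # Absolute reading: the difference in percentage points.
    return diff <= tolerance


print(is_eligible(80.0, 78.5))                 # 1.5 points apart
print(is_eligible(80.0, 77.5))                 # 2.5 points apart
print(is_eligible(80.0, 78.3, relative=True))  # about 2.1% relative difference
```

Under the absolute reading, an original score of 80.0 admits quantized scores from 78.0 to 82.0; under the relative reading, the window is 1.6 points wide on either side, so the two interpretations only diverge near the boundary.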