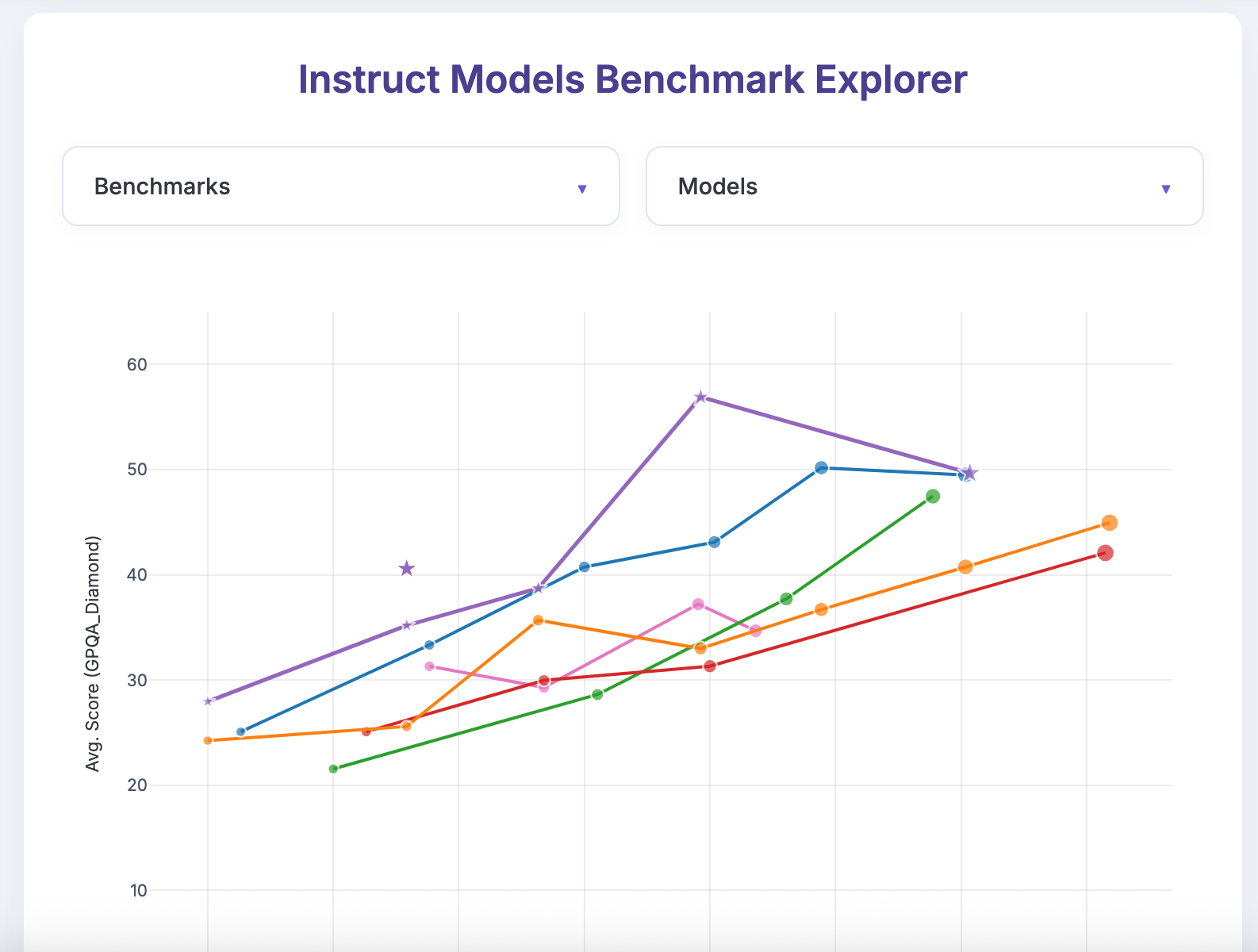

Criteria for meeting GPT-3.5 is either ≥ 70% performance on MMLU (5-shot prompt is acceptable) or ≥ 35% performance on GPQA Diamond

Resolves to the amount of memory the open-source LLM takes up when run on an ordinary GPU. Only counting models that aren't fine-tuned directly on the task. Quantizations are allowed. Chain-of-thought prompting is allowed. Reasoning is allowed. For GPQA, giving examples is not allowed. For MMLU, maximum of 5 examples. Something like "an chatbot finetuned on math/coding reasoning problems" would be acceptable. I hold discretion in what counts, ask me if you have any concerns.

Global-MMLU and Global-MMLU Lite are considered acceptable substitutes for MMLU for the purposes of evaluation.

I will be ignoring statistical uncertainty and just use the headline figure unless the error is extremely large and like >5% and the uncertainty might matter.

Absent any specific measurement, I will be taking the model size in GB as the "amount of memory the open-source LLM takes up when run on an ordinary GPU", but if you can show that it takes up less in memory, I'll use that. If needed, I might run it on my own GPU and measure the memory usage on that. Quantizations count.

My definition of evidence is reasonably inclusive. For example, I would be happy accepting this Reddit post as evidence for the capabilities of quantized Gemma models.

Open-source is defined as "you are allowed to download the weights and run it on your GPU." For example, Llama models count as open-source. Unintentional releases do not count.

🏅 Top traders

| # | Trader | Total profit |

|---|---|---|

| 1 | Ṁ830 | |

| 2 | Ṁ38 | |

| 3 | Ṁ31 | |

| 4 | Ṁ28 | |

| 5 | Ṁ25 |

People are also trading

Falcon H1 is probably a new SOTA (the star is a 1.5B model with thinking).

@Fynn Thanks for pointing that out!

The safetensors size is 3.11GB, so the categories about 2-3.99GB cannot resolve YES.

Qwen3 looks promising, https://arxiv.org/pdf/2505.02214 found over 70% MMLU for 4B-base with 8bit SmoothQuant

@Fynn From information I can find, Qwen3 4B at 8bit will fall into 4 - 5.99 GB, so options higher than that cannot resolve YES.

The paper tested with 5-shot, which is okay as GPT-4 also tested with 5-short MMLU

As a proud owner of 8gb GPU, I will be very interested to see how this resolves. Is there a place where you can get MMLU scores for quantized models? There are a lot of 8-12b models and I wasn't able to find a score for a lot of them without quantization, not to mention most have 5-20 quantized variants that nobody ever tested.

@bohaska if this was a market about current situation, what model would it resole to in your opinion?

@ProjectVictory if I had to resolve this market right now, I would probably resolve based on the Gemma quants and say that currently the smallest LLM that matches GPT is probably slightly larger than 16GB. I do not plan on proactively testing MMLU scores for now, though I do have the capability.

https://storage.googleapis.com/deepmind-media/gemma/Gemma3Report.pdf

for reference, Gemma 3 12B, which gets 40% on GPQA and 69.5% on Global MMLU-Lite, takes up 24GB with bf16, or 12.4GB with SFP8 quantization (because the evals were likely done on the original, I will not be using the quant model size, only sharing for reference)

Gemma 3 4B, which gets 30% on GPQA and 54% on Global MMLU-Lite, which would not qualify for this market, has a size of 8GB with bf16, or 4.4GB with SFP8 quantization