The method used could be anything, for example scanning the brain and decoding brain waves into language and/or images using machine learning.

Experimentally, we should be able to do things like predict someone's internal monologue, or predict what answers someone will give to questions, with only minor errors.

For example, if someone thinks of a number between 1 and 100 and we can consistently guess which number they're thinking of, this would be strong evidence for YES.

Reminder to read comments for additional clarifications.


Just to make sure I understand the question you are asking, it could be rephrased as something like:

By the end of 2025, will there exist any laboratory method (i.e. it does not have to be particularly practical or well distributed) to read someone's:

- Inner monologue OR

- Mental imagery OR

- Hidden commitment/guess in a guessing game

With 98% accuracy, i.e. getting it right 49 times out of 50?
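To get a feel for how strong that kind of result would be in the number-guessing case, here is a minimal Python sketch. It rests on assumptions that are not stated in the market: 50 independent trials, numbers chosen uniformly from 1 to 100 (so a pure-chance guesser is right 1% of the time), and a hypothetical helper named `chance_tail` that computes a plain binomial tail probability.

```python
from math import comb


def chance_tail(trials: int, hits: int, p_chance: float) -> float:
    """Probability of at least `hits` correct guesses in `trials`
    independent attempts when each guess succeeds with `p_chance`."""
    return sum(
        comb(trials, k) * p_chance**k * (1 - p_chance) ** (trials - k)
        for k in range(hits, trials + 1)
    )


# Assumed setup: 49 correct out of 50, chance rate of 1 in 100.
trials, hits = 50, 49
accuracy = hits / trials
p_value = chance_tail(trials, hits, 1 / 100)

print(f"accuracy = {accuracy:.0%}")
print(f"P(at least {hits}/{trials} by chance) = {p_value:.3g}")
```

Under these assumptions the chance probability is vanishingly small, so even a much lower hit rate than 98% would already be far from what random guessing produces. Whether such a lower rate should count for resolution is a separate question for the market creator.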

It all depends on the criteria for this Manifold bet. Analyzing emotions and responses in the brain is very different from reading out human thoughts word for word, like a script. While the technology in this field is certainly advancing and taking huge steps in the right direction, 2030 is probably too soon for it to provide a reliable way to read thoughts. It would be interesting to go deeper into this subject and ask professionals in the field for their perspective.

https://ai.meta.com/blog/brain-ai-image-decoding-meg-magnetoencephalography/

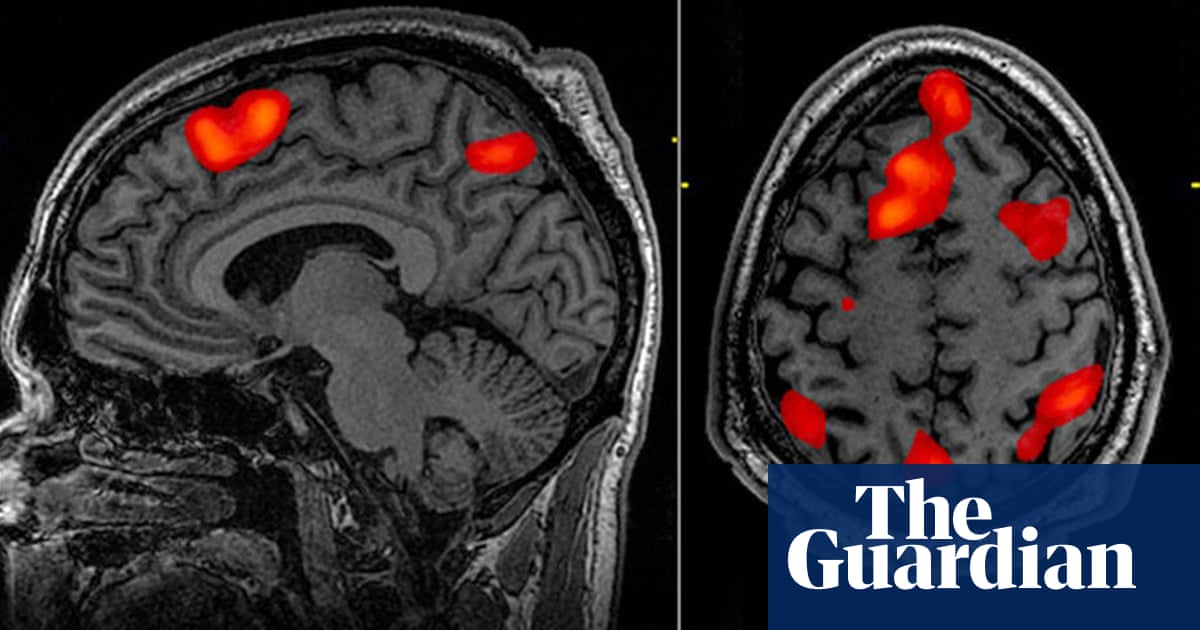

Today, Meta is announcing an important milestone in the pursuit of that fundamental question. Using magnetoencephalography (MEG), a non-invasive neuroimaging technique in which thousands of brain activity measurements are taken per second, we showcase an AI system capable of decoding the unfolding of visual representations in the brain with an unprecedented temporal resolution.

This AI system can be deployed in real time to reconstruct, from brain activity, the images perceived and processed by the brain at each instant. This opens up an important avenue to help the scientific community understand how images are represented in the brain, and then used as foundations of human intelligence. Longer term, it may also provide a stepping stone toward non-invasive brain-computer interfaces in a clinical setting that could help people who, after suffering a brain lesion, have lost their ability to speak.

"predict someone's internal monologue" seems rather different from "predict what answers someone will give to questions". Will this resolve as Yes if only the first part is true? E.g., there is a reliable method of listening in on internal monologue, but it isn't useful for predicting answers that contradict the internal monologue or don't pass through it at all.