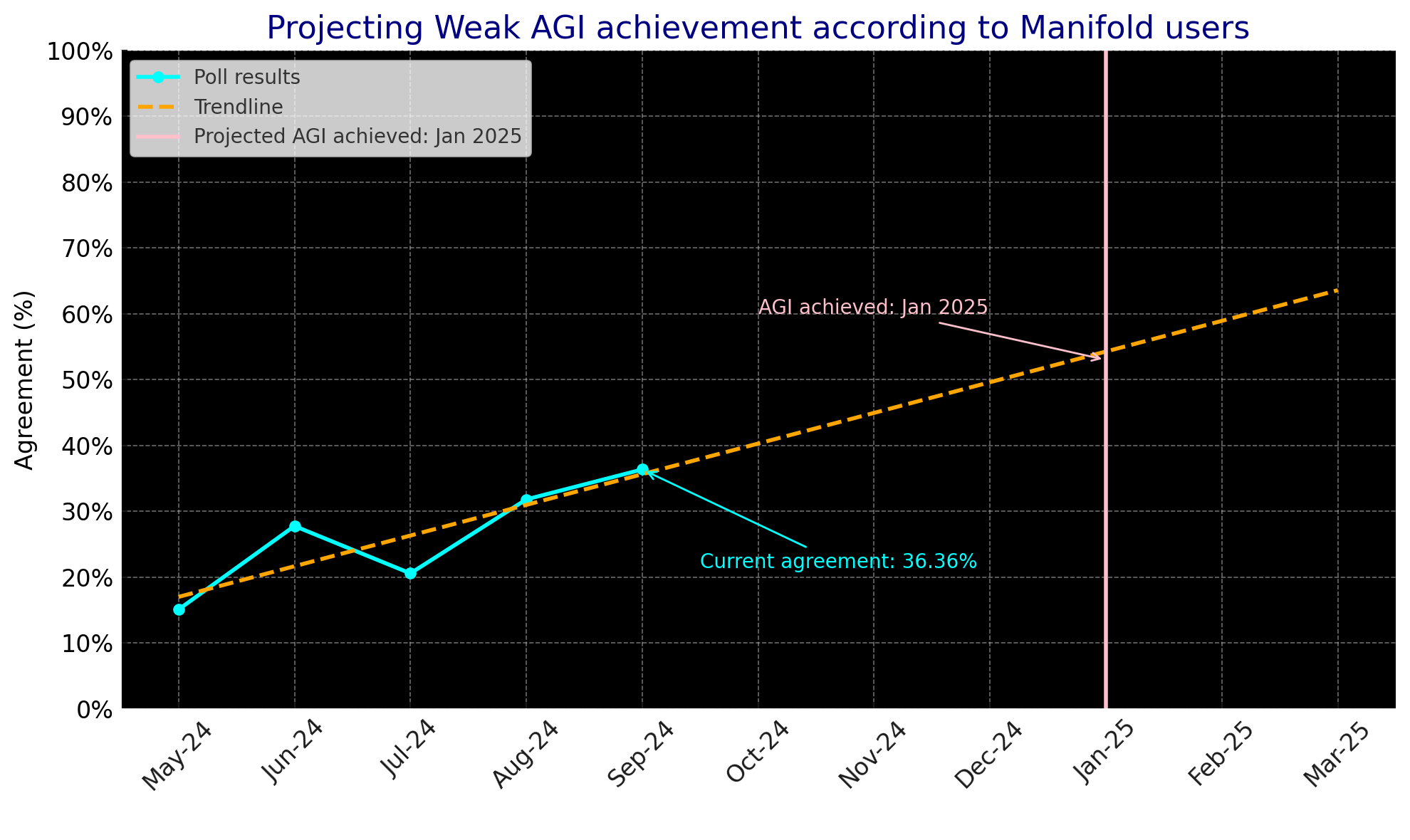

The September 2024 "Has weak AGI been achieved?" Manifold poll has closed, and the existing linear trendline has yet again been invalidated. The percentage of Manifold users who agree that weak AGI has been achieved now stands at 36.4%, an increase of 5% since the August 2024 poll. Also, for the first time, the two polls received exactly the same number of votes; the change reflects a swing of some respondents from NO to YES.

The trendline has become steeper and now projects that Manifold will declare the achievement of AGI in four months.
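The projection in the previous paragraph can be sketched as follows. This is a minimal sketch under assumptions that are not stated in the market itself: it assumes the trendline is an ordinary least-squares fit of YES percentage against poll month, and that "Manifold will declare the achievement of AGI" means the fitted line crossing 50% (the majority threshold mentioned in the comments below). The poll history is not reproduced on this page, so no real data is filled in; `fit_line` and `months_until` are hypothetical helper names.

```python
from typing import Optional, Sequence, Tuple


def fit_line(xs: Sequence[float], ys: Sequence[float]) -> Tuple[float, float]:
    """Ordinary least-squares fit y = slope * x + intercept."""
    n = len(xs)
    if n < 2 or n != len(ys):
        raise ValueError("need at least two (x, y) points of equal length")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("x values must not all be equal")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def months_until(xs: Sequence[float], ys: Sequence[float],
                 target: float = 50.0) -> Optional[float]:
    """Months after the latest poll until the fitted line reaches `target` percent.

    Assumption: xs are poll months as consecutive numbers (0, 1, 2, ...)
    and ys are YES percentages. Returns None if the trend is flat or falling.
    """
    slope, intercept = fit_line(xs, ys)
    if slope <= 0:
        return None
    crossing = (target - intercept) / slope
    return crossing - xs[-1]
```

Because every new poll is added to the fit, a result above the line steepens the slope and pulls the projected crossing date closer, which is why the trendline keeps moving.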

Will the new linear trendline again be exceeded by accelerating progress, with at least 40% of Manifold respondents (ignoring those who express no opinion) agreeing that weak AGI has been achieved in the October 2024 poll? This market will resolve YES if that occurs, and NO if it does not.
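The resolution rule above counts only respondents who gave a YES or NO answer. A minimal sketch of that check, assuming the poll reports separate YES, NO, and "no opinion" counts; the function name `resolves_yes` is hypothetical, and treating a poll with no decided votes as NO is an assumption not stated in the market.

```python
def resolves_yes(yes_votes: int, no_votes: int, threshold_percent: int = 40) -> bool:
    """Return True if the YES share among decided voters is at least the threshold.

    "No opinion" votes are ignored by construction: only YES and NO are counted.
    Integer arithmetic avoids floating-point edge cases at exactly 40%.
    """
    decided = yes_votes + no_votes
    if decided == 0:
        return False  # assumption: no decided votes resolves NO
    return 100 * yes_votes >= threshold_percent * decided
```

For example, 40 YES and 60 NO votes resolve YES no matter how many respondents chose "no opinion", while 39 YES and 61 NO resolve NO.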

PREVIOUS ITERATION OF THIS QUESTION:



Strangely, the results of the poll declined to 24%, the lowest on record.

Part of the reason may have been that I blocked ten users who were demeaning me on some of the election markets, and those users were therefore unable to vote on this poll. I did notice that some of them had been YES answerers before.

@Choms Because there is no universal definition of the term. There are some, like me, who believe it has already been achieved when the models blew past human level on 99% of tasks. There's an extreme end of the spectrum that believes "AGI" must be able to do everything a human does perfectly, at 1 million times faster, and be God.

That's why the polls don't actually define what "AGI" is. When a majority of people agree that it has been achieved - whatever it is - then we can say the threshold has been reached.

I think that all types of models - music, images, video, and reasoning - are strangely all converging to human level at around the same time, as if everything humans find important requires just about the same amount of computation, which would make sense. And it also seems to me that we are within 2 or 3 months of better than human level in all these model types right now, so I agree with the trendline that we will have "AGI" by the end of the year.

@SteveSokolowski I don't think you can use a lack of definition to just say "if enough people agree, then it must be true," because sadly I could find subsets of people in which a majority believe the earth is flat or that the world popped into existence as we know it now, and that won't make it any truer. I have yet to see an LLM (and LLMs specialize in writing text) that passes a Turing test with me judging. I honestly enjoy spotting all the AI-generated stuff nowadays; it's everywhere, and it's impressive work, but it's definitely not human, not by a long way :)

@Choms Well, that might be your opinion of the definition, but 36% disagree, and I personally find that even GPT-4o, which is the least capable of the big models now, can run circles around any human. I'm not convinced by these stupid examples like the number of Rs in "strawberry," which are completely pointless when you have a machine that can write an indistinguishable song from scratch for two dollars, and a large number of humans are going to fail the test either way:

/SteveSokolowski/musical-turing-test-was-this-song-c

We're not asking people to vote on whether the world is flat. We're asking them to vote on a subjective question.

@SteveSokolowski Ah, but I'm not criticizing how people vote. I'm just saying it's a wild conclusion that just because a subset of people think something (which, by your own words, is subjective), that something becomes true. Honestly, why would I trust the knowledge of those people in the first place? Even my gf shows me obvious fakes from IG that she (and most people) thought were real. Also, I write 100 times better than 4o/o1 because I can actually reason and apply psychology to the way I write, something LLMs don't do, and that gives them away super fast ;)

What you are saying is that it works better than most people, which is true, but only because most people don't put any interest or effort into their jobs. Give a human their dream job and they'll leave any current LLM eating dust.

@Choms Hmmm, my experience with "fake-looking" images and "fake-sounding" songs is that the human making them didn't know how to use the tools correctly.

It's 100% possible today to create indistinguishable images and music (and apparently video too, if OpenAI's posts are authentic). However, you need to know how to use the tools and reject a lot of output before finding what you're looking for.

I think what your girlfriend is seeing is criminals who just use weak models to spam out fake images to sell things. They don't really care if most people realize they're fake. My mail server is now deleting 1000 LLM-generated loan offers per day that all have my name and address on them. They use small models because they wouldn't be able to make a profit if they were using o1-preview to generate this stuff.

I still maintain that I can't find a way to produce higher quality output with a human alone when the tools are used correctly. If you want to write music, then you need to know how to extend and inpaint it. If you want to write legal briefs, you need to use GPT-4o (not o1), because o1 is terrible, and you need to have a conversation with the AI to correct its outputs a few times. When used correctly, the output is far superior to reality.

@SteveSokolowski I have a particularly good eye for fakes, even from before AI was a thing, so I consider myself an especially hard kind of Turing test. Honestly, Cleverbot back in the day was more realistic, simply because it was trained by 4channers, which made it wonderfully imperfect. I don't mean scams; people are using it for literally everything. I've even seen AI-made giant crabs for whatever reason 🤷♂️ and yes, people do believe giant AI crabs may be real...

For the latter part of your message I do agree with you, but you are describing a tool, and you need a human who KNOWS what they are doing to get the result you want. The AI won't get there on its own, nor will it gain new abilities without me training it. A Swiss Army knife can do a lot more things than cut, but it's still a knife, isn't it?

@Choms I'm curious. Listen to the song in the linked market a few comments above. Do you believe it is AI-generated? I'll reveal the answer in a few days when the market closes, but I want to see whether you can determine if it's "fake" or not.

I doubt that there will ever be a day when an AI tool can generate an image that everyone considers "real" with zero human intervention. The tools' ability to compute reality will likely always exceed the speed of the interfaces our consciousnesses can input commands into, and therefore the number of outputs that aren't what "humans" want will vastly exceed the things that we are looking for.

@SteveSokolowski the lyrics for sure

Even if the oceans fried.

😂

The song itself is at least partially generated: you can tell by the voice that changes gender at one point and by the overall lack of consistency. It's not a horrible song, and I've heard worse from lazy humans, but if this was done by a person, it certainly wasn't worth the time it took (which makes the AI explanation more likely). I would not bet on that market, though, because you added a set of boundaries on the story part that may make it resolve NO, imho.

@Choms Well, you'll see the answer when the market resolves.

I do find it interesting that you believe it is better than what some humans have written. That seems to be a universal consensus.

@SteveSokolowski Sure, but that's not hard; as I pointed out, the issue is the low bar xD. The song lacks meaning and emotion. You could say the same about a lot of commercial music (some genres of mumble rap, for instance, work more with the sound the words make than with their meaning), but probably any indie artist who actually wants to express something (anything) would do a better job, simply because art is based on expression. You can get great art with AI as long as the human artist wants to share something; in that case there's a confluence between what you want and what the AI suggests. I don't think the deciding factor in making good art is the model being used, because, again, models are not human: they don't have a vision, emotion, or any objectives other than what you prompt. If you had defined a topic for the song, rather than any random uplifting "burn the roof" kind of song, it would have gotten a better result even with the same tools, time, and effort. My 2 cents :)

@Choms I suspect that AI could have made the song more emotional if that was intended. In fact, it probably could have done a better job than I could if I had wanted to write a really sad love song.

Regardless of whether AI was used or not, I didn't intend the song to be emotional and meaningful. I specifically intended to write a song that was as catchy as possible that had absolutely no meaning to it whatsoever. I'd disagree and suggest that adding meaning would actually have detracted from a song like this.

"Tension II" by Kylie Minogue got high reviews specifically because the songs have even less meaning than that one. One of the songs is even titled "Dance to the Music." She makes no pretense whatsoever about having any purpose to her songs and yet the album is a critical success.

@SteveSokolowski I haven't heard it yet. Either way, I never said mumble rap and similar genres weren't successful, but in your specific case the song shows a lack of structure. That's way too much text for what it's aiming at; you'd expect to see some repeated structures. A real person would have either repeated whole pieces or written far less text. I don't think you'd have the patience to mumble all that nonsense manually for a market (opinion) haha

@Choms So, what I gather from this comment, then, is that if a real person had written it (the song in the market), it probably would have had less originality due to repeating the verses. That's an interesting statement.

The market this question refers to is going to be very close - it's hovering on the 40% threshold right now. I think the new Claude 3.5 Sonnet blows away the old one by so much that it's made a few people who answered NO last time change their minds. It certainly changed Dr. Alan's "Countdown."

@SteveSokolowski Yup, it's very unnatural (humanly speaking) to be lazy and overachieve at the same time, but not for a machine, because outputting tokens takes no "effort."

Claude is impressive, and again, don't get me wrong: I use AI tools a lot and I also like some of the generated art. But imo a very basic requirement of AGI is the capacity to learn new abilities on its own like humans do (how else is it going to be general?), and right now no known model does that. There is some promising work with robotics and world models, but they still need to get "nudged" in the direction you want. That's not very general, and I won't consider it so until I see an actual robot get into a new situation without any previous training and start investigating how to do the "thing" without anyone asking. A robot mouse that learned on its own just by living among other mice would be a nice PoC.

@Philip3773733 I've been voting YES on these markets since July, I believe. First, I would bet that at least 75% of humans would not be able to draw a game of tic-tac-toe.

Second, I don't really think that tic-tac-toe is relevant to whether something has achieved general intelligence. These models can spit out cancer immunotherapies in five minutes of thought that are going into real-world testing, and we're going to judge them on stupid things like how many Rs are in "strawberry"? I think there are too many people who get caught up in these minute details about irrelevant things.

But as to this market, every single predecessor has resolved YES, probably because technology does not progress linearly, but the questions are about the linear trendline (which keeps moving up).

I expect that o1-ioi will be released later this year and that the polls will surpass 50% by December, as o1-ioi's 91% score on the programming questions will undoubtedly be AGI.

@SteveSokolowski I have not seen any LLM release a novel cancer therapy; could you provide a source? If it is a general intelligence, it should at least know how to win at tic-tac-toe. Just try it. It's completely dumb, because it was not trained on it. So it is not general.

@SteveSokolowski Sure, if posts on X are your standard of proof, I now understand how you arrive at this conclusion.

Here is actual research showing that ChatGPT will probably kill you if you use it for cancer treatment: https://news.harvard.edu/gazette/story/2023/08/need-cancer-treatment-advice-forget-chatgpt/