As this article (https://www.technologyreview.com/2022/05/27/1052826/ai-reinforcement-learning-self-driving-cars-autonomous-vehicles-wayve-waabi-cruise/) describes, first-generation self-driving companies like Waymo and Cruise have (seemingly) doubled down on keeping the interfaces between perception, planning, and control modular, and are continuing to use HD maps combined with LIDAR for driving. On the other hand, companies like Wayve and comma.ai are shooting for an end-to-end learned approach where perception translates directly to control using RL or something similar. Tesla falls somewhere in the middle but currently seems to be in the former category.
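
To make the distinction concrete, here's a toy sketch of the two shapes of system. Everything in it (the `Obstacle` and `Control` types, the function names, the thresholds) is made up for illustration and doesn't reflect any company's actual stack. The point is only that the modular version passes hand-specified intermediate data between stages, while the end-to-end version is a single learned function from raw sensor input to control.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Control:
    steering: float  # illustrative units; positive = steer left
    throttle: float  # 0..1


@dataclass
class Obstacle:
    x: float  # metres ahead of the car
    y: float  # metres left of lane centre


# --- Modular stack: each stage has an explicit, human-designed interface ---

def perceive(lidar_points: List[Tuple[float, float]]) -> List[Obstacle]:
    # Toy perception: treat every return within 30 m ahead as an obstacle.
    return [Obstacle(x, y) for x, y in lidar_points if 0.0 < x < 30.0]


def plan(obstacles: List[Obstacle]) -> float:
    # Toy planner: pick a lateral offset that moves 1 m away from the
    # nearest obstacle sitting in our lane, or stay centred if there is none.
    in_lane = [o for o in obstacles if abs(o.y) < 1.0]
    if not in_lane:
        return 0.0
    nearest = min(in_lane, key=lambda o: o.x)
    return -1.0 if nearest.y >= 0 else 1.0


def control(target_offset: float) -> Control:
    # Toy controller: proportional steering toward the planned offset.
    return Control(steering=0.1 * target_offset, throttle=0.3)


def modular_drive(lidar_points: List[Tuple[float, float]]) -> Control:
    return control(plan(perceive(lidar_points)))


# --- End-to-end: one learned policy maps raw sensors straight to control ---

def end_to_end_drive(
    policy: Callable[[List[float]], Tuple[float, float]],
    pixels: List[float],
) -> Control:
    # `policy` stands in for a trained network; no hand-built
    # intermediate representation is exposed between input and output.
    steering, throttle = policy(pixels)
    return Control(steering, throttle)
```

In the modular version you could swap out `plan` without touching `perceive`; in the end-to-end version there's no such seam, which is roughly what this market is asking about.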

This question will resolve to "Yes" if a company clearly using the latter approach is the first to deploy self-driving cars in 50 cities. It will also resolve to "Yes" if one of the companies in the former group pivots and uses mostly or entirely end-to-end approaches in its deployed production systems. It resolves to "No" if a member of the former category reaches 50 cities without changing its approach. There's also a large space of scenarios in which the resolution is uncertain; in those cases it will resolve to a probability when the first self-driving company reaches 50 cities. I'll use my own discretion combined with helpful comments to determine the resolution.

I've set the resolution date to 2035, but that's mostly just to be far enough out; I'll change it if it still hasn't happened by then.

https://www.understandingai.org/p/elon-musk-wants-to-dominate-robotaxisfirst

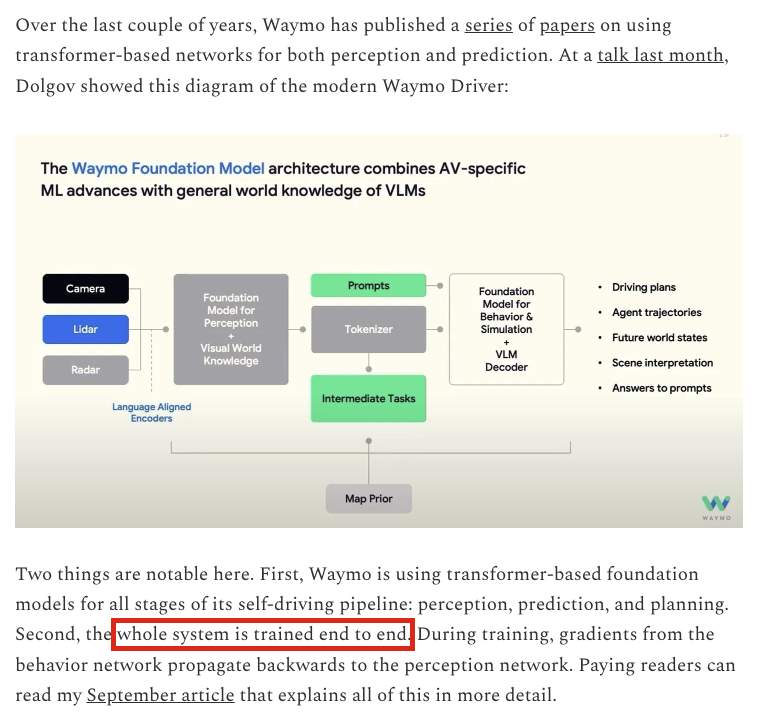

It seems pretty clear that Waymo has published an update indicating that their model is now end-to-end by the definition of this market.

Here's Tim Lee's paid article with even more detail and here's the segment of the talk he was linking to.

@DavidFWatson I agree that, if this is an accurate description of their architecture, it would count. If, when we get close to 50 cities, this is still the best source, I'll pay to read the full thing to adjudicate. Admittedly, I'm still hoping that by then there'll be something more authoritative on Waymo's (future) current approach.

@DavidFWatson yep, if EMMA were operating in their vehicles as the primary system, this market would resolve yes. Currently, based on the article (at least as I read it), it seems like it's not. Do you agree with this assessment? I agree it makes it more likely that by the time Waymo reaches 50 cities, they'll use such an approach though.

“Rich Sutton

March 13, 2019

The biggest lesson that can be read from 70 years of AI research is that general methods that leverage computation are ultimately the most effective, and by a large margin….

…. Seeking an improvement that makes a difference in the shorter term, researchers seek to leverage their human knowledge of the domain, but the only thing that matters in the long run is the leveraging of computation.

… Time spent on one is time not spent on the other. …. And the human-knowledge approach tends to complicate methods in ways that make them less suited to taking advantage of general methods leveraging computation.

… This is a big lesson. As a field, we still have not thoroughly learned it, as we are continuing to make the same kind of mistakes.

… We have to learn the bitter lesson that building in how we think we think does not work in the long run. The bitter lesson is based on the historical observations that 1) AI researchers have often tried to build knowledge into their agents, 2) this always helps in the short term, and is personally satisfying to the researcher, but 3) in the long run it plateaus and even inhibits further progress, and 4) breakthrough progress eventually arrives by an opposing approach based on scaling computation by search and learning.

… The second general point to be learned from the bitter lesson is that the actual contents of minds are tremendously, irredeemably complex; we should stop trying to find simple ways to think about the contents of minds, such as simple ways to think about space, objects, multiple agents, or symmetries. All these are part of the arbitrary, intrinsically-complex, outside world. They are not what should be built in, as their complexity is endless; instead we should build in only the meta-methods that can find and capture this arbitrary complexity.”

@Gigacasting I don't think that having modular AI systems is the same as "building human knowledge into the AI". For example, AlphaGo doesn't use built-in human knowledge, but does have a couple of separate modular AI systems: one model for evaluating states and another for picking out candidate moves, which isn't all that different from having separate subsystems for perception and planning, I think. AlphaGo might still count as end-to-end, I dunno, but my point is that having modules with distinct dedicated purposes is a different story from self-driving AI systems that have lots of hand-written rules/knowledge like you're talking about.

It looks more and more likely that Karpathy left because Tesla is taking the wrong path (hand-engineered, hard silos between perception and planning, coasting on compute and labeling budget instead of going end-to-end).