I will resolve this based on some combination of how much it gets talked about in elections, how much money goes to interest groups on both topics, and how much of the "political conversation" seems to be about either.

@MachiNi I agree the chart is uninformative, but remember this market is very subject to Scott Alexander's judgement. He has already said that he was wrong. Given his personality and interests, I'd be very surprised if something sufficiently dramatic happens to change his mind.

(I think somebody else already said it, but personally, I think abortion will have more people for whom it's an issue they feel very strongly about, but AI will have more money and more "political conversation". There's not much to say about abortion that hasn't already been said. Not so for AI.)

https://www.astralcodexten.com/p/links-for-december-2025

Scott writes (in #24):

"If this is to be taken seriously, AI is already a bigger political issue than abortion, climate change, or the environment. I fail my 2023 prediction that there was only a 20% chance this would happen by 2028."

I doubt he'll resolve early, but it sure sounds like a YES

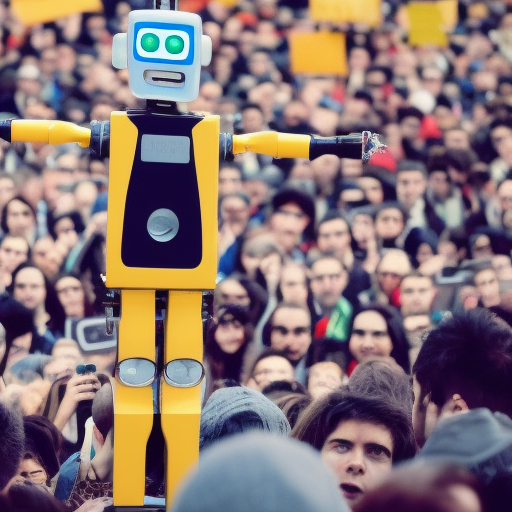

That survey doesn't prove what he thinks it does. It may be the case that when you list AI, people pick it because it sounds important, but if you were to just ask people what the biggest political issue is without listing any options, I think ~1% would say AI. Probably ~5% would say climate change and ~10% would say abortion. This goes back to the point that many of us have made earlier, which is that AI is a big issue but not a big political issue. There is no clear partisan split on any position related to AI, and most people have not formed any political opinion regarding AI. Is there even any mainstream slogan or symbol which represents a political opinion regarding AI? Have there been any large protests? Is there any AI voting bloc? There are tons of these for abortion and climate change.

@Dulaman I suspect it was based on bad guesses about the technical situation, not the political situation. In 2023 a surprising number of people seemed to have an implicit assumption that short-term technical progress wouldn't be a big deal, talking about near-term AI impacts in terms of 2023-AI. Today the situation is even worse: Some nontrivial fraction of people haven't even kept up with current progress, so their expectations for social impacts are essentially based on 2024-AI.

@Dulaman Same reason most people thought AI Safety, as a research field to prevent the threat of human extinction due to AI, was laughable and sci-fi 10 years ago. Humans are just terrible at extrapolating trends. Covid-19 is an excellent example of how dumb we are when it comes to computing mere exponential curves and preparing accordingly. Today, by contrast, AI Safety is discussed in the Pentagon as a legitimate concern with massive long-term military and geopolitical implications.

The early pioneers are always the crazies at first. Respect to them, because I was a huge sceptic back then as well: 10 years ago I would have lost $1000s betting that we would never see the AI capabilities we have today.

@CornCasting I think many of the people who were talking about AI Safety 10 years ago did a lot of damage to the cause of AI Safety

@Dulaman I bet no in 2023 and I'm still betting no. I think LLM progress is a logistic curve that we're already slowing down on, and better architectures will take time & research to find.

@DanW I think LLM progress is going to continue for some time, but other architectures will also appear. Are there other questions on Manifold that provide more granularity on these elements?

@Dulaman Couldn't agree more, with a huge caveat: blaming them for problems coming home to roost sounds like blaming Galileo for not using the cultural language that would become relevant 10 years later when he described the giant meteor he had discovered hurtling towards Earth. Like yes, 10 years later people look up, see possible signs that there might be a meteor, and are like "bro, why did you not say this 10 years ago in a language we understand and can take seriously?" But also, now do something about it, dipshits, instead of whining about the pioneer's off-putting communication 10 years ago; otherwise the blame is fully on you.

Which is why aisafety.dance might save us all. AI Safety concepts explained by a dancing cat-robot femboy maid. Truly the accepted language of the modern age. No silly outdated millenarianism in sight.