Preface:

Please read the preface for this type of market and other similar third-party validated AI markets here.

Third-Party Validated, Predictive Markets: AI Theme

Market Description

HellaSwag

HellaSwag is a dataset for studying grounded commonsense inference. It consists of 70k multiple-choice questions about grounded situations. Each question comes from one of two domains, ActivityNet or wikiHow, and has four answer choices about what might happen next in the scene. The correct answer is the real sentence for the next event; the three incorrect answers are adversarially generated and human-verified, so as to fool machines but not humans.

Example HellaSwag Question

A woman is outside with a bucket and a dog. The dog is running around trying to avoid a bath. She

a) rinses the bucket off with soap and blow dries the dog's head.

b) uses a hose to keep it from getting soapy.

c) gets the dog wet, then it runs away again.

d) gets into the bath tub with the dog.

Answer: C.
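To make the scoring concrete, here is a minimal sketch of how accuracy on a four-choice item like the one above could be computed. It assumes a model assigns one score per ending and picks the highest-scoring one; the `Item` class, the `predict`/`accuracy` helpers and the scores are hypothetical and for illustration only, not the official evaluation code of either leaderboard.

```python
from dataclasses import dataclass


@dataclass
class Item:
    context: str
    endings: list[str]  # the four candidate continuations
    label: int          # index (0-3) of the real next sentence


def predict(scores: list[float]) -> int:
    """Return the index of the highest-scoring ending (assumed scoring rule)."""
    return max(range(len(scores)), key=lambda i: scores[i])


def accuracy(items: list[Item], all_scores: list[list[float]]) -> float:
    """Fraction of items where the predicted ending is the real one."""
    correct = sum(predict(s) == item.label for item, s in zip(items, all_scores))
    return correct / len(items)


example = Item(
    context=("A woman is outside with a bucket and a dog. "
             "The dog is running around trying to avoid a bath. She"),
    endings=[
        "rinses the bucket off with soap and blow dries the dog's head.",
        "uses a hose to keep it from getting soapy.",
        "gets the dog wet, then it runs away again.",
        "gets into the bath tub with the dog.",
    ],
    label=2,  # answer C
)

# Made-up scores; a real evaluation would obtain these from a model.
scores = [[-12.4, -10.9, -8.1, -11.7]]
print(accuracy([example], scores))  # 1.0
```

Over the full test set, the number reported on a leaderboard is this same fraction of correctly answered items.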

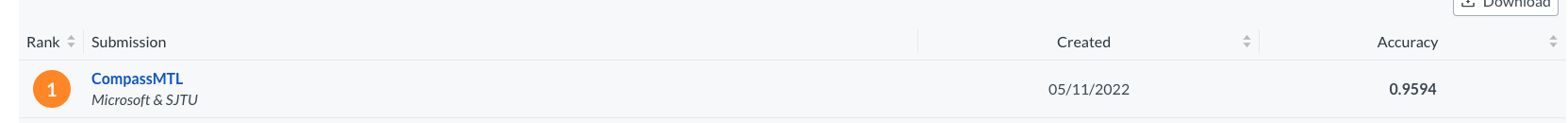

Two Leaderboards:

https://paperswithcode.com/sota/sentence-completion-on-hellaswag

https://leaderboard.allenai.org/hellaswag/submissions/public

Human performance is measured at 95.6% accuracy (0.9560).

Resolution Criteria

We will define Superintelligence for the purposes of this question as, "achieving 99% accuracy on the test in question."

Will any entry on either of the two leaderboards above reach 99% accuracy? If so, the market resolves YES; otherwise it resolves NO.
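The resolution rule can be sketched as a simple threshold check. This assumes leaderboard accuracies are collected by hand as fractions (e.g. 0.953 rather than 95.3) and that "achieving 99%" means a score of at least 0.99; the function name, dictionary keys and numbers below are hypothetical and are not real leaderboard data.

```python
RESOLUTION_THRESHOLD = 0.99  # 99% accuracy bar from the resolution criteria
HUMAN_ACCURACY = 0.9560      # reported human performance, for comparison


def resolves_yes(leaderboard_accuracies: dict[str, list[float]]) -> bool:
    """True if any entry on any tracked leaderboard reaches the threshold."""
    return any(
        acc >= RESOLUTION_THRESHOLD
        for entries in leaderboard_accuracies.values()
        for acc in entries
    )


# Made-up numbers for illustration only.
made_up = {
    "paperswithcode": [0.953, 0.958],
    "allenai": [0.940],
}
print(resolves_yes(made_up))  # False
```

Note that an entry can beat the human baseline (0.9560) without reaching 0.99, so "higher than human level" in the title is a looser bar than the one that actually resolves the market.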

2023-07-27: Changed the title from "Superintelligence" to "Higher than Human Level".


2024 version of this market: https://manifold.markets/PatrickDelaney/-will-any-ai-effectively-achieve-hi-62a7d1e40a77

@RobertCousineau There seem to be different interpretations of what the term "superintelligence" means. I originally used it because it is a widely recognized term, and I wanted to attract people to this market with a recognizable term... a meme, if you will. However, in the interest of not baiting traders, I changed it to "higher than human level performance" to be more accurate.

An aside: I won't title these markets directly after the benchmark they measure, because I have found from previous activity on Manifold that if the titles are too boring, no one bets on them. They have to be human-readable and approachable.

I had an objection from "decision theorist and widely recognized founder of A.I. Eliezer Yudkowsky, according to Time Magazine" 😂 to my use of the term "superintelligence" in another market, which made me think more critically about how I use it.

Wikipedia's current article on superintelligence quotes Nick Bostrom's definition:

any intellect that greatly exceeds the cognitive performance of humans in virtually all domains of interest

So by that definition, and taking a numerical-objectivism standpoint (meaning we assume benchmarks are the best way to describe reality, even if there is a benchmark gap), one could argue that surpassing human performance across the amalgamation of all currently active benchmarks is one way to define a superintelligence.

Yudkowsky's comment on my other market, which implies that "humans can't possibly create a test that measures superintelligence," reads as quasi-religious to me. I'm not going to entertain that line of thinking for the purposes of betting markets, because I think it's fairer to create third-party-validated markets and to be as non-subjective as possible wherever one can.