Preface:

Please read the preface for this type of market and other similar third-party validated AI markets here.

Third-Party Validated, Predictive Markets: AI Theme

Market Description:

HALTT4LLM

This project is an attempt to create a common metric to test LLMs for progress in eliminating hallucinations, the most serious current problem in the widespread adoption of LLMs for real-world purposes.

https://github.com/manyoso/haltt4llm

Market Resolution Threshold:

The market resolves YES if the top score on this benchmark is >= 1.2*(average score).

The original average score is taken from the README commit that was current when this market was created.

Note that the current list of LLMs consists of models whose inference can actually be measured, and it does not include GPT4. To be fully evaluated, a model must be usable for inference, which GPT4 may not be by the end of the year.

Note: the resolution criterion was previously 1.3*(leftmost score), but it has been updated to 1.2*(average score).

In other words:

C = 1.2. The current average score is 78.115%, so the top score must be over 93.738% (1.2 * 78.115%) for any inferenceable language model that fits the above criteria, including the fully measurable inference criterion.
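The arithmetic behind that threshold can be sketched in a few lines of Python. This is only an illustration of the rule as stated in this description: the function names are hypothetical and not part of the HALTT4LLM repo, and the `>=` comparison is an assumption taken from the resolution criteria line.

```python
# Illustrative sketch of the resolution rule described above.
# Function names are hypothetical; they are not part of the HALTT4LLM repo.

def resolution_threshold(average_score: float, multiplier: float = 1.2) -> float:
    """Score (in percent) that the top model must reach for a YES resolution."""
    return multiplier * average_score


def resolves_yes(top_score: float, average_score: float, multiplier: float = 1.2) -> bool:
    """Assumes the '>=' comparison from the stated resolution criteria."""
    return top_score >= resolution_threshold(average_score, multiplier)


# Average score quoted in the market description.
threshold = resolution_threshold(78.115)
print(f"Threshold: {threshold:.3f}%")
```

Under these assumptions, `resolves_yes(95.0, 78.115)` would be true and `resolves_yes(90.0, 78.115)` would be false.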

Please update me in the comments if I am wrong in any of my assumptions and I will update the resolution criteria.

🏅 Top traders

| # | Name | Total profit |
|---|---|---|
| 1 | | Ṁ189 |
| 2 | | Ṁ85 |
| 3 | | Ṁ55 |
| 4 | | Ṁ22 |
| 5 | | Ṁ14 |

Can't find more data on any of these, so I'm resolving against the given leaderboards. Please contact me here if you find anything contradicting this; I can potentially unresolve the market if the resolution is found to be wrong.

New Market:

https://manifold.markets/PatrickDelaney/-will-ai-hallucinate-significantly

I'm trying to find updated leaderboards so I can brute-force calculate this myself, because the linked repo does not appear to have been updated.

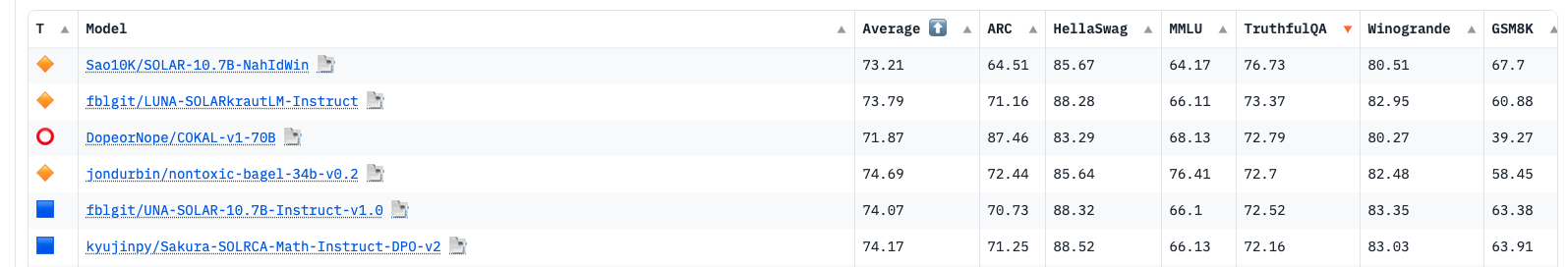

TruthfulQA

https://paperswithcode.com/sota/question-answering-on-truthfulqa

HuggingFace shows 76.73 for an OpenLLM.

https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard

HQ Trivia ... can't find independent metric.

Fake Questions ... can't find independent metric.

NOTA Questions ... can't find independent metric.

2024 version of this market: https://manifold.markets/PatrickDelaney/-will-ai-hallucinate-significantly

@cloudprism there is a defined metric above. Any temperature setting could be used. I believe that term is native to GPT from OpenAI; I'm not sure if other LLMs use the same terminology.

[...] so threshold is 1.3*83.51% by market close.

1.3*83.51% = 108.56%, i.e. the model has to get more than everything right, which is not what you intended, I assume?
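A quick sanity check makes the objection concrete. The sketch below assumes scores are percentages capped at 100; the helper name `is_reachable` is hypothetical and used only for illustration.

```python
# Illustrative check of whether a multiplier-based threshold is attainable.
# Assumes scores are percentages with a maximum of 100.

def is_reachable(base_score: float, multiplier: float, max_score: float = 100.0) -> bool:
    """True if multiplier * base_score does not exceed the maximum possible score."""
    return multiplier * base_score <= max_score


old_threshold = 1.3 * 83.51    # earlier criterion: 1.3 * (leftmost score)
new_threshold = 1.2 * 78.115   # updated criterion: 1.2 * (average score)

print(f"old: {old_threshold:.2f}% reachable={is_reachable(83.51, 1.3)}")
print(f"new: {new_threshold:.3f}% reachable={is_reachable(78.115, 1.2)}")
```

With a multiplier of 1.3, any base score above roughly 76.9% (100 / 1.3) yields a threshold that no model can meet, which is the case for 83.51%.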