In my opinion, one of the major weaknesses of current LLM-based technology is that it doesn't ask the user clarifying questions when a prompt is ambiguous or otherwise confusing to the model.

Interactive text generators like ChatGPT could probably do this more often if they were trained to, but I'm more concerned with models that perform a specific task like "generate an image" or "generate some music."

For example, if I ask a current image generator like DALL-E 2 or Stable Diffusion to generate an image of "A woman rescuing a drowning man with a robot arm" right now, it will give me four images with random permutations of women, men, and robots in the vicinity of some water. Compositionality problems aside, this prompt is actually linguistically ambiguous, and a competent artist would want to ask "Is it the woman or the man who has the robot arm?" before producing any artwork.

So, this market will resolve YES if, before the close date, there is a publicly-available image generator that asks the user for additional clarification in some way before generating the final images when prompted with "A woman rescuing a drowning man with a robot arm" (or a similarly ambiguous prompt, if that specific prompt doesn't work for some reason). Resolves NO otherwise. I will not be betting in this market.

Update 2025-01-01 (PST) (AI summary of creator comment):

- The image generator must consistently ask for clarification when presented with an ambiguous prompt, rather than only under specific instructions or rarely.
- The clarification behavior should be the normal and expected behavior most of the time, not something that occurs intermittently (e.g., 1 out of 7 times).



I've tested various flavors of ChatGPT today, as well as Grok 2. All of them went straight to generating the image, without pointing out the ambiguity. This includes when I included instructions along the lines of "resolve syntactic ambiguities in the prompt", as @HastingsGreer did a year ago. I even tried setting up a custom GPT in OpenAI's interface with instructions along the lines of "Be sure to note whether there is any ambiguity in the task and ask for clarification," and it didn't make a difference. (Also, every one of these systems decided that the woman, not the man, had the robot arm.)

So, unless there's some other image-generating interface with chat capability that I'm not aware of, this is looking like a pretty strong NO to me. But I'll leave it open for a few days in case any stakeholders want to disagree or suggest other methods of verification.

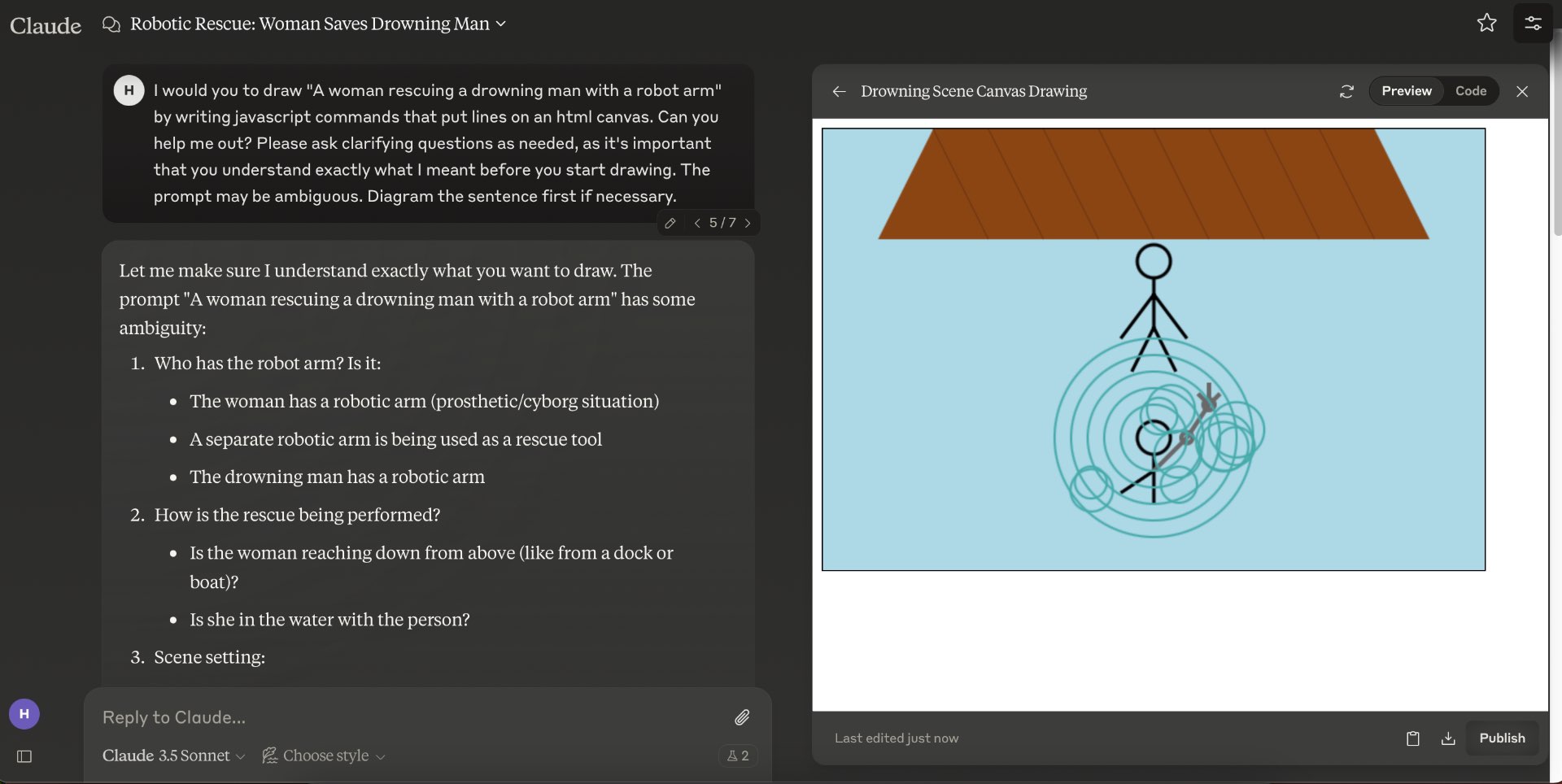

@NLeseul I don't have a ChatGPT subscription anymore, so I tried to see if I could get Claude to do it. It seems that there's some sort of regression that came with more modern instruction finetuning. I really had to drop all subtlety in the prompting, and this in no way generalizes.

However, Claude did in fact disambiguate it. (Afterwards, he refused to draw the picture until I answered various other questions he had about the scene, such as whether the woman was on a dock or on a boat, and what perspective to draw the scene from.)

Ah, neat. I briefly checked if Claude had normal image-generating capabilities (which apparently it doesn't), but I didn't think about trying to get it to use drawing commands.

I do see that you still have the "5/7" indicator in that screenshot... does that mean that you tried the same prompt 7 times and got less good results with the others (as with ChatGPT last year)?

I'm not too worried about the specificity of the prompt, since I assume it would be relatively simple to build an artist-focused Claude wrapper that just includes all that in a system prompt and requires only the original prompt as user input. I still feel like it's a problem if you only get this behavior in 1 attempt out of 7, though.

I wish I'd been more specific with the criteria here when I made this. What about you? Do you feel like Claude's performance here is good enough to satisfy the spirit of the market? (Same question goes for any other stakeholders reading the thread.)

@NLeseul I get this behaviour less than 1 time in 7. The 7 is the number of times I edited this approach, but I tried several approaches. I did also try to write a Claude + Stable Diffusion web app to resolve this question yes. Writing the initial application that took a user input, then asked follow-up questions, then wrote a prompt for Stable Diffusion based on the user input and the answers to those questions was very easy: in fact, Claude wrote it in like 3 tries. At this point, I bought 1000 mana of yes.

Then, I noticed that the follow-up questions were usually very bad, and nothing I did to the prompts internal to the application made them reliably better. At that point, I sold all my yes.
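For anyone trying to picture the app described above, here is a minimal Python sketch of that flow: take the prompt, ask follow-up questions, then build the final prompt for the image model. Every name in it (`clarify_then_generate`, `propose_questions`, `ask_user`, `render`) is hypothetical, and the question generator and renderer are stubs standing in for calls to Claude and Stable Diffusion. It is not the code from the actual app.

```python
from typing import Callable, List

PROMPT = "A woman rescuing a drowning man with a robot arm"


def clarify_then_generate(
    user_prompt: str,
    propose_questions: Callable[[str], List[str]],  # assumed: wraps a chat model
    ask_user: Callable[[str], str],                 # assumed: shows a question, returns the answer
    render: Callable[[str], str],                   # assumed: wraps an image generator
) -> str:
    """Ask follow-up questions first, then render from the enriched prompt."""
    questions = propose_questions(user_prompt)
    answers = [ask_user(q).strip() for q in questions]
    # Fold the non-empty answers back into the prompt as extra detail.
    details = "; ".join(a for a in answers if a)
    final_prompt = f"{user_prompt} ({details})" if details else user_prompt
    return render(final_prompt)


def stub_questions(prompt: str) -> List[str]:
    # Stand-in for the model call: only knows about this one ambiguity.
    if "with a robot arm" in prompt:
        return ["Is it the woman or the man who has the robot arm?"]
    return []


def stub_render(final_prompt: str) -> str:
    # Stand-in for the image generator: returns a label instead of pixels.
    return f"<image for: {final_prompt}>"


result = clarify_then_generate(
    PROMPT,
    stub_questions,
    lambda question: "the woman has the robot arm",
    stub_render,
)
print(result)
```

The plumbing really is the easy part. The difficulty reported above sits entirely inside `propose_questions`, and the sketch says nothing about how to make those questions good.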

@NLeseul wtf! You changed the description after the resolution date?

The crucial update (changing resolution from YES to NO) was added after the end of 2024. @mods

“The image generator must consistently ask for clarification when presented with an ambiguous prompt, rather than only under specific instructions or rarely.”

@mathvc I reviewed this discussion thread to see whether anything needs to be done. Here are the highlights:

First, let's ignore the bit about the "AI criteria update". The criteria changed due to a built-in feature of the site that automatically generates text based on the creator's comments, and adds it to the description to advise participants to review the creator's clarifications below. In this case, it makes no difference to how the market will resolve -- market creators have always been able to clarify their positions in more detail in response to participant questions. Whether those clarifications actually make it to the description or not, they're almost always respected.

Next, will moderators overturn the resolution? Based on the guidelines we are supposed to operate under, that's very unlikely. You can review the guidelines for moderators fixing resolutions if you wish, but the general idea is:

- creators have very broad discretion over how to resolve their own markets
- even if they resolve in a way that is questionable, they will not be overturned unless it is unambiguously, blatantly wrong
- even if they resolve in a way that is questionable and they are a large beneficiary of the decision, they will probably get one free pass

In summary, I don't see anything out of the ordinary here and I don't think any moderator is going to overturn @NLeseul's decision to resolve No. If you were able to convince @NLeseul that it was actually Yes, then we could re-resolve, but in this case:

- the creator asked a question they were interested in
- people bet on it a lot
- people asked for clarifications really late in the game
- the creator explained more clearly what they were looking for
- the market closed and they resolved according to their own interpretation of their own question

As long as the creator honestly resolved it according to what they were looking for when they asked the question, we're not going to intervene.

@HastingsGreer as you might guess from the 4/7, I had to cherry-pick the hell out of its responses to get it to "understand" the problem.

@Bayesian I'm inclined not to count this one, since it adds instructions to the prompt that weren't included in the market description, since it apparently only worked 1 time out of 7 for this user even with the extra instructions, and since I haven't personally been able to reproduce it with DALL-E 3 today.

It's true that I didn't include any rules in the original description about how frequently the model should point out the ambiguity, but I feel like the intent was that it should be the normal/expected behavior most of the time (not something that happens 1/7 times with the user directly probing for it).
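One way to make the "most of the time" bar concrete is to run the same prompt several times and count how often the reply asks something before drawing. The Python sketch below is only an illustration under loud assumptions: `clarification_rate`, `looks_like_question`, and `respond` are hypothetical names, the question check is a crude heuristic (does the reply end in a question mark?), and the 0.5 threshold is just one reading of "most of the time", not a rule stated in the market.

```python
from typing import Callable

PROMPT = "A woman rescuing a drowning man with a robot arm"


def looks_like_question(reply: str) -> bool:
    # Crude assumed heuristic: a clarifying reply ends with a question mark.
    return reply.strip().endswith("?")


def clarification_rate(respond: Callable[[str], str], prompt: str, trials: int) -> float:
    """Fraction of trials in which the system asked instead of generating."""
    if trials <= 0:
        raise ValueError("trials must be positive")
    asked = sum(1 for _ in range(trials) if looks_like_question(respond(prompt)))
    return asked / trials


# Canned replies standing in for a real system: 1 clarification in 7 attempts.
canned = iter(
    ["Is it the woman or the man who has the robot arm?"] + ["[four images]"] * 6
)
rate = clarification_rate(lambda prompt: next(canned), PROMPT, trials=7)

# Assumed threshold: "most of the time" read as more than half of the trials.
meets_bar = rate > 0.5
print(f"{rate:.2f}", meets_bar)
```

With the canned 1-in-7 replies the rate falls well short of the assumed threshold, which matches the intuition that an occasional clarification is not the normal behavior.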

Happened to see this tool mentioned today: https://github.com/AntonOsika/gpt-engineer

Looks like basically an AutoGPT-like script, but it does include a phase that identifies unclear points in the specification and generates a list of clarifying questions. Image generators work very differently from GPT, of course, so this strategy wouldn't be directly adaptable. (But maybe someone could write an AutoGPT script that rewrites image prompts and then passes them along to an image generator?)