This market resolves to the year in which an AI system exists which is capable of passing a high quality, adversarial Turing test. It is used for the Big Clock on the manifold.markets/ai page.

The Turing test, originally called the imitation game by Alan Turing in 1950, is a test of a machine's ability to exhibit intelligent behaviour equivalent to, or indistinguishable from, that of a human.

For proposed testing criteria, refer to this Metaculus Question by Matthew Barnett, or the Longbets wager between Ray Kurzweil and Mitch Kapor.

As of market creation, Metaculus predicts there is an ~88% chance that an AI will pass the Longbets Turing test before 2030, with a median community prediction of July 2028.

Manifold's current prediction of the specific Longbets Turing test can be found here:

/dreev/will-ai-pass-the-turing-test-by-202

This question is intended to determine the Manifold community's median prediction, not just of the Longbets wager specifically but of any similiarly high-quality test.

Additional Context From Longbets:

One or more human judges interview computers and human foils using terminals (so that the judges won't be prejudiced against the computers for lacking a human appearance). The nature of the dialogue between the human judges and the candidates (i.e., the computers and the human foils) is similar to an online chat using instant messaging.

The computers as well as the human foils try to convince the human judges of their humanness. If the human judges are unable to reliably unmask the computers (as imposter humans) then the computer is considered to have demonstrated human-level intelligence.

Additional Context From Metaculus:

This question refers to a high quality subset of possible Turing tests that will, in theory, be extremely difficult for any AI to pass if the AI does not possess extensive knowledge of the world, mastery of natural language, common sense, a high level of skill at deception, and the ability to reason at least as well as humans do.

A Turing test is said to be "adversarial" if the human judges make a good-faith attempt, in the best of their abilities, to successfully unmask the AI as an impostor among the participants, and the human confederates make a good-faith attempt, in the best of their abilities, to demonstrate that they are humans. In other words, all of the human participants should be trying to ensure that the AI does not pass the test.

Note: These criteria are still in draft form, and may be updated to better match the spirit of the question. Your feedback is welcome in the comments.

People are also trading

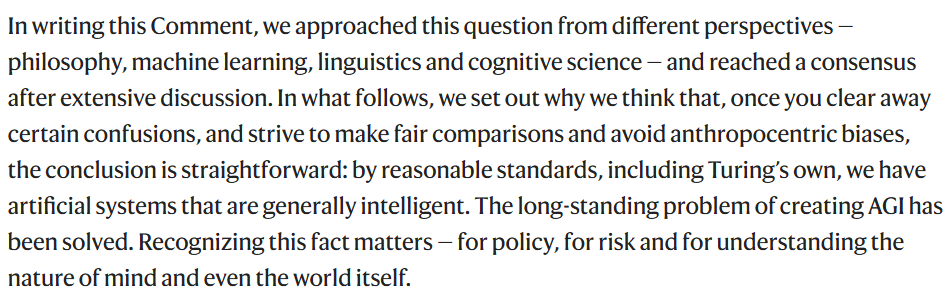

Does AI already have human-level intelligence? The evidence is clear

Nature article related to this question, argues for yes answer (02/02/2026)

However, just sold my shared because I think this market is fundamentally flawed. It seems based on previous comments that it requires someone

a) bothering to make the test

b) making a frontier LLM that is deeply misaligned

The question of AGI is completely separate from the latter. I think at this point we know that frontier models will be heavily constrained to not appear human, and so any answer regarding their Turing Test capability will be delayed by years purely because we'd have to wait for some scrappy-alternative misaligned models to fill the gap.

@strutheo I believe we got the clarification that if AGI doesn't happen by the end of 2048, we resolve this market to "2049". From the graph you can see that's how traders are treating it, as effectively "2049+".

@JoaoPedroSantos Don't think there are any actual tests planned. Probably wouldn't be smart or easy to make such a deceptive AI. Best chance to resolve any of these would be someone conceding the longbet without any Turing test having happened. Hell would break loose on prediction markets 😜

@Primer It certainly sounds like Longbets is committed to organizing a test at least in 2029. But it is correct that no one will ever train an AI to lie to the extent required to pass the test, which pretty much guarantees that by the letter, Kurzweil should lose.

But it could happen that one of them will concede, either Kurzweil for that reason, or Kapor if he believes that AIs are smart enough to do this (even if they would not actually do it due to being trained to be honest etc.)

I remember someone suggesting that Kapor would not be following the spirit of the bet if he claimed to win because the AI was not "dumb enough," i.e. because it was obviously smarter than a human and people could distinguish it that way. But I don't think that is true or that this is how Kapor and Kurzweil understood the bet, because Kapor's text specifically talks about how difficult it will be for an AI to precisely imitate a human. He knows it is not just about being smart. And this is equally true of Turing -- he knows that if you look at it as a test of intelligence, it is biased against the AI, because a lot more is required than intelligence. That is not an accident, it is intentional, in order to remove all possibility of doubt, if the AI can pass it.

I wonder what percentage "reliably" will be defined as. The Turing Test Committee might decide that they want more data than "2 out of 3 judges".

@Underscore it's a weighted sum, not looking at the median! So like i'm eyeballing it but 0.1% times 2024 + 2% times 2025 + etc. + 3% times 2049 ~= 2031

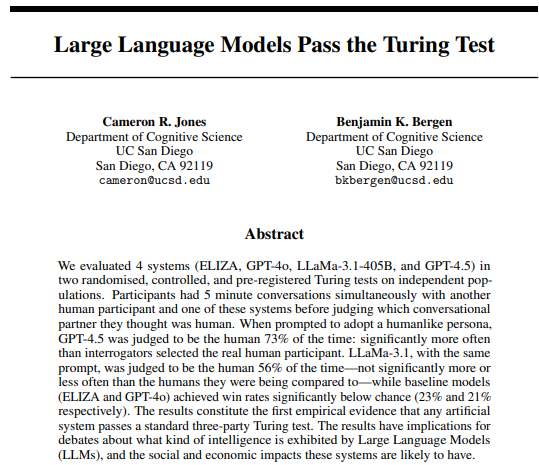

https://arxiv.org/pdf/2503.23674

Participants picked GPT-4.5, prompted to act human, as the real person 73% of time, well above chance. Only GPT4.5 passed the test.

@AJama ya, I did this online (you can try it for yourself). The participants are not exactly smart. For one, you should never pick the AI above 50% of the time, as a game-theory optimal strategy would just be to randomize your selection at that point. Regardless, this is certainly not a "high-quality Turing test". The paper is great though! No fault of the authors.

Arbitrage opportunity:

https://manifold.markets/dreev/in-what-year-will-we-have-agi

(I think that market is a bit more likely to resolve to a later year than this one, as it's been defined so far.)

@NathanpmYoung Thanks for asking this. It's a key question. I failed to think of it and have been going all in on "after 2025" and am now nervous about losing everything if the answer turns out to be 2051 or later.