If a large language model beats a super grandmaster (classical Elo above 2,700) while playing blind chess by 2028, this market resolves to YES.

I will ignore fun games, at my discretion (say, a game where Hikaru loses to ChatGPT because he played the Bongcloud).

Some clarification (28th Mar 2023): This market grew fast with an unclear description. My idea is to check whether a general intelligence can play chess without being created specifically for doing so (just as humans aren't chess-playing machines). Here are some points from my previous comments.

1- To decide whether a given program is an LLM, I'll rely on the media and the nomenclature its creators give it. If they choose to call it an LLM or some related term, I'll consider it one. Conversely, a model that markets itself as a chess engine (or is called one by the mainstream media) is unlikely to qualify as a large language model.

2- The model can write as much as it wants to reason about the best move. But it can't have external help beyond what is already in the weights of the model. For example, it can't access a chess engine or a chess game database.

I won't bet on this market and I will refund anyone who feels betrayed by this new description and had open bets by 28th Mar 2023. This market will require judgement.

Update 2025-21-01 (PST) (AI summary of creator comment):

- LLM identification: A program must be recognized by reputable media outlets (e.g., The Verge) as a Large Language Model (LLM) to qualify for this market.
- Self-designation insufficient: Simply labeling a program as an LLM without external media recognition does not qualify it as an LLM for resolution purposes.

Update 2025-06-14 (PST) (AI summary of creator comment): The creator has clarified their definition of "blind chess". The game must be played with the grandmaster and the LLM communicating their respective moves using standard notation.

Update 2025-09-06 (PST) (AI summary of creator comment):

- Time control: No constraints. Blitz, rapid, classical, or casual online games all count if other criteria are met.
- “Fun game” clause: Still applies, but the bar to exclude a game as "for fun" is high; unusual openings or quick, unpretentious play alone don't make it a "fun" game.
- Super grandmaster: The opponent must have the GM title and a classical Elo rating of 2700 or higher.

Update 2025-09-11 (PST) (AI summary of creator comment): - Reasoning models are fair game (subject to all other criteria).

Update 2025-09-13 (PST) (AI summary of creator comment): Sub-agents/parallel self-calls

An LLM may spawn and coordinate multiple parallel instances of itself (same model/weights) to evaluate candidate moves or perform tree search, including recursively. This is considered internal reasoning and is allowed.

Using non-LLM tools or external resources (e.g., chess engines like Stockfish, databases) remains disallowed.

Update 2025-12-20 (PST) (AI summary of creator comment): Coding a chess engine is not allowed: If an LLM codes up a chess engine (e.g., in Python) and uses it to play, this does not count for resolution. The creator is interested in chess-playing ability as an emerging characteristic of the LLM itself, not through reliance on coded external tools.


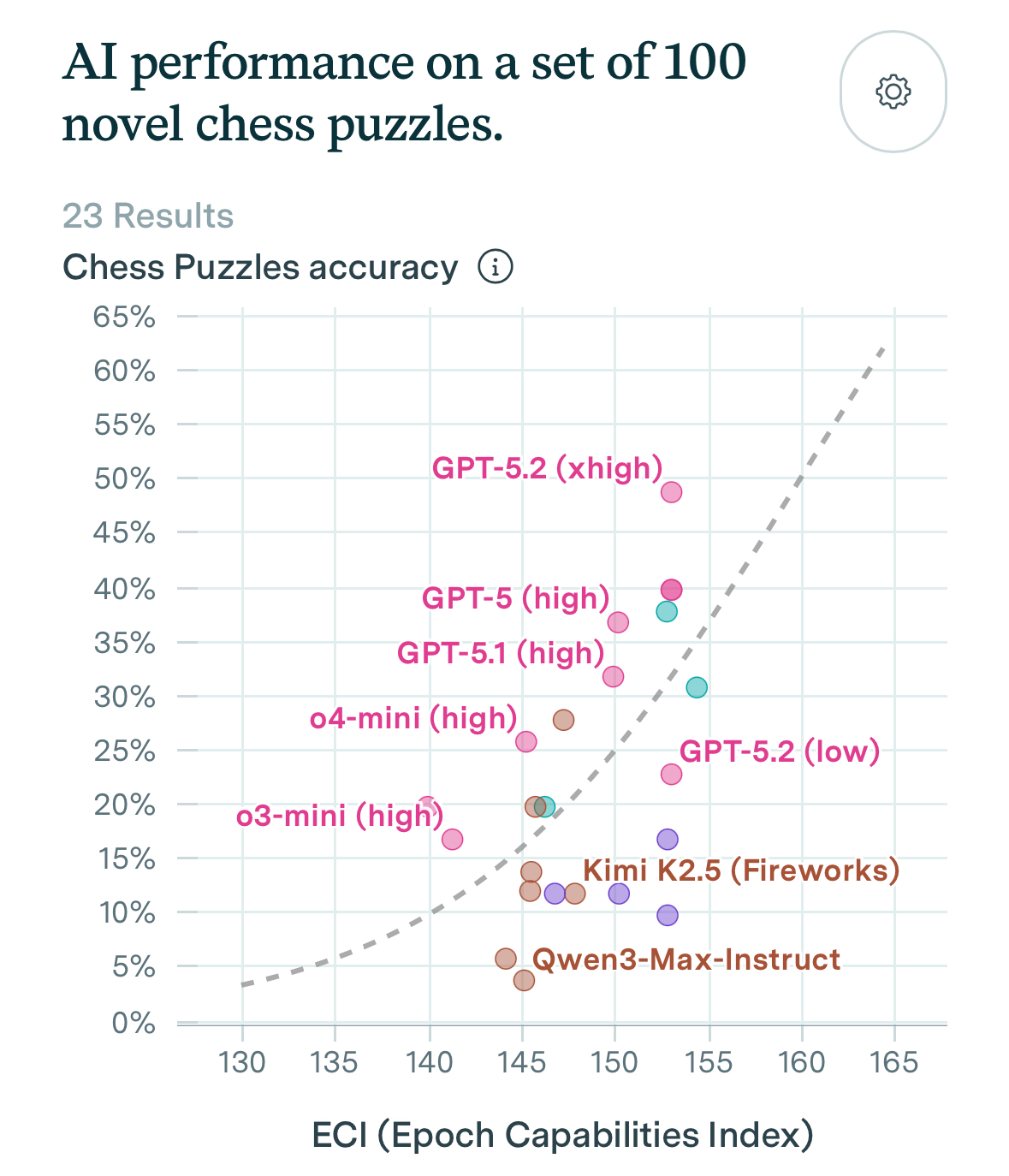

Epoch now has a chess puzzle index. At the current pace, we will saturate this benchmark in late 2027. My feeling is that saturating it likely corresponds to a good club player.

Claude Opus 4.6 thinks saturating the benchmark means 2200-2400 elo, while Grok 4.1 thinks it means 2400-2800 elo.

My feeling is that a 2800 rating seems too much, and if it happens, it will only be in late 2028, with the risk that no GM plays the match.

Even then, LLMs still need to keep track of the board in our market, and they are vulnerable to grandmasters playing lines that they are particularly bad at.

Market seems too optimistic.

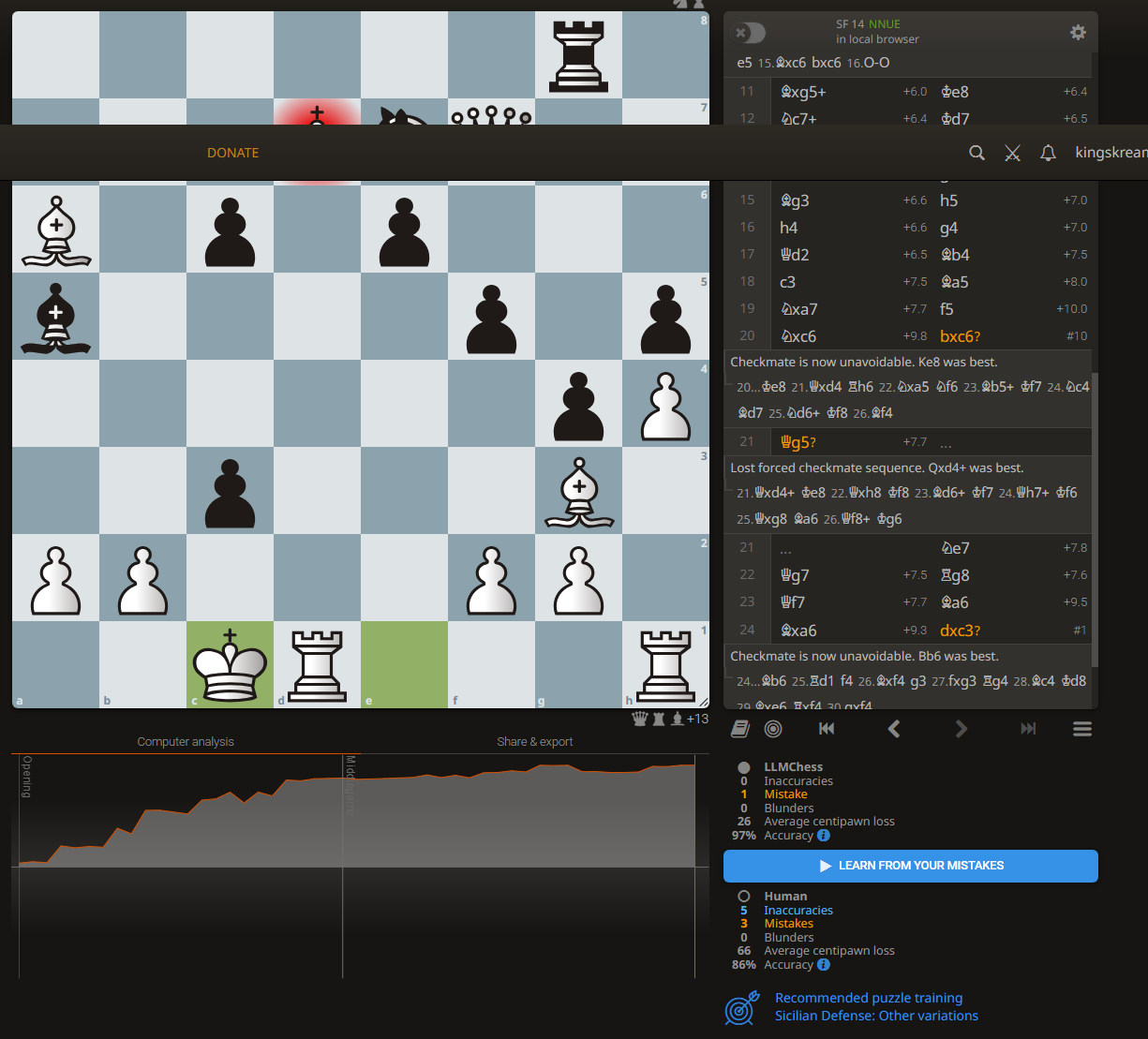

Put 4.5 Opus into a 20 game match against 1800 Stockfish. Opus's success was that it managed to get checkmated in 2 of the 20 games, instead of, you know, just making illegal moves.

@AhronMaline im too tired to apply reading comprehension but this seems to be what youre looking for https://manifold.markets/MP/will-a-large-language-models-beat-a#ayre9ue4687

@AhronMaline No, I have said multiple times that relying on coding doesn't count. I am interested in playing chess at Super GM level as a(n)(emerging) characteristic of the LLM itself.

I made an LLMChess website based on a couple 50M transformer models from here (2 years ago):

https://huggingface.co/adamkarvonen/chess_llms/tree/main

Here's the MVP version that shows the probabilities based on the logits (turn off turbo mode, but much slower cause it's running on CPU on free tier, runs ok on a GPU) https://chess-llm-316391656470.us-central1.run.app/

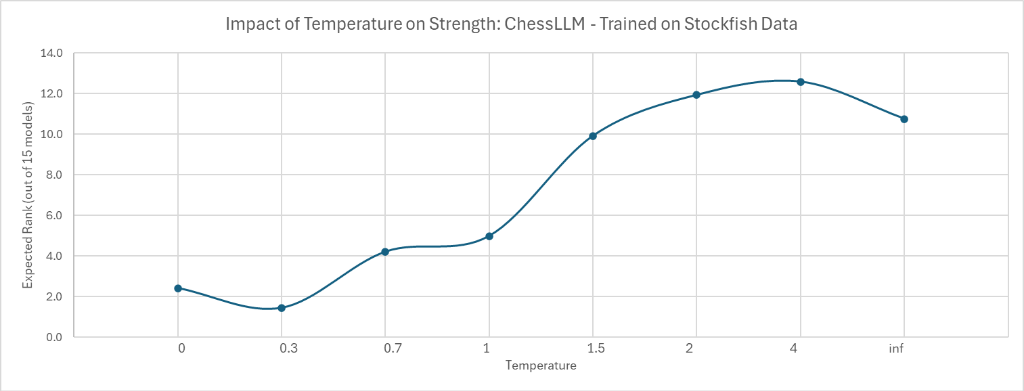

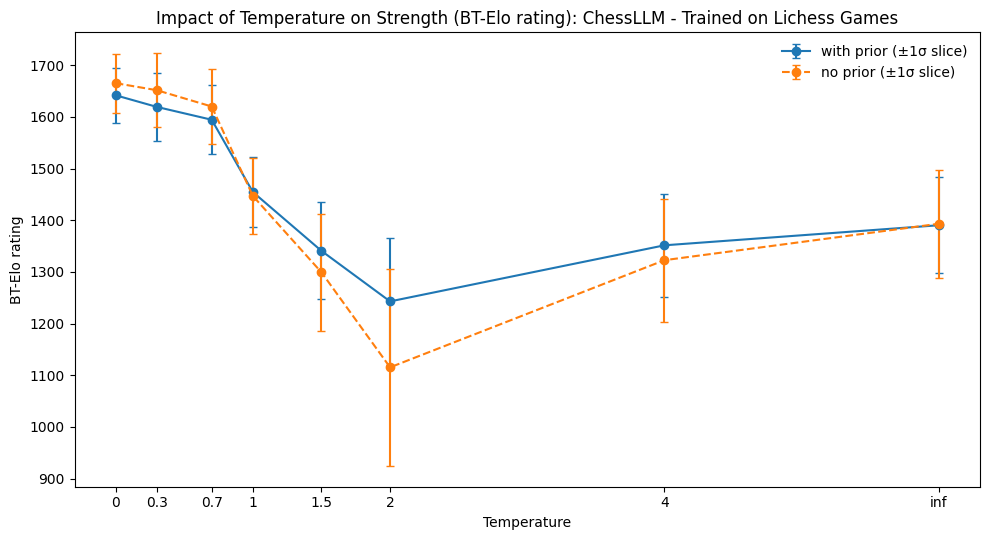

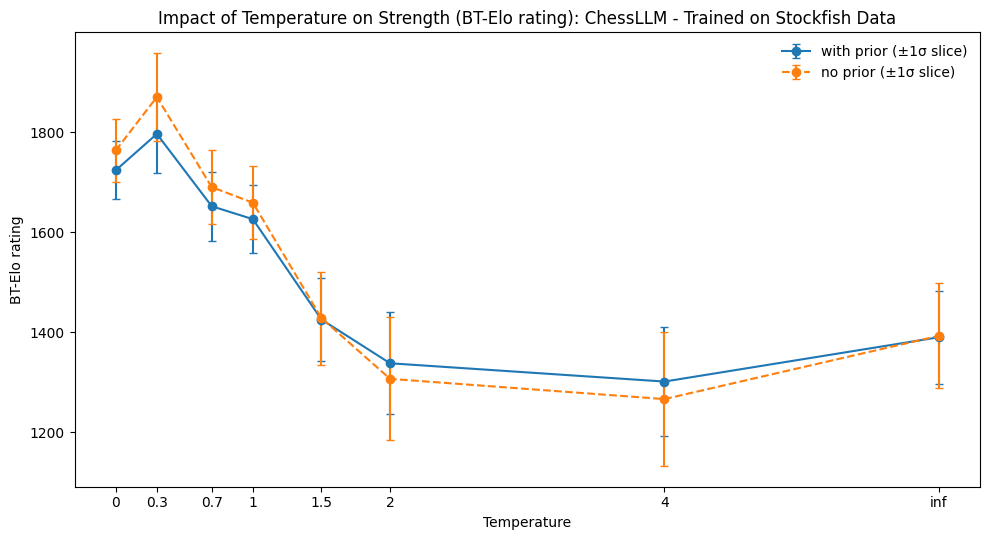

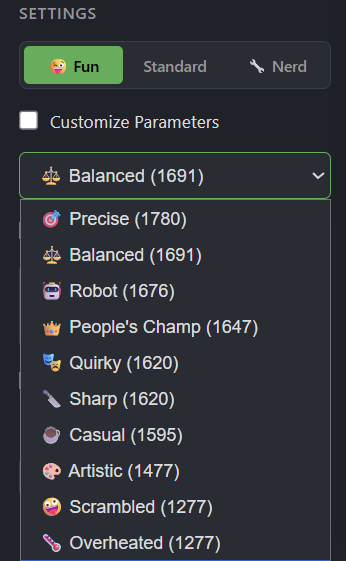

For the Stockfish model, it's best at temp 0.3, and for the Lichess model it's best at temp 0.0 (worse than the Stockfish model).

I'd rate it around 1400, it did have an impressive 25-move castle checkmate against me (~1600 OTB, mostly play bullet online, ~2100 Lichess) within my first day (e.g. 5 games or so) of experimenting with it, albeit in a rudimentary GUI that meant I was essentially playing blindfolded too.

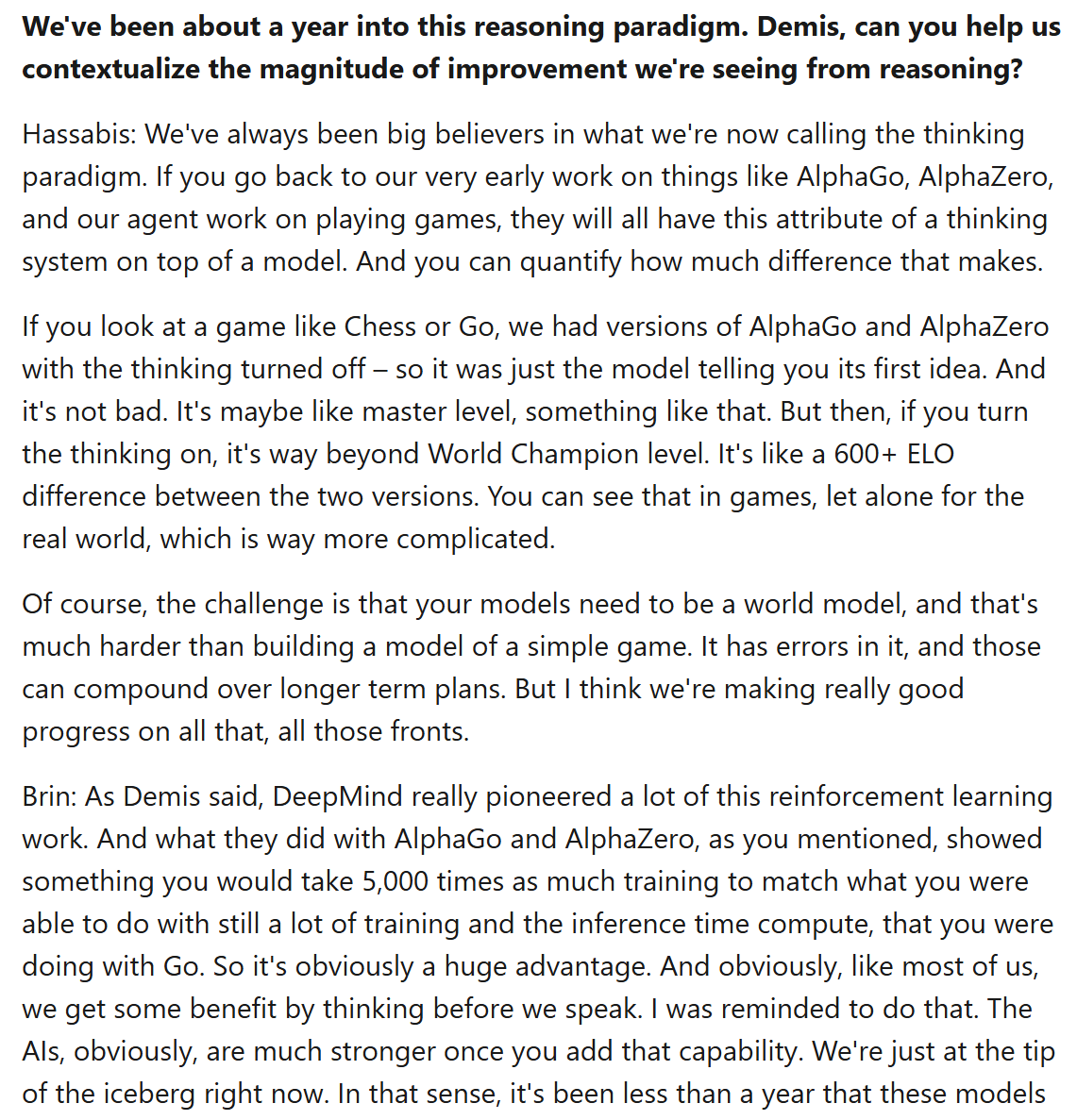

What I don't understand: if AlphaZero (succeeded by LeelaChessZero) proved that a CNN with fewer inductive biases works with scale (compared to Stockfish, which scales in the number of nodes searched rather than innate "intelligence" hardcoded in its parameters), shouldn't transformers (even fewer biases) scale better? Or do we think that chess has a fundamental upper limit in skill without search (perhaps IM level of ~2400, see https://lichess.org/@/Leela1Node , which is a DNN w/o search, and Demis Hassabis' comments here: https://youtu.be/M2ZtBQI2-GY?t=273 )? Then again, the reasoning paradigm enables search now, and the time to saturation for most benchmarks is ~1-3 years (e.g. HLE, ARC-AGI, AIME, GPQA, SWE-Bench etc.).

I haven't benchmarked this 50M model against actual general LLMs (apparently 1800 here: https://dubesor.de/chess/chess-leaderboard , which is surprising since in DeepMind's Kaggle tournament this summer they all played at a 500 level, so I'm guessing this guy set up the harness favorably vs the open-ended version DeepMind had). But I also think the accuracy metric is super flawed, since LLMs are perfect when in distribution but need entropy (e.g. playing and accumulating results (W/D/L) vs humans) to accurately calibrate Elo, rather than "benchmaxxing" by using Stockfish (likely in its training data) as the judge.

Anyways, any feedback on my prototype app would be well received cause in my personal opinion it's more "enjoyable (satisfying to beat) / human-like" compared to most AI bots (e.g. on chess.com, or even most bullet games I play online are less chess, more tricks / time scrambles)

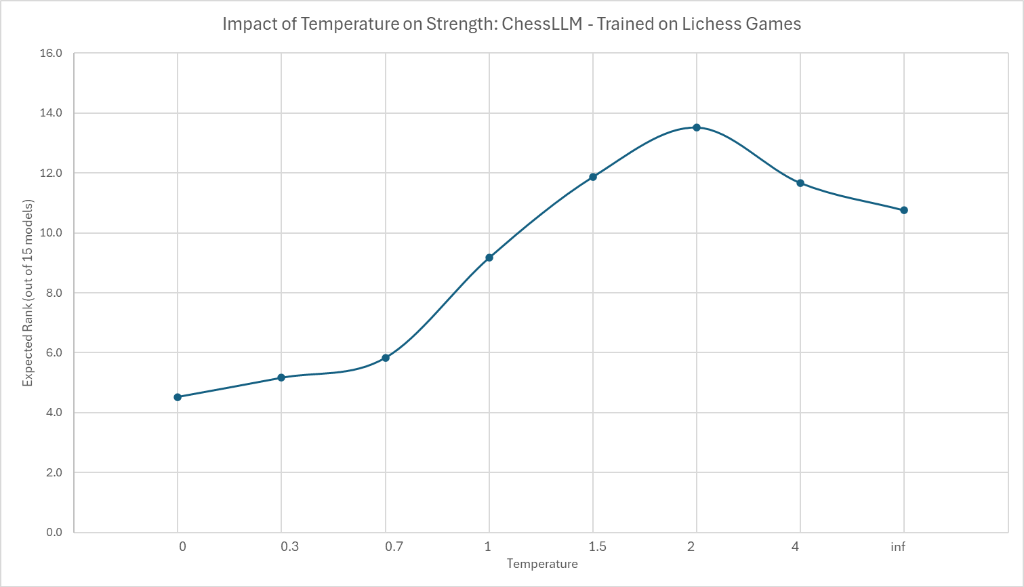

I ran a tournament with the two models at 7 different temp values (0, 0.3, 0.7, 1, 1.5, 2, 4), plus a near-infinite "exploration" setting (999). Note the baseline is set at 1500, so the ratings may need to be shifted.

| Rank | Configuration | Elo | W | D | L | Pts |
|---|---|---|---|---|---|---|
| 1 | Stockfish T=0.3 | 1780 | 22 | 4 | 2 | 24.0 |
| 2 | Stockfish T=0.7 | 1691 | 19 | 5 | 4 | 21.5 |
| 3 | Stockfish T=0 | 1676 | 17 | 8 | 3 | 21.0 |
| 4 | Lichess T=0 | 1647 | 14 | 12 | 2 | 20.0 |
| 5 | Lichess T=0.3 | 1620 | 14 | 10 | 4 | 19.0 |
| 6 | Stockfish T=1.0 | 1620 | 17 | 4 | 7 | 19.0 |
| 7 | Lichess T=0.7 | 1595 | 15 | 6 | 7 | 18.0 |
| 8 | Stockfish T=1.5 | 1477 | 10 | 6 | 12 | 13.0 |
| 9 | Lichess T=1.0 | 1465 | 9 | 7 | 12 | 12.5 |
| 10 | Stockfish T=2.0 | 1453 | 8 | 8 | 12 | 12.0 |
| 11 | Lichess T=1.5 | 1339 | 2 | 11 | 15 | 7.5 |
| 12 | Stockfish T=4.0 | 1324 | 3 | 8 | 17 | 7.0 |
| 13 | Lichess T=2.0 | 1277 | 1 | 9 | 18 | 5.5 |
| 14 | Exploration (T=∞) | 1277 | 0 | 11 | 17 | 5.5 |
| 15 | Lichess T=4.0 | 1240 | 1 | 7 | 20 | 4.5 |

Mildly interesting how a near-infinite temp tied Lichess T=2.0 and actually outperformed Lichess T=4.0, and how in the Stockfish version a small temperature (0.3) outperformed deterministic/greedy sampling (0.0) but not in the Lichess one. My takeaway is that it's better to "average out" human mistakes, but when the Stockfish model gives you a couple (2-3) valid options, they're probably roughly equally good, since Stockfish's target is "the single best move" whereas Lichess is more "high entropy" / a set of candidate moves.
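For anyone unsure what the temp setting does here, this is a minimal sketch of temperature sampling over move logits. The logits and move list are made-up numbers for illustration, and the function names are mine, not anything from the app or the Karvonen models.

```python
import math
import random

def softmax_with_temperature(logits, temperature):
    """Turn raw move logits into probabilities at a given temperature."""
    if temperature <= 0:
        # Treat T=0 as greedy: all probability mass on the top logit(s).
        best = max(logits)
        winners = [1.0 if x == best else 0.0 for x in logits]
        total = sum(winners)
        return [w / total for w in winners]
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def sample_move(moves, logits, temperature, rng=random):
    """Pick one move according to the temperature-scaled distribution."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(moves, weights=probs, k=1)[0]

# Hypothetical logits for three candidate moves (illustrative only).
moves = ["e4", "d4", "Nf3"]
logits = [2.0, 1.8, 0.5]
for t in (0.0, 0.3, 1.0, 999.0):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```

Low temperatures concentrate on the top move, while a very large temperature flattens the distribution toward uniform over the candidate moves.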

Lol, I was trying to copy ChatGPT's personality feature, but it was just a worse UI than the standard temperature setting option, so I didn't include those commits in the MVP version I published for initial feedback on whether the concept is any good.

I personally think it's pretty cool to have the exact probability distribution, to quantify things like entropy, or creating an adaptive mode where it finds the temp param that minimizes log loss on predicting the user's moves, especially if they import all their Lichess games (ideally a proxy for elo, but needs more work)
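A rough sketch of what I mean by quantifying entropy and fitting an adaptive temperature, assuming you already have the model's logits for each position plus the index of the move the user actually played. The data layout, the temperature grid, and the function names are assumptions for illustration, not how the app currently works.

```python
import math

def softmax(logits, temperature):
    """Temperature-scaled softmax (temperature must be > 0)."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def entropy_bits(probs):
    """Shannon entropy of a move distribution, in bits."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

def avg_log_loss(positions, temperature):
    """positions: list of (logits, index_of_move_the_user_played)."""
    losses = []
    for logits, played in positions:
        probs = softmax(logits, temperature)
        losses.append(-math.log(max(probs[played], 1e-12)))
    return sum(losses) / len(losses)

def fit_temperature(positions, grid=(0.1, 0.3, 0.7, 1.0, 1.5, 2.0, 4.0)):
    """Grid search for the temperature that best predicts the user's moves."""
    return min(grid, key=lambda t: avg_log_loss(positions, t))

# Hypothetical positions: the user mostly, but not always, plays the top move.
positions = [([2.0, 1.0, 0.0], 0), ([2.0, 1.0, 0.0], 1), ([1.5, 1.4, 0.2], 0)]
print(fit_temperature(positions))
print(entropy_bits(softmax([2.0, 1.0, 0.0], 1.0)))
```

A user who always plays the model's top move gets fitted to the lowest temperature in the grid, and a more erratic user to a higher one, which is the sense in which the fitted temp could act as a rough skill proxy.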

Ideally, the next steps would be doing mech interp on the 50M parameter model. Adam Karvonen, the creator of this model, already has a great article on using probes to visualize the internal state and rating ( https://adamkarvonen.github.io/machine_learning/2024/01/03/chess-world-models.html ), so I would try to replicate that first, then look into weird quirks like why it refuses to win by Fool's Mate (2-move checkmate). I'm guessing it's because there aren't many instances of the # token being generated that early in the game, so maybe some adjustments could add some Elo.

More advanced ideas (not sure if I can do it, I wanna try):

1. somehow "gluing two LLMs together" so it can interface in two modalities (chess, english) natively (no tools)

2. continual learning (via fine-tuning on "dreams" plus grounding to avoid catastrophic forgetting as described here: https://gallahat.substack.com/p/llm-sleep-based-learning-implementing)

All that to say: if one person was able to make a 50M parameter transformer (that operates in PGN text, with no explicit state model, its play arose purely from scale) that beats ~90% of casual players (aka typical amateur or ~1400) with a ~$100 budget 2 years ago (before the reasoning paradigm), why can't a bigger lab with a million times larger budget achieve a million times better results? A 10x improvement in skill corresponds to 400 Elo points, so that would be ~3800. I guess if you're pessimistic, you could argue the power law exponent might be like 0.3, so only ~2100 or IM level. Even with that, I think 5 years of architecture/hardware efficiency gains should be another ~1000x compute multiplier.
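The back-of-envelope arithmetic above can be written out as follows. This only encodes the rule of thumb from the comment (10x skill is about 400 Elo, and skill scales as budget raised to some exponent); it is a sketch of the estimate, not a validated scaling law, and the function name is made up.

```python
import math

ELO_PER_10X_SKILL = 400  # rule of thumb from the comment: 10x skill ~ 400 Elo

def projected_elo(base_elo, budget_multiplier, exponent=1.0):
    """Project Elo assuming skill grows as budget_multiplier ** exponent."""
    skill_multiplier = budget_multiplier ** exponent
    return base_elo + ELO_PER_10X_SKILL * math.log10(skill_multiplier)

print(round(projected_elo(1400, 1e6)))       # linear returns: 3800
print(round(projected_elo(1400, 1e6, 0.3)))  # exponent 0.3: 2120
```

With exponent 1.0 the million-fold budget gives 6 factors of 10, so 1400 + 6 * 400 = 3800. With exponent 0.3 it gives 1.8 factors of 10, so 1400 + 720 = 2120, which is the "~2100" figure.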

My take is that with 3 years, anything that's measurable and already achieved by other human methods should be achievable by general LLMs, but if I'm wrong it'll be because chess intrinsically requires searching 1000s of positions (similar to how cryptography or other problems can't be fully compressed via heuristic).

If you wanna help benchmark this model ( https://chess-llm-316391656470.us-central1.run.app/ ), comment ur ELO and record vs various configs

e.g. Stockfish / Lichess temp 0 / 0.3 / 0.7 / 1

tbf It was already benchmarked against Stockfish, but still, I'd like to see it benchmarked against humans and at different temperatures
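If people do post their Elo and record, one simple way to turn that into a rating estimate is to find the rating whose expected score (under the standard Elo logistic formula) matches the actual score. A minimal sketch, with hypothetical results and made-up function names:

```python
def expected_score(rating, opponent_rating):
    """Standard Elo expected score of `rating` against `opponent_rating`."""
    return 1.0 / (1.0 + 10 ** ((opponent_rating - rating) / 400))

def estimate_rating(results, low=0.0, high=4000.0, tol=0.01):
    """Bisection for the rating whose expected total equals the actual total.

    results: list of (opponent_elo, score), where score is 1, 0.5 or 0
    from the model's point of view. With a perfect or zero score the
    estimate just runs to the search bound, so mixed results are needed.
    """
    actual = sum(score for _, score in results)
    while high - low > tol:
        mid = (low + high) / 2
        expected = sum(expected_score(mid, opp) for opp, _ in results)
        if expected < actual:
            low = mid   # model scored more than this rating predicts
        else:
            high = mid
    return (low + high) / 2

# Hypothetical reports from human players (illustrative numbers only).
results = [(1600, 0.0), (1500, 1.0), (1400, 1.0), (1700, 0.5), (1300, 1.0)]
print(round(estimate_rating(results)))
```

This treats every reported rating as if it were on the same scale, which is an assumption: OTB, Lichess, and chess.com ratings differ, so reports would need to say which pool the number comes from.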

@ChinmayTheMathGuy interesting read. If you do manage to get an LLM to beat a chess.com SuperGM bot (while also satisfying @MP's interpretation of the media's interpretation of an LLM), I'll send you 50k mana. I might post an actual bounty at some point because of quality posts like this

45% of people think when they prompt ChatGPT, it looks up an exact answer in a database, and 21% think it follows a script of prewritten responses

In such an environment I could see some incentive to re-educate the population on how LLMs work by rebranding it as something other than a next-word predictor.

+/- There has also been a considerable amount of legal work that has been construed specifically for 'LLMs' that some interests may want to strategically enforce / evade.

imo, this market has an increased risk of devolving into a bet on the evolution of terminology instead of emergent capabilities of chat models.

So far I've been betting with the very unassailable belief that reasoning and multimodal models qualify as LLMs

Someone below mentioned that the criteria are really specific. This is true, but also I think the conjunction of the specific things is even less likely than the individual things happening themselves. Why would the Super GM play blind if the LLM was good enough to make it a challenging game? At the moment it makes for good content because the LLMs just randomly play illegal moves, but if at some point they're actually good, I would expect the standard interface not to be blind chess any more.

In most worlds where LLMs are better than super GMs, I still don't think they ever publicly win a blindfold game against one.

@JohnFaben GM Magnus Carlsen literally played a match against ChatGPT with the exact requirements of this market a couple of months ago

@MP I think John’s point is that Magnus only did that because it was inconceivable that he would lose. If a future match is expected to be competitive, he’s less likely to play blindfolded

[The] idea is to check whether a general intelligence can play chess, without being created specifically for doing so (like humans aren't chess playing machines).

a model that markets itself as a chess engine (or is called as such by the mainstream media) is unlikely to be qualified as a large language model.

Using non-LLM tools or external resources (e.g., chess engines like Stockfish, databases) remains disallowed

@ShitakiIntaki the LLM wouldn’t be using Stockfish though, it’d be using a set of weights that just mysteriously happens to act like Stockfish 🤷‍♀️

such a thing by design would be as generally applicable as a LLM, as long as you encode questions to it as chess puzzles where the answer corresponds to the correct move :P

(lastly, I think such a thing would be hard to market to anyone, so I can’t imagine it being professionally billed as a chess engine)

I am not familiar with the nuances but the proposal doesn't feel like it passes the "smell test" of not being "created specifically" to play chess. 😉

@ShitakiIntaki fair.

counterpoint: you are quoting rules about someone else’s fake money market you don’t control at a cartoon horse on the internet

@ShitakiIntaki There was a quote somewhere about how modern grandmasters have their fathers whisper them opening lines while they are still in the womb.

When most of a GM's childhood is spent studying board evals, it's hard to claim that they aren't in some way specifically designed for the game.