"Consumer hardware" is defined as costing no more than $3,000 USD for everything that goes inside the case (not including peripherals).

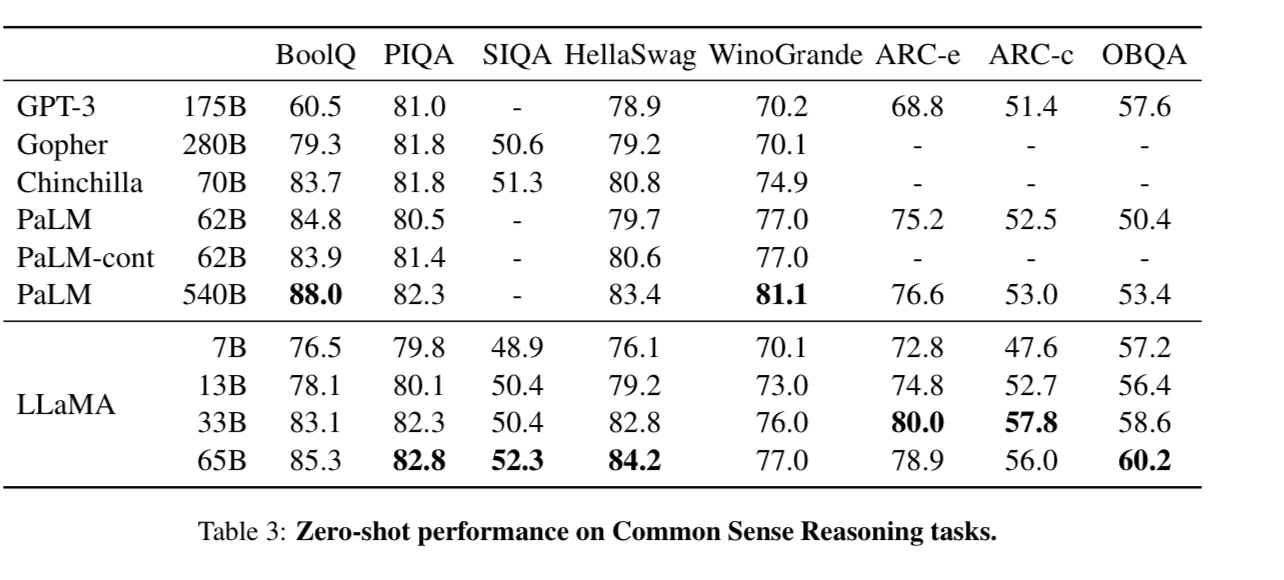

For "GPT3-equivalent model," I'll go with whatever popular consensus indicates are the top performance benchmarks (up to three). The model's scores on those benchmarks should be within 10% of GPT3's. In the absence of suitable benchmarks, I'll make an educated guess at resolution time after consulting experts on the subject.

All that's necessary is for the model to run inference, and it doesn't matter how long it takes to generate output so long as you can type in a prompt and get a reply in less than 24 hours. So if GPT3's weights are released, someone shrinks that model down to run on consumer hardware and gets any output at all in less than a day, and the output meets the benchmarks, this resolves to the year in which that first happens.
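The criteria above can be read as a simple checklist. Here is a rough sketch in Python, not an official resolution procedure. The names (`Candidate`, `meets_criteria`) are made up for illustration, and it assumes that "within 10%" means scoring at least 90% of GPT3's score on a benchmark where higher is better. The actual call is still a judgment made at resolution time.

```python
from dataclasses import dataclass
from typing import Dict

MAX_HARDWARE_COST_USD = 3_000   # everything inside the case, no peripherals
MAX_LATENCY_HOURS = 24          # prompt-to-reply time must be under this
BENCHMARK_TOLERANCE = 0.10      # "within 10% of GPT3's"
MAX_BENCHMARKS = 3              # "up to three" consensus benchmarks


@dataclass
class Candidate:
    """Hypothetical record describing one model-on-hardware attempt."""
    hardware_cost_usd: float
    latency_hours: float
    scores: Dict[str, float]    # benchmark name -> score (higher is better)


def meets_criteria(candidate: Candidate, gpt3_scores: Dict[str, float]) -> bool:
    """Return True if the candidate satisfies every stated condition.

    Assumption: "within 10%" is interpreted as score >= 0.9 * GPT3 score.
    """
    if not gpt3_scores or len(gpt3_scores) > MAX_BENCHMARKS:
        raise ValueError("expected between one and three reference benchmarks")

    if candidate.hardware_cost_usd > MAX_HARDWARE_COST_USD:
        return False
    if candidate.latency_hours >= MAX_LATENCY_HOURS:
        return False

    for name, reference in gpt3_scores.items():
        score = candidate.scores.get(name)
        if score is None:
            return False  # no result on a required benchmark
        if score < (1 - BENCHMARK_TOLERANCE) * reference:
            return False
    return True
```

For example, a $2,500 build that answers in 20 hours and scores 0.65 where GPT3 scores 0.70 would pass under this reading, while the same build at $3,500 would not.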


@firstuserhere

1. "In our experiments, we employed the gpt-3.5-turbo and text-davinci-003 variants of the GPT models as the large language models"

2. "we use ChatGPT to conduct task planning when receiving a user request, select models according to their function descriptions available in HuggingFace, execute each subtask with the selected AI model, and summarize the response according to the execution results."

3. "We built HuggingGPT to tackle generalized AI tasks by integrating the HuggingFace hub with 400+ task-specific models around ChatGPT."

@Gigacasting Can you link me to the paper, the source, or a repo where I can run it on my machine? (I'm inclined to believe you, just want to verify a bit)

@LarsDoucet Not that I can tell, yet. Here's the announcement: https://crfm.stanford.edu/2023/03/13/alpaca.html

@LarsDoucet I am fairly confident this is doable right now. After all, the model runs on CPUs once it is trained.