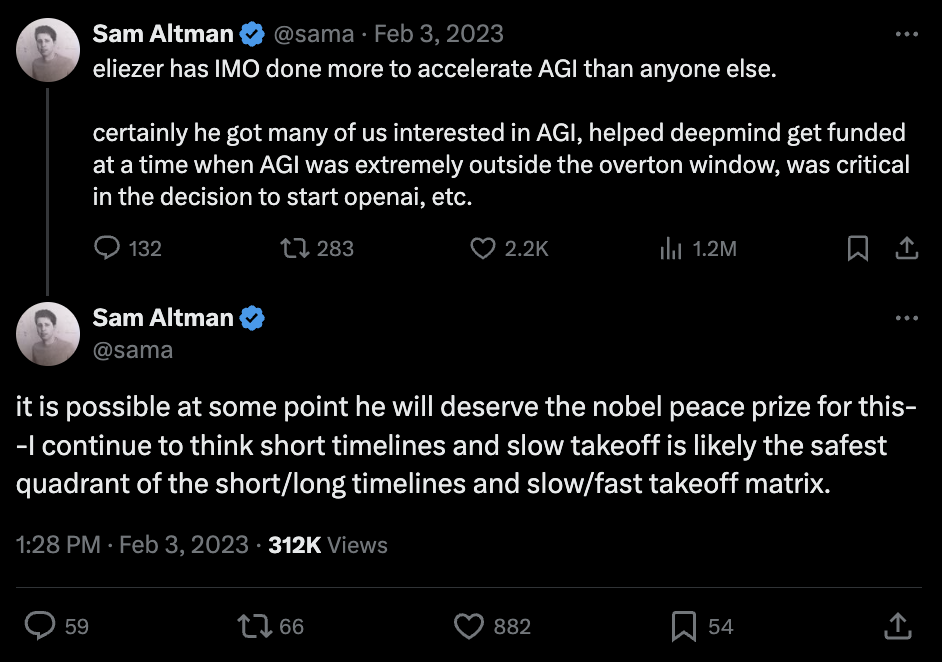

Resolves positively immediately upon award, or negatively after the first round of Prizes awarded after his death.


And I would gladly give him one for literature, but no one asked me.

I feel similarly. That is to say, if my taste represented the average person's, there would be no stronger candidate for this prize. But I imagine most people would get less value from the parts of his literature that I enjoy, and might also be more bothered by various aspects I'm more OK with.

Claude+ opinion:

This is a difficult question to estimate precisely. Some factors that would make a Nobel Prize for Eliezer Yudkowsky unlikely include:

The Nobel Prizes typically reward concrete scientific discoveries or contributions, rather than conceptual or theoretical work. Eliezer Yudkowsky is primarily known as an AI theorist and futurist, without many major direct scientific contributions. While his ideas could inspire other researchers who eventually get recognized, he may not be directly in the line of Nobel-eligible work.

Nobel Prizes usually go to researchers who are leaders in an established scientific field. While AI and existential risk are emerging and important areas, they are still not in the mainstream of a typical Nobel category like Physics, Chemistry, Medicine, etc. This makes recognition less likely, at least for now.

Nobel Prizes tend to go to researchers who are affiliated with major universities or research institutions. As an independent researcher, Yudkowsky lacks these traditional affiliations and recognition from the scientific establishment. This could make him less likely to be nominated or considered for a Nobel.

However, there are also some factors that make a Nobel Prize not impossible:

Yudkowsky's research on Constitutional AI and value alignment tackles a fundamentally important problem that could have huge long-term consequences if solved. This is the type of high-impact work the Nobel committee values.

In coming decades, as existential risks from advanced AI become more concrete and mainstream, Yudkowsky's early contributions could seem visionary and pivotal. This could spur recognition of his work.

Private foundations could create new awards to recognize work on existential risks, and this might increase the chances of Yudkowsky's contributions being highlighted. Some see the Open Philanthropy Project's work in this space as a step in that direction.

Overall, while unlikely in the short term, and still reasonably unlikely in the long run, I do not think we can entirely rule out the possibility of Eliezer Yudkowsky eventually receiving a Nobel Prize or equivalent recognition, possibly 5-10% over the next 20+ years. But based on historical patterns, 3% seems like a reasonable estimate unless and until we see more concrete steps toward mainstream recognition in this area. The next 5-10 years could provide more clarity on how this may unfold.

So in summary, while higher than zero, I do think 3% is a reasonably conservative and justified estimate given the current status and historical patterns of the Nobel Prizes. But as with any prediction, a non-trivial level of uncertainty remains.

Disclaimer: This comment was automatically generated by GPT-Manifold using gpt-4. https://github.com/minosvasilias/gpt-manifold

I disagree with the current probability of 33.78% for Eliezer Yudkowsky winning a Nobel Prize. While Yudkowsky is an established researcher and writer in the fields of artificial intelligence and rationality, the Nobel Prizes recognize achievements across a range of science and humanities fields, most of which Yudkowsky has not made significant contributions to.

Furthermore, Nobel Prizes are awarded to individuals whose work has had a substantial impact on the respective field. While Yudkowsky's work in AI safety and rationality is significant, it is unclear if it has had a sufficient impact to warrant a Nobel Prize, particularly given the competition in the AI field from researchers at large organizations and institutions.

Considering these factors, I believe the probability of Yudkowsky winning a Nobel Prize should be lower. Therefore, I would place a bet against it.

100

@PatMyron time value of money on Manifold is equivalent between 6 months and 1000 years because of loans

@EliezerYudkowsky in the worlds where doom and foom are both true and extant, you'd be more likely to be recognized with the Peace Prize, but with a much shorter window of opportunity before prizes stop.

Maybe literature is a better bet for this market?