If the conclusion follows from the premises presented in the book, and this fact is well indicated by the arguments in the book, this market resolves YES. Otherwise, this market resolves NO.

Resolution will be based on the objective judgement of @jim

Update 2025-06-20 (PST) (AI summary of creator comment): In response to a question about their personal views, the creator has stated that they do not believe Eliezer Yudkowsky has ever articulated a valid argument for high p(doom) in the past. This provides context for the creator's starting position before reading the book.

Update 2025-06-20 (PST) (AI summary of creator comment): The creator has clarified that the term "objective judgement" used in the resolution criteria is tongue-in-cheek.

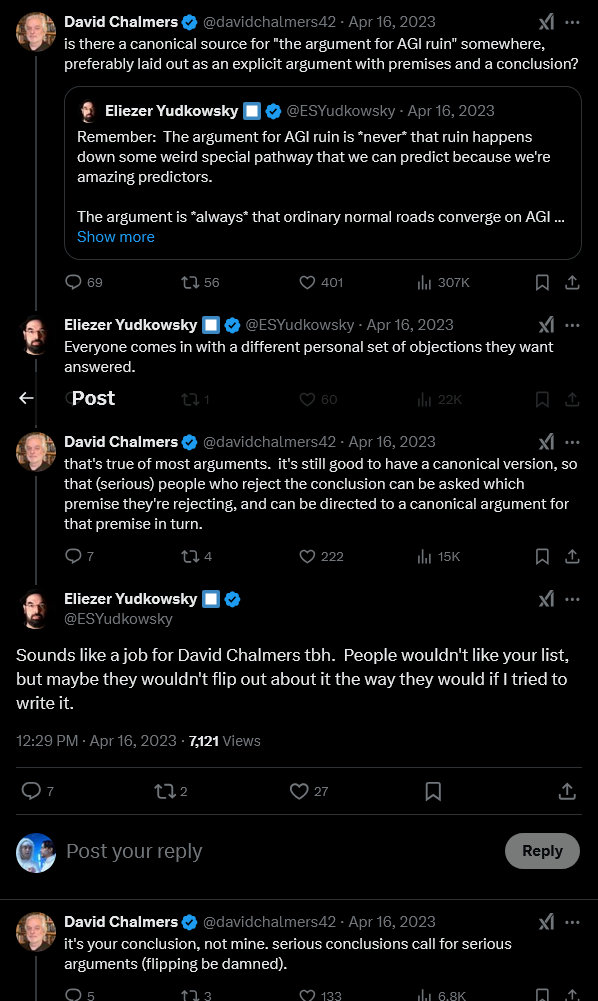

@ProjectVictory Like, I think Yudkowsky actually agrees with me, but plausibly that's what this book sets out to solve? Not sure.

@jim really? Did you watch his recent long interviews? Because I think he articulates quite a good argument for high p(doom) in them. I am not sure what level of depth and structure you expect or which premises you disagree with, but if I try to rephrase what leads not just to some p(doom) (e.g. 5%) but to a high one, it would be something like this:

Our current technologies only allow us to optimise external behaviour in the current environment, not internal preferences. (More generally, progress on alignment is much slower than progress on capabilities, and there is no particular reason to expect that to change.)

Evolution gives us an example of what happens when you optimise minds by external behaviour, even for a much, much simpler criterion (inclusive genetic fitness): they don't even have a notion of it, and they end up inventing condoms and ignoring the opportunity to make a barrel of their DNA, just because of a distribution shift to new capabilities, without knowing about or reflecting on how their creator optimized their machinery.

The space of possible optimization targets is very large, and the chance that we end up on something close to human well-being is tiny if we can't control the optimization target.

Therefore, this very low certainty about where exactly in this space we will end up means there is very high certainty that it will not be some very specific part of it (e.g. in a lottery we are very unsure which exact ticket will win, but we are very sure it will not be one particular ticket).

@EniSci This is a great argument for potentially misaligned agents appearing, but I don't see a good reason why they will be demigod-level superintelligent. I expect humanity to play around with aligning humanish-level intelligent agents for many decades before something like this is created. Also, the current best systems (LLMs) are not optimizers; they work mostly by mimicking human behavior. You can in principle get slightly superhuman on some tasks with current approaches, but LLMs aren't going to display optimizer behaviors by default or get unstoppably superhuman any time soon.

@ProjectVictory wow, encountering it myself makes me understand why Yudkowsky always emphasizes that he can't give "the argument why", because everyone has totally different reasons to disagree.

Well... I was answering the implied "why are you so sure?", because there is a difference between saying "it is likely - ~2 vs 1" and "it is almost certain - 50-200 vs 1" (which are the odds Yudkowsky has given). "Why is there any chance at all of destroying the whole world?" (e.g. why p is not ~0%, but p>70%) is a different question. On the question of "how does he get from AGI to ASI, and fast", Yudkowsky wrote a whole document, "Intelligence Explosion Microeconomics".
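To put those odds on the same scale as the percentages, here is a minimal sketch of the conversion. The helper name `odds_to_probability` is made up for illustration and is not taken from Yudkowsky or the book; it only applies the standard rule that odds of a to b correspond to a probability of a / (a + b).

```python
from fractions import Fraction


def odds_to_probability(in_favor, against=1):
    """Convert odds of `in_favor` to `against` into a probability.

    Hypothetical helper for illustration: odds a:b map to a / (a + b).
    """
    return Fraction(in_favor, in_favor + against)


# The three odds figures mentioned in the comment above.
for odds in (2, 50, 200):
    p = odds_to_probability(odds)
    print(f"{odds}:1 -> {float(p):.4f}")
```

Under that rule, 2 vs 1 is about 67%, while 50 vs 1 and 200 vs 1 are roughly 98% and 99.5%, which is the gap between "likely" and "almost certain" being described.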

Also, there is "GPTs are predictors, not imitators" by Yudkowsky on LessWrong. I personally suppose they are already vastly superhuman at the task they are trained for: if you were asked to predict the next token, you would do much, much worse than them, no matter how much training you had.

@EniSci I completely agree with the last point; I wouldn't be good at predicting the next word in arbitrary text. But optimizing next-token prediction doesn't lead to a paperclip scenario. Almost all the impressive things lately were either transformers or diffusers. I've yet to see any at least somewhat general system that optimises a real-world function at anything remotely close to human level.

Current attempts at "AGI" look like training large transformers and getting them to imagine they are agents trying to achieve something by prompting. This approach is both weak and (in terms of existential risks) safe. Is this what we are worried about? If not, what kind of currently existing architecture is going to kill us?

My biggest problem with doomer arguments is that they set AI power to infinity and argue it's unsafe. I agree, anything infinite is going to destroy everything, intelligent or not.

I expect we'll get close to human level by just scaling up transformers that mimic human text, without any intelligence explosion, and that this will cause a massive unemployment-driven economic crisis much earlier than anything remotely related to his arguments happens.

@ProjectVictory my biggest problem with unworried arguments is that they always fall somewhere between "we never claimed this" and "we answered it in that post".

E.g. about "infinity": there was an old post by EY on the topic of "a supernova is not infinitely hot, but that doesn't mean you can protect yourself with a fireproof suit".

Same with "the real problem is not ASI, the real problem is X": nothing forbids two problems from existing.

And with "real": computer transistors are as real a part of physics as any other part. And with "but it isn't real optimization, it is only imagined optimization".

And nothing requires the AI that will foom to be an LLM; you just need to wait another few years for the next breakthrough.

And optimizing next-token prediction (not just doing it the way a washing machine washes) totally leads to a paperclip scenario; just instead of a universe full of molecular squiggles you get a universe full of computer chips with optimal content.

And the same goes for other arguments, but I just see the tendency that I need to repeat things which were literally already answered. In the same way, I can just point to Rationality: A-Z, AGI Ruin: A List of Lethalities, or perhaps the free online "resources" section of If Anyone Builds It, Everyone Dies, where they have lots of answers to different specific questions.

I mean, I have seen and even taken part in some exchanges similar to this one, and I have almost never seen them change someone's mind or at least go somewhere interesting. So... Well, I don't want to be rude, but it looks like you just didn't read the material and just need to, because your current reasons to disagree aren't new and have already been addressed.

P.S. I actually started writing in this thread just because I encountered quite an unusual question, and moreover a question I once had myself ("why not 90%, but 99%"). But the intelligence explosion was never a question for me (nor was the orthogonality thesis). The question I did have was "why will AI have agency instead of being just a program", and the actual theoretical answer I got was coherence theorems (which are too long to explain in detail), not something else. A more recent practical answer is that companies will just straightforwardly try to build agents, and it doesn't look plausible that they won't achieve it in time.

@EniSci

"supernova is not infinitely hot" - I agree, but we know exactly how hot a supernova is. I expect near-future AI systems to be much "colder" than doomers claim.

"And optimizing next token prediction (not just doing it like washing machine washes) totally leads to paperclip scenario, just instead of universe full of molecular squiggles you get universe full of computer chips with optimal content."

I don't think you understood my point. My point is that a pure LLM is not a real-world optimizer. The agent that is produced by querying the LLM is not trying to optimize tokens, nor is it aware that this is what it's doing, and the optimizer that does the token optimization is not what actually takes real-world actions. For example, a pure transformer will not produce an unexpected sequence of tokens because it understands that this sequence would allow it to hack a server to later produce better token sequences. Could we stumble upon an architecture that does? Maybe, but I don't think we'll do it anytime soon.

I am aware of the arguments you are talking about; I just don't think many of them are applicable to the path we are currently on. If we were doing AGI the AlphaZero way, those arguments would be completely valid.