Will an AI score at least as many points as the top human competitor on the 2025 International Mathematics Olympiad (IMO)? Resolves YES if this result is reported no later than 1 month after IMO 2025 (currently scheduled for July 10-20). The AI must complete this task under the same time limits as human competitors. The AI may receive and output either informal or formal problems and proofs. More details below. Otherwise NO.

This is related to https://imo-grand-challenge.github.io/ but with some different rules.

Rules:

The result must be achieved on the IMO 2025 problemset and be reported by reliable publications no later than 1 month after the end of the IMO contest (so by end of August 20 2025, if the IMO does not reschedule its date).

The AI has only as much time as a human competitor (4.5 hours for each of the two sets of 3 problems), but there are no other limits on the computational resources it may use during that time.

The AI may receive and output either informal (natural language) or formal (e.g. the Lean language) problems as input and proofs as output.

The AI cannot query the Internet.

The AI must not have access to the problems before being evaluated on them, e.g. the problems cannot be included in the training set.

(The deadline of 1 month after the competition is intended to give enough time for results to be finalized and published, while minimizing the chances of any accidental inclusion of the IMO solutions in the training set.)

If this result is achieved on IMO 2024 or an earlier IMO, that would not count for this market.

🏅 Top traders

| # | Name | Total profit |

|---|---|---|

| 1 | Ṁ6,291 | |

| 2 | Ṁ2,625 | |

| 3 | Ṁ2,002 | |

| 4 | Ṁ1,222 | |

| 5 | Ṁ946 |

People are also trading

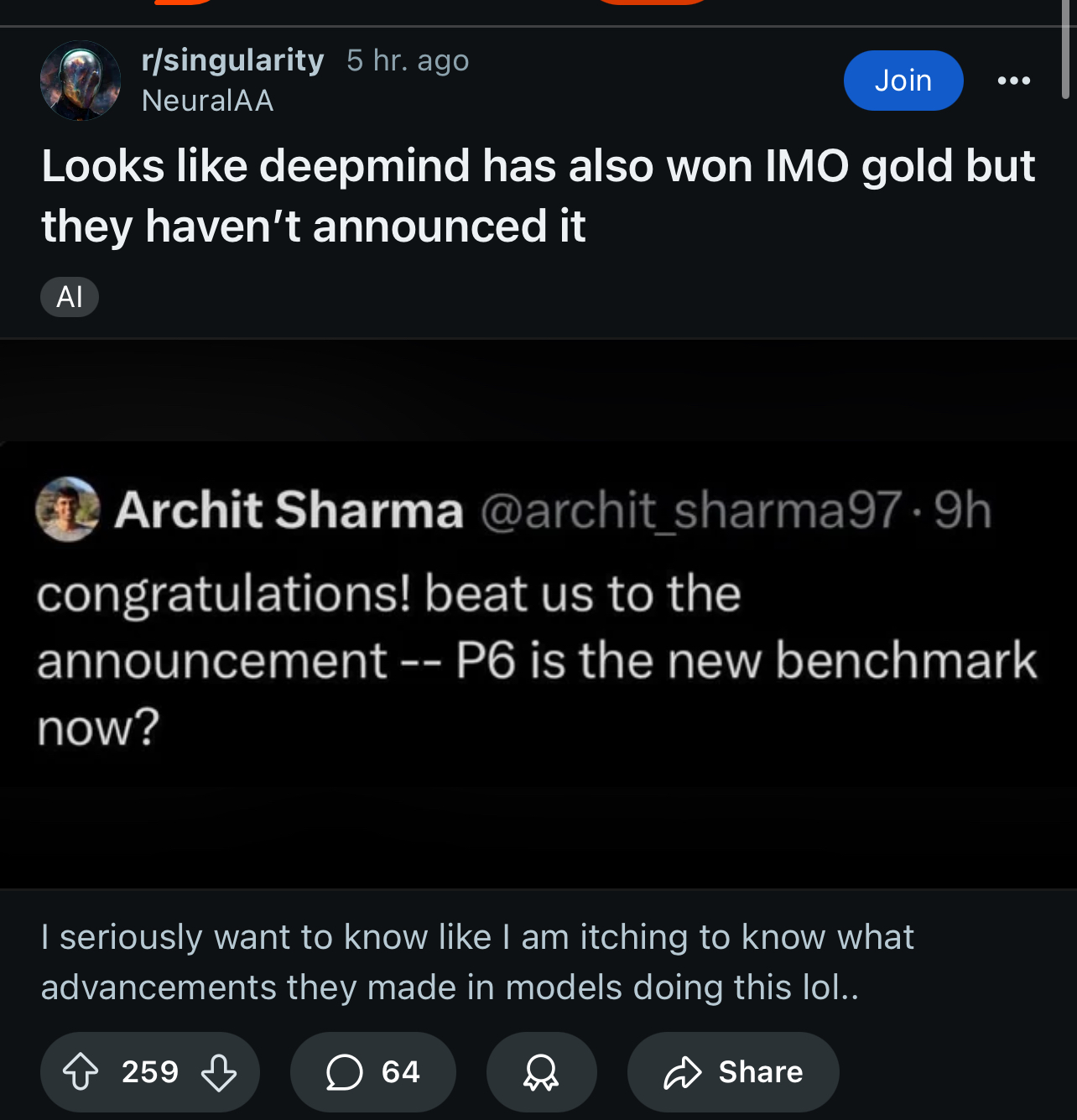

@AdamK it actually made me update downwards somewhat, because it seems likelier in worlds where DeepMind also didn’t get P6, so it’s now a “benchmark”

.

The last year that no one from China got a perfect score was 2018:

International Mathematical Olympiad (imo-official.org)

https://deepmind.google/discover/blog/ai-solves-imo-problems-at-silver-medal-level/

>First, the problems were manually translated into formal mathematical language for our systems to understand. In the official competition, students submit answers in two sessions of 4.5 hours each. Our systems solved one problem within minutes and took up to three days to solve the others.

AI took up to 3 days to solve some problems, and still greatly struggles with combinatorics problems. I think AI will eventually surpass humans at IMO, but 2025 is too soon given this question's conditions.

Yeah, combinatorics still seems hard. But if they make the same amount of progress as they did in the last year...

The 3 days part seems like it shouldn't make a big difference. It sounds like most likely they can just scale up the compute to reduce the time spent.

Converting natural language to formal part isn't the most interesting to me. We already know LLMs are good at NLP, I'm interested in how quickly the reasoning capabilities advance.

The criteria (first sentence) is "Will an AI score at least as many points as the top human competitor". So it resolves YES.

I edited the title to make it less confusing.