Will async SGD become the main large-scale NN optimization method before 2031?

Ṁ100 Ṁ151 2031

78% chance

Resolves YES if asynchronous stochastic gradient descent becomes the dominant method for large-scale neural network optimization before January 1, 2031.

This question is managed and resolved by Manifold.

Related questions

Will there be a significant advancement in frontier AI model architecture by end of year 2026?

21% chance

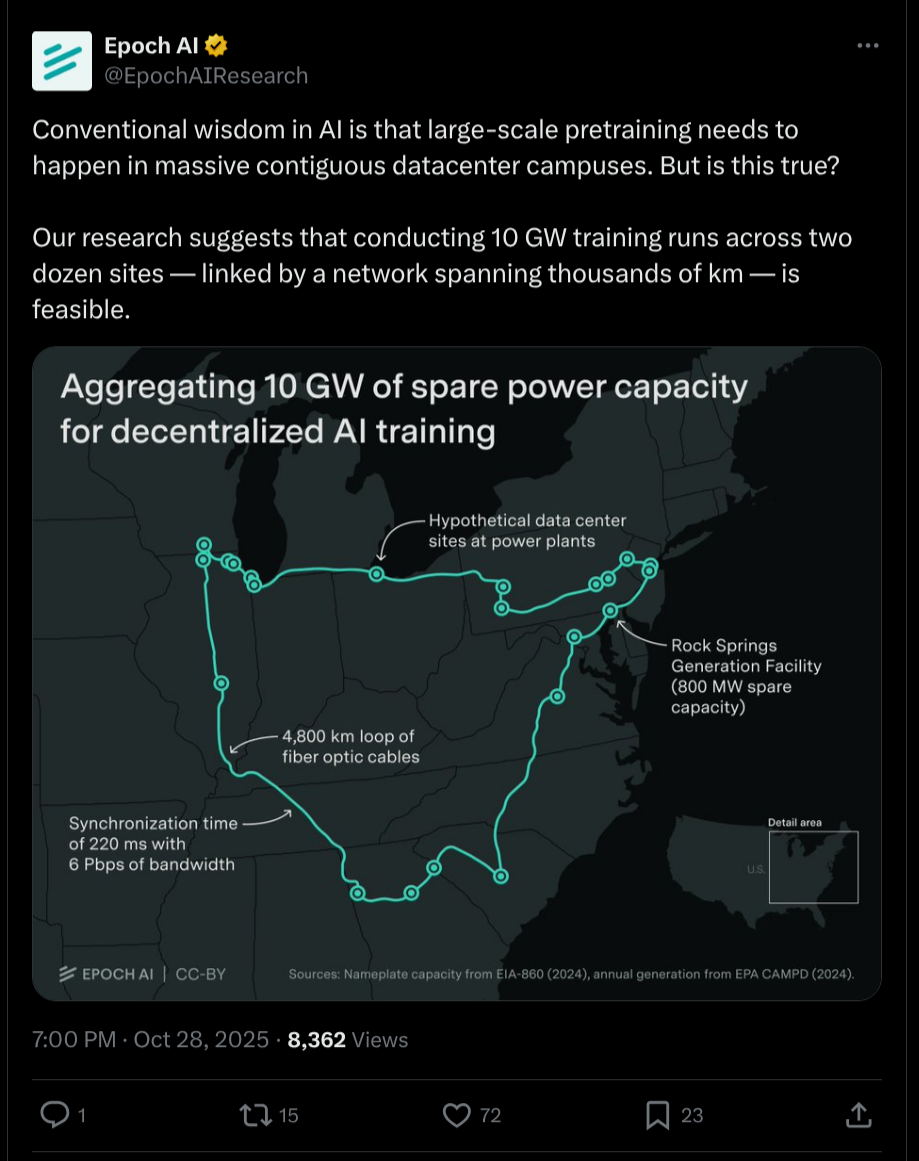

1GW AI training run before 2027?

81% chance

100GW AI training run before 2031?

33% chance

By 2027 will Adam be replaced by a novel optimization algorithm?

56% chance

Will Adam optimizer no longer be the default optimizer for training the best open source models by the end of 2026?

43% chance

Will we see the emergence of a 'super AI network' before 2035?

72% chance

By EOY 2026, will it seem as if deep learning hit a wall by EOY 2025?

13% chance

10GW AI training run before 2029?

20% chance

By the time we reach AGI, will backpropagation be used in its weights optimization?

61% chance

What will be the parameter count (in trillions) of the largest neural network by the end of 2030?