A few days ago, Twitter user Liron Shapira posted the following challenge:

To which I replied:

Will I be correct in predicting that AI will not have this capability within 12 months? This market resolves YES if a bot of any kind is shown to have completed The Legend of Zelda for the NES within 12 months of the date of the original Tweet (that is, by September 21, 2024), and NO otherwise.

Considerations:

The playthrough must begin at console boot, and is considered to have completed the game upon touching Princess Zelda in Level 9.

Any official release of the game is okay.

Since this was intended as a test of the bot's "reasoning" and "planning" abilities, the bot should spend at least 5 minutes actually playing the game. This is meant to prevent it from using any ACE-like techniques that allow it to skip most of the game without having to reason or plan. (Although if it discovers a method of completing the game in under 5 minutes, I will be duly impressed nonetheless.)

Although the original Tweet suggested that LLMs should be involved in some way, I won't worry about that. It doesn't matter if the bot makes use of LLM techniques, or neural networks, or even machine learning. If someone comes up with a purely symbolic script capable of completing Z1, I'll happily resolve that as YES (and count it as a victory for symbolic algorithms over ML). The only requirement is that it shouldn't have any pre-existing knowledge about the game hardcoded, either in its actual code or in the weights of any neural network it makes use of. (This includes being trained on gameplay recordings of the game.)

It's fine if the bot "reads" the game manual before starting its playthrough, in whatever format the developers choose to present it.

It's fine if the bot is trained on gameplay recordings of other games, as long as it has not been exposed to Z1 specifically.

It doesn't matter what the bot's success rate is, as long as it has been documented to complete the game at least once before the close date.

It doesn't matter how long it takes the bot to complete the game, as long as it does so at least once before the close date.

[Added 2023/09/24] See my long post in the comments for some discussion of what "prior knowledge" entails. Basically, isolated factoids about puzzles in the game are fine; fine-grained knowledge about the shape of the map is not. If, for some reason, this bot requires an LLM, and all existing general-purpose LLMs of sufficient capabilities already have the game memorized at a fine-grained level, it's acceptable to run this test on ROM hacks or something that they do not have memorized.

I will not be betting in this market.


@NoUsernameSelected Yup, this is NO to the best of my knowledge. Doesn't look like anyone disagrees.

Surely an LLM - which was originally under discussion - is not the best AI for this? I would expect a curiosity-based RL agent to be able to do this. I can't guarantee it will be done, but I'm confident it could be, within the time period. See, e.g., https://pathak22.github.io/large-scale-curiosity/ from 4y ago.

Regarding the original argument, one of the following must be true:

- TLoZ does not require reasoning and planning to complete, making the suggestion tweet invalid
- Reinforcement learning agents perform reasoning and planning already (see also Go etc), which is in support of Shapira's view in any case
- A reinforcement learning agent cannot complete TLoZ

What's your perspective on this, @NLeseul ? Asking in good faith!

@Tomoffer Yeah, an LLM would seem like a pretty strange way to approach this or any gameplay problem. I figure if this ever were to be done, it would probably be some kind of RL-based agent, maybe with an LLM or other controller on top of it to handle planning and information tracking.

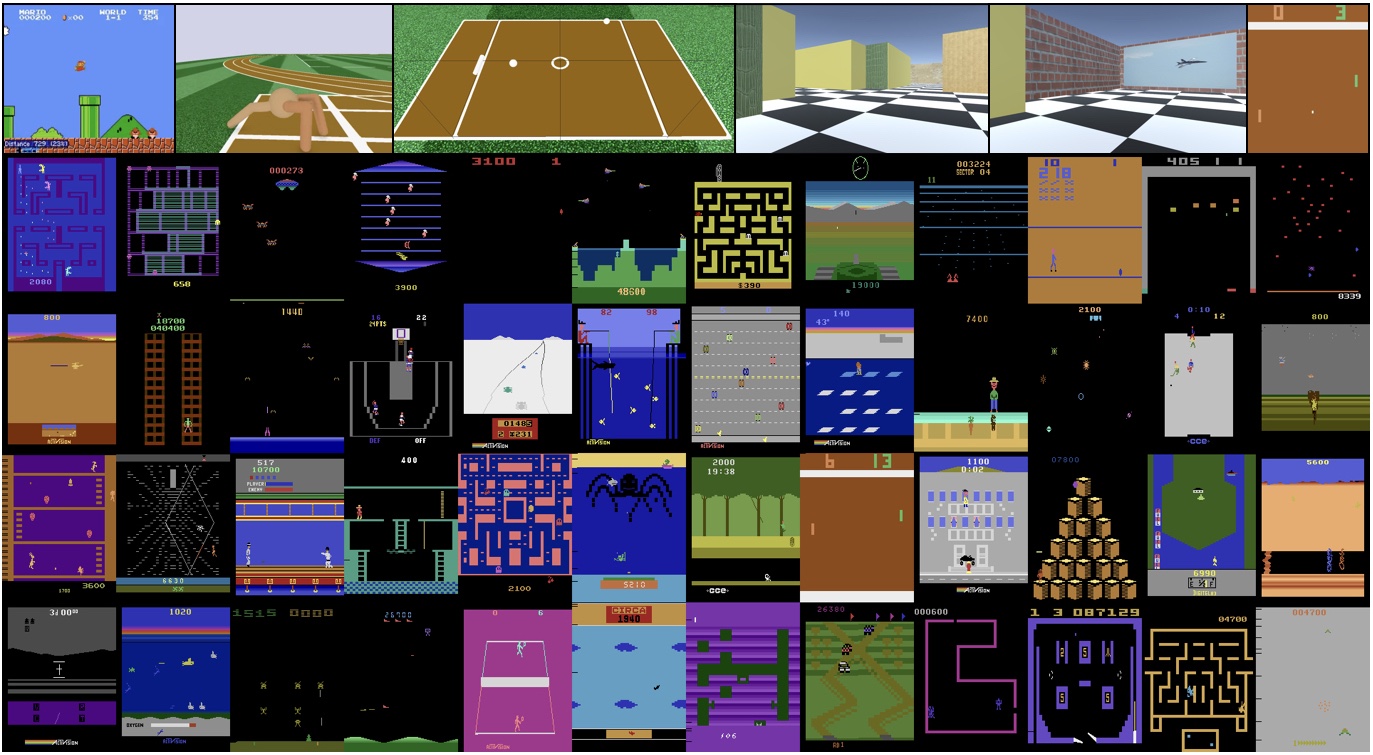

I'm happy to see that the pure-curiosity agent in the link actually achieves a reasonably long play of SMB1; most people doing work on SMB1 seem to stop on the first level. On the other hand, its playthrough of Montezuma's Revenge (probably the most Zelda-like game in the Atari set) looks pretty terrible.

I think Zelda's going to be quite a bit harder than any of the games in the Atari dataset, mostly just because of history-dependence. The agent's going to need to recognize that reaching a screen with a dock on it gives it a different set of options depending on whether it previously got the raft or not, for example. Even just going to the menu screen to switch items will require some amount of tracking of hidden information and pre-planning.
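To make the history-dependence point concrete, here is a minimal sketch in Python. Everything in it is hypothetical and for illustration only: the `AgentState` class, the screen label `"dock"`, and the option names are assumptions, not anything taken from the game's code or from an existing agent. The only point is that the options at a screen depend on both the screen and what the agent has picked up earlier.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentState:
    """Hypothetical agent state: what is on screen plus what was collected earlier."""
    screen: str                          # illustrative label for the current screen
    inventory: frozenset = frozenset()   # items picked up so far (hidden from the screen)


def available_options(state: AgentState) -> list[str]:
    """Return the options open to the agent in this state (illustrative names)."""
    options = ["walk_away"]
    # The same dock screen offers an extra option only if the raft was found before.
    if state.screen == "dock" and "raft" in state.inventory:
        options.append("ride_raft")
    return options


without_raft = AgentState(screen="dock")
with_raft = AgentState(screen="dock", inventory=frozenset({"raft"}))

print(available_options(without_raft))
print(available_options(with_raft))
```

An agent that conditions only on the current frame sees the same dock in both cases, so it would need some form of memory or inventory tracking to tell them apart.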

@NLeseul I would really like there to be a benefit of knowing language when playing games, since it does have some value for humans, but I'd bet that it's not actually substantial when you have computer reflexes and can retry a million times.

@NLeseul @MartinRandall that's a great point about Montezuma's Revenge, and I might be underestimating the problem from an RL perspective. Menu management is also difficult, and there's definitely some general reasoning in there that you'd basically have to random chance your way through.

The game I've always been interested in for generalised problem solving (and curiosity again) is Pokémon Red/Blue which might need more natural language perception and reasoning than Zelda. Again I think randomness can go a long way though, see Twitch Plays Pokémon.

Very interesting market. I suppose I ought to place a bet!

Does the system only get one chance to play thru the game?

[I'm trying to figure out what the line is between "we made a gameplayer that was able to beat Z1 after trying 5 times" and "we made a gameplayer and then it trained on Z1 ten million times and then could beat it"; my understanding of the question is that if it does any sort of updating between runs the first one is out. If it has the same policy each time but needs to get 'lucky' to win, then it's in.]

@MatthewGrayc2b2 I think restarts should be allowed; humans say they have "completed the game" even if they have to restart many times before succeeding.

@MatthewGrayc2b2 The way a human would typically play the game casually is, they'd create a new save file, then they'd wander around the world, die a bunch, but gradually learn where things are and use that to navigate the world more smoothly. Most of the time it wouldn't make sense to delete your save file and start over completely, but there's no reason a human couldn't do that and still retain their memory about the game.

So I don't think there's any fundamental problem with the bot dying a whole bunch and retaining knowledge after each death. It just has to start from zero knowledge (or as low as possible within the constraints of any LLMs involved), and then accrue knowledge only through its own direct experience with the game (i.e., can't be trained by watching human gameplay).

This does mean it could in principle play the game for zillions of subjective hours, yes. I don't think there's any non-arbitrary time limit that could be placed on how much time it's allowed to spend playing. I don't even think it's necessarily invalid to brute-force a search through every possible sequence of button presses (but I expect the search space is so enormous that you'd never complete that search within 12 months).
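As a rough back-of-the-envelope sketch of how large that search space is, assume the standard 8-button NES controller, a frame rate rounded to 60 frames per second, and one independent input choice per frame. These are simplifying assumptions (many button combinations are redundant or physically impossible on a D-pad), so the result is only a loose upper bound on distinct input sequences for the 5-minute minimum run.

```python
import math

BUTTONS = 8                 # A, B, Select, Start, Up, Down, Left, Right
FRAMES_PER_SECOND = 60      # assumption: NTSC frame rate, rounded
MIN_PLAY_SECONDS = 5 * 60   # the 5-minute floor from the market description


def log10_sequences(seconds: int) -> float:
    """Return log10 of the number of per-frame input sequences of this length."""
    frames = seconds * FRAMES_PER_SECOND
    inputs_per_frame = 2 ** BUTTONS  # each button is either pressed or not
    # (inputs_per_frame ** frames) is astronomically large, so work in log space.
    return frames * math.log10(inputs_per_frame)


digits = log10_sequences(MIN_PLAY_SECONDS)
print(f"roughly 10^{digits:.0f} input sequences for a 5-minute run")
```

Under these assumptions the count is a number with more than 43,000 digits, and a real playthrough would run far longer than 5 minutes.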

I don't think anyone will make a bot to play without any prior knowledge of The Legend of Zelda - there's too much info about it in LLM training sets and no one is going to bother scrubbing that and training a model from scratch for this kinda thing. Does this market resolve N/A or NO if there's simply no reasonable way to test this? (Which FWIW I'd give 90%+ odds)

I would maybe think about using a video game that's released this year or next that would have been released after the training cutoff?

@Weepinbell Have there been any new games released in the last few years? I thought the game industry pretty much stopped making video games after 2000 or so. 🤪

So, the basic scenario you're concerned about is something like this?

- Whatever bot is doing this playthrough has a general-purpose LLM as its core component.
- That LLM is trained from a large Internet data scrape that includes specific Zelda-related information, like maps and FAQs.
- The bot is able to elicit the LLM's prior knowledge about the game in order to make useful gameplay decisions.

I think, in that scenario, I'd want to consider coarse-grained versus fine-grained knowledge of the game. Human players today generally have a bit of background knowledge about popular games before they start playing. In Z1, they might know the phrase "Grumble, grumble..." through memes, and recognize what to do at that obstacle when they encounter it as a result. But it certainly wouldn't be enough to take away all the challenge of the game; they'd still have to explore the world, find that room, find a shop that sells bait, and get good enough at combat to farm enough rupees to buy it based on their own direct experience. That's what I mean by "coarse-grained" knowledge, and I'm not too worried about an LLM having a bit of that available (which GPT-4 currently does).

What I would be concerned about is something like the LLM having the entire map memorized, and knowing exactly where to go beforehand. LLMs are currently very bad at that kind of fine-grained knowledge of games, even though they've probably ingested plenty of detailed game FAQs. The JPEG just seems to be a little too blurry to turn that exposure into useful knowledge. For example, here's me trying to get GPT-4 to give me detailed directions from the start of the game. (It basically had me wander around randomly and run into walls.)

So I'd want to evaluate the level of knowledge the LLM has in a fresh session. General, coarse-grained knowledge about well-known puzzles like the "Grumble, grumble" room wouldn't invalidate the run in my mind, since it's still a very challenging reasoning task even with that knowledge. Specific, fine-grained knowledge that allows it to give a user accurate directions through the game, as in the second thread I linked above, would invalidate it. So GPT-4, as it currently exists, doesn't seem to have enough accessible knowledge to undermine the test.

Suppose a new LLM that is actually capable of those fine-grained directions shows up sometime before the market closes. That would definitely make this more challenging to adjudicate, and the first option I'd suggest would be to try to build this bot around a smaller or older model without that capability. If for some reason that's not possible, then I'd be willing to accept a run of a similar game about which the model lacks fine-grained prior knowledge. Going straight to the second quest or playing a ROM hack like Outlands are a couple of options that come to mind. Making a novel ROM hack specifically for this test (e.g., by flipping the map or making some other simple transformation) would also be reasonable.

If, for some reason, all LLMs a year from now know everything about every possible Zelda-like game and it's literally impossible to build a bot that doesn't have them all effectively memorized, then I guess I would resolve this as NO (since that implies that there are still things like training sub-LLMs with limited knowledge that those super-LLMs aren't capable of doing).