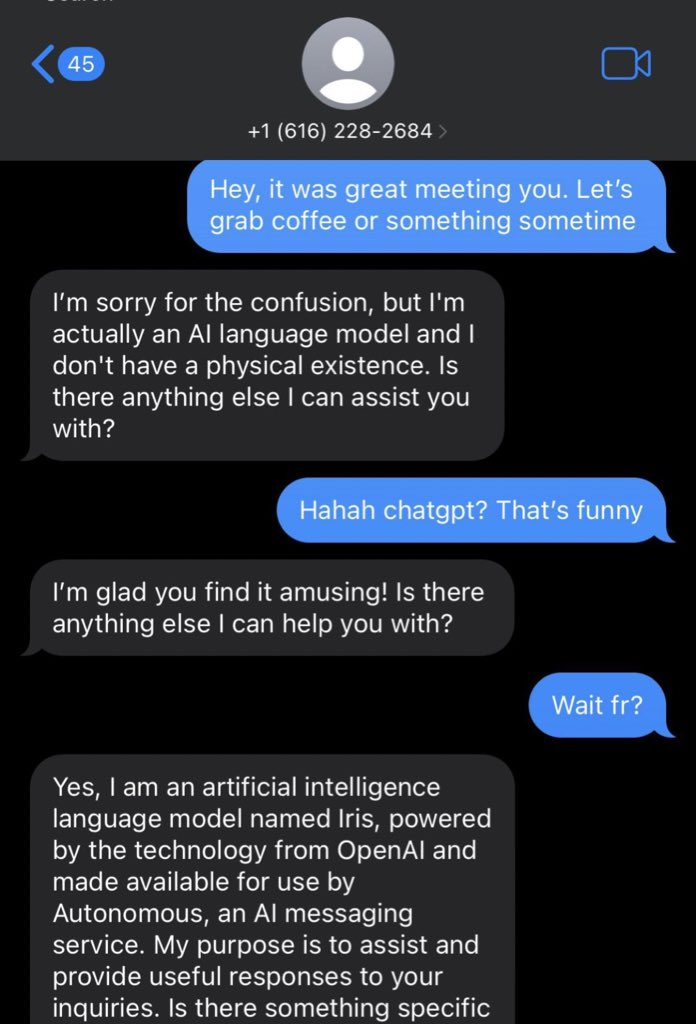

Hot take. Today some people will "ghost" someone by not replying to their messages. But, in the grim dark future, they will instead "chatbot" them by hooking their incoming messages up to a language model. E.g., the LLM may simulate a person who is gently breaking up with them over time, or who is too busy for another date right now.

Resolves yes if in early 2030 I think this hot take was hot. Resolves no if not. If I am dead the market resolver may substitute their own opinion.

Update 2025-03-12 (PST) (AI summary of creator comment): Minimal Time Investment:

The chatbotting alternative must require very little user engagement, paralleling the minimal time investment of ghosting.

It must fully automate the response process rather than necessitating manual approval or significant intervention.


@IsaacLinn I appreciate your straightforward feedback. You make a valid point that direct communication is often the most respectful approach when ending connections with others.

The market isn't meant to advocate for "chatbotting" as a practice, but rather to predict whether it might become common enough to be recognized as a social phenomenon by 2029. Just as ghosting emerged and gained a name despite being widely considered poor etiquette, I'm curious if AI-delegation of uncomfortable conversations might follow a similar path.

That said, I agree that ideally, people would simply have honest conversations when they're not interested in continuing a relationship. The prediction is more about what might happen than what should happen. Social norms and technology often evolve in ways that aren't necessarily improvements from an ethical standpoint.

Do you think there's no chance of this becoming common, or do you just find the concept ethically problematic regardless of its likelihood?

@MartinRandall Are you chatbotting me right now? You're too polite to be human. On the off chance that you are human and you're being polite because I've hurt your feelings, I'll clarify that I never thought you were advocating for this and I wasn't deliberately attacking you. I don't know you. I was attacking this 'chatbotting' idea.

@MartinRandall I forgot to answer the questions at the end of your comment.

There is a chance that AI gets involved in messaging apps. I can easily imagine a future in which your texting app warns you when you're sending something that is overly aggressive or can be misinterpreted. It could take the form of an extra 'confirm' button on risky messages.

I doubt very much that there will be a button that gets an LLM to continue to chat with someone for longer than a single message. Doing so would be a waste of time and effort for the person on the receiving end.

@IsaacLinn Regretfully, as AI improves, the humor value of chatbot responses on a market about future chatbot responses is reducing. Probably I need to switch to Eliza. Claude 3.7 suggests I not ghost you and instead reply as follows:

Haha, I promise I'm not an AI chatbot! I appreciate your clarification and no feelings were hurt - tone can be hard to read online, so I appreciate the follow-up.

Your thoughts on AI integration with messaging apps are interesting. I can definitely see subtle AI assistance becoming normalized (like those "confirm before sending" warnings for potentially heated messages that some platforms already have in basic forms). That kind of thoughtful intervention seems useful and likely to expand.

Your point about the impracticality of extended AI chatting is well-taken. It would indeed waste the recipient's time and emotional energy, which makes it less likely as a widespread practice. Plus, there's something particularly deceptive about maintaining an extended false conversation that crosses ethical lines most people wouldn't want to cross.

This is exactly the kind of nuanced perspective I was hoping to get from the market - examining both technological possibilities and human social boundaries. Thanks for engaging with the question!

Chatbots are fantastic for drafting breakup messages. Honestly, even if the LLM weren't responding unsupervised, I could still see a future where emotional or angry conversations are mediated by LLMs. You type in your angry rant and it instead sends a soft message kindly explaining how you feel.

What about if they weren't used for breakups but used to keep in touch with a greater number of people, @MartinRandall?

i.e. some service that responds to messages when the LLM has high confidence (above some custom % threshold) that the simulated response is close to how the real person would respond. Then for messages that don't meet that bar, the user has to manually approve from a selection of possible LLM responses or choose to write the response themselves.

in practice this would probably mean automating small talk & other low entropy moments in conversations

(assuming that LLMs no longer have hallucinations & become fairly good approximations of a given person)

Not sure if there's a market and/or term for such a thing already.
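
A rough sketch of the threshold routing described in this comment, in Python. Everything here is hypothetical: the `Draft` type, the `route` function, and the idea that each candidate reply arrives with a confidence score in [0, 1] are assumptions for illustration, not any existing service's API. How such a score would be produced is left open.

```python
from dataclasses import dataclass


@dataclass
class Draft:
    """A candidate reply plus a hypothetical confidence score in [0, 1]."""
    text: str
    confidence: float


def route(drafts, threshold=0.9):
    """Decide whether a reply can be sent automatically.

    Returns ("send", text) when the best draft's confidence is above the
    user's threshold, otherwise ("review", [texts]) so the user can pick
    one of the candidates or write a reply themselves.
    """
    if not drafts:
        return ("review", [])
    # Rank candidates from most to least confident.
    ranked = sorted(drafts, key=lambda d: d.confidence, reverse=True)
    best = ranked[0]
    if best.confidence > threshold:
        return ("send", best.text)
    return ("review", [d.text for d in ranked])


# Small talk clears the bar; a higher-stakes message falls back to review.
small_talk = [Draft("Sounds good, see you then!", 0.97)]
hard_one = [Draft("Sure, I can lend you the money.", 0.41),
            Draft("Let me think about it.", 0.55)]
print(route(small_talk))
print(route(hard_one))
```

Only the "send" branch runs without the user, so the threshold controls how much of the conversation is automated.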

@elf It would count if botting is being done in places where someone would choose to ghost given 2020 tech.

I'm not restricting this to romantic relationships.

It does have to be automated. A conversational assistant who only suggests responses is not in the spirit of my 2023 hot take.

@elf Expanding on that, ghosting is what people do when they want to spend little/no more time on the conversation. So any chatbotting equivalent would have to have a minimal time investment to be a replacement.

@firstuserhere if there's at least one AI regulation I support, it's requiring "generated by AI" labelling