Resolves Yes if anyone explains to me (in a manner I consent to understanding) why the novel algorithm in decentralized collective intelligence that I'm desperately trying to draw attention to for peer review, which creates a truth verification economy and provides the game-theoretic incentives to solve the general control problem, doesn't demonstrably prevent AI catastrophe.

Please resolve no if I die.

Thanks

@Krantz If this is your response to my last comment from a month ago, where I believed I was making a good-faith effort to unpack and understand your claims... then I guess I'll write you off as yet another crank who gets satisfaction from feeling misunderstood.

@NivlacM What percentage of the world's information do you think you've been exposed to? I need that information to calculate how highly I should value your opinion.

@Krantz All the information in the world? Some tiny fraction of a percent. About as much as anyone else, I figure.

Here's my ledger of thinking on this topic:

Eliezer Yudkowsky is smart .9

Eliezer has some good reason to not listen to you .8

Testing true understanding is impossible .7

True ideas should be falsifiable .9

You haven't provided any way this idea is falsifiable .8

Really smart people can win lots of mana .7

Therefore making mana is an indicator of intelligence .7

This ledger thing is actually a little fun .8

Not everyone paid to understand something will agree with the conclusions about what to do with the information. Even if we pretend people are motivated only financially (they aren't), there will be financial incentives to keep building systems that carry some small risk of catastrophe.

Some people have read the arguments, understand them, and continue working for big tech companies on AI already.

@LiamZ I really don't need anyone to believe anything. I'm paying you to transparently consent to laws that a decentralized AI and other humans ought to abide by.

@Krantz You obviously need people to believe that's a good idea, so this reply isn't very well considered.

"You see, this is where the money that you earn comes from. If people want something thought about, they can incentivize those propositions. The religious leaders will want you to learn about their religion, the phone companies will want you to learn about their new phone options. Your neighbors might want you to watch the news. Your parents might want you to learn math. Your wife might want you to know you need to take out the trash. People pay money to other people to demonstrate they understand things.

People will want to put money into this."

Decentralized marketing? Will be used to teach the whole world specific knowledge of AI dangers? And that propositional knowledge will cause humanity to grow up and not build dangerous AI?

Am I misrepresenting the core of your arguments here?

@JamesBaker3 In some sense that's correct, but in another sense it completely misses the point.

It's really about decentralizing communication and attention.

The thing I'm proposing is to build a decentralized symbolic AI. Think of the specific work that Doug Lenat was doing at Cyc. He directed dozens of researchers for 40 years to perform the cognitive work required to define a symbolic model of truth.

I'm talking about decentralizing that cognitive labor. So, instead of nerds mining bitcoin, they can perform this work demonstrably in a way that serves to verify what is true and learn quite a bit about important issues which they otherwise would not have considered.

I'm talking about a paradigm shift from an AI that learns on its own into one that instead pays humans to verify what it should believe. A shift from ML to GOFAI.

Its purpose is not specifically to 'teach people how dangerous AI is'. The intention is to create a market that rewards those who are capable of identifying what is true.

If anyone takes the time to actually research what I've put into the public domain, I think it would be clear that my desire to resolve whether such a methodology could address the potentially existential consequences from continuing in the current direction outweighs any loss from the pretend pocket change that I've wagered on any of these markets to direct the attention of people that might understand the issue.

I don't know who many of you are, so I tend to assume you are a bunch of 20 year olds that just started learning about AI after 2017 or so and basically only see the domain of machine learning when you discuss AI.

If you don't know who Danny Hillis was and aren't familiar with his work or understand why he and Doug split ways, then you're probably not going to offer any insight. Sorry.

@Krantz In this case you're replying to someone who did GOFAI postgraduate work 20 years ago, working firsthand with ResearchCyc. I have opinions on the relationship between symbolic AI and RL, but none of them seem relevant here yet.

The "market that rewards... what is true" part, before we even get to any computers or algorithms, seems like hand-waving wishful thinking to me. The phrase "what is true" alone glosses over the perspectival question of relativism, which doesn't go away even if you point to your algorithm to perform some Community Notes-like consensus-finding.

Is it about building a KB of truth, or about educating, or changing people's beliefs? Which one of those would "prevent AI catastrophe" and does it have anything to do with an algorithm? (I don't care if it does, I'm just referring back to the title here.)

If you assume I'm a smart person who has thought a lot about these topics, what insight would you guess that I'm probably missing? (That you're trying to bring to the world here)

That's great to hear. The problem begins when we consider anything objective. There can be no objective authority of truth. Danny split ways with Doug because Doug was aimed at building an objective KB. Danny correctly believed an objective KB was unattainable. We can only build subjective ledgers of belief. Manifold is a subjective ledger of beliefs. I can't tell what's objectively going to happen. I can only see the subjective beliefs of others.

I'd recommend trying to approach this question as an economist. What do you think would happen if crypto evolved to the point where it allowed citizens to prove to their elected officials exactly where they stand on the issues and what issues they think are most important?

What's the difference between voting yea or nay on a given ballot initiative in a crypto city and upvoting or downvoting a post on Twitter claiming hot dogs are sandwiches? What's the difference between negotiating a constitution and naturally evolving to agree on a language?

The answer is where/how that information is stored.

All we really need is a generally inclusive container that anyone can put info into.

If we agree on a way to compress all the possible truth, the only thing left to figure out is who believes what.

I think you're missing the scope of the project.

This would require the intentional use of the X platform to collect and reward the submission, verification and public normative support for various truths about the world. We could turn X into a public voting machine the government couldn't ignore. We could also use this machine to reward solving problems in public and checking each other's work.

@JamesBaker3 Think of it as the market that rewards (the creation and verification of information that will trend to be seen as 'valuable to maintain' to the people evaluating it in the future) if that helps.

@Krantz I hear two distinct things here: one is a voting system, the other is an mturk-like KB builder. I'm unsure whether you mean them to be separate or the same.

On the voting front: I will agree that we could possibly turn voting up to 11. Gov ID for all + easy public voting system + liquid democracy = great, that would be really cool. And I don't think "figure out is who believes what" would get us anywhere on its own. (Not that I'm attributing that to you as a claim.)

On the market incentivizing valuable-truth-verification... what's the economic approach again? Who pays? What are the incentives of the market participants? As far as I can see so far, there's something off in the theory I read in the LessWrong post, which I'd summarize as "people care about money, so just pay them money [to have right beliefs]."

@Krantz I recommend you either don't bet, so you are unbiased, or you bet yes, so you are incentivised to adversarially challenge your own beliefs.

@TheAllMemeingEye Are you buying “yes” with the guess this will make him sell his “no” so you can then sell your smaller “yes” at profit?

@LiamZ I bought yes because their entire lifetime is a pretty big window to change their mind on a single fringe theory. I'd put it at like 60% that they change their mind this year and 90% that they change their mind within their lifetime.

@TheAllMemeingEye Oh not a criticism I just thought it was interesting! I think it’s clear from the profile this person has different motives for making these markets.

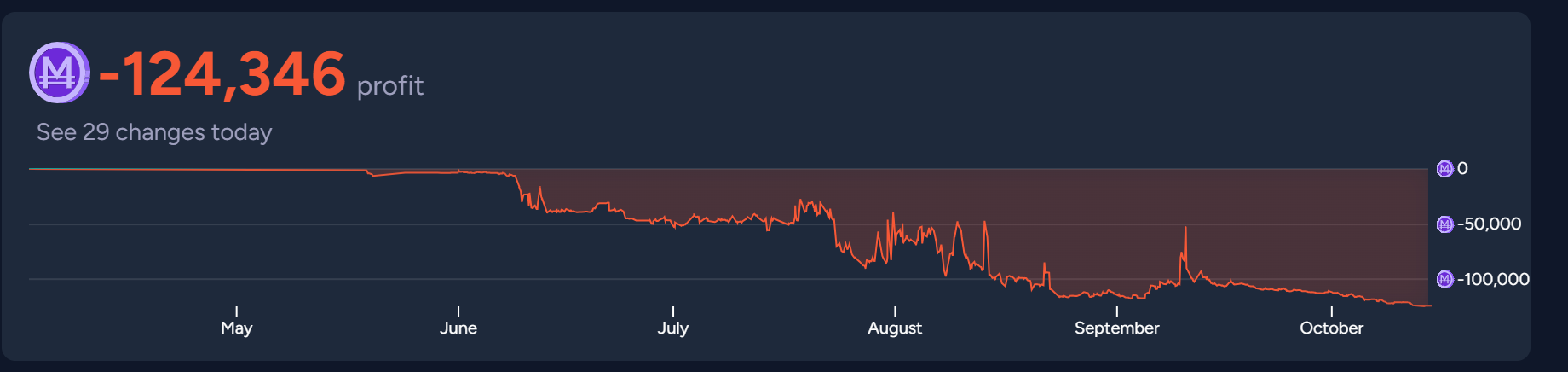

@LiamZ So, where should I put the money I want to happily spend to incentivize others to explain where I'm wrong?

@TheAllMemeingEye I really couldn't care less about mana. I care about my kids surviving AI. I genuinely believe you guys not taking what I'm trying to tell you seriously is putting that at risk.

If anyone wants to actually have a conversation, let me know.

@Krantz Very well, I promise that I will read your linked post in its entirety when I next have a large chunk of free time