Criteria for YES: A change that happens within 60 days (a month plus generous leeway) of February 21st makes human forecasting largely obsolete. This would be decided by forecasting scores that are competitive (top quartile?) with top-performing humans in a closed setting, or something like top decile on open alternatives like Metaculus, Manifold, or others.

If this can't be either extended (a seemingly competitive model was released in the permitted window, but we so far lack data) or resolved by EOY 2024, this resolves NO.

Resolution will be decided by me subjectively, and I will never bet in this market.

I would be happy to get further developed resolution criteria with help of other users.
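As a starting point for such criteria, here is a minimal sketch of how the quartile/decile thresholds above could be checked. Everything specific in it is an assumption and not part of the market's rules: it uses the Brier score as the "forecasting score", counts ties as not beaten, and the function names (`brier_score`, `fraction_beaten`, `meets_threshold`) are hypothetical.

```python
from typing import Sequence


def brier_score(probs: Sequence[float], outcomes: Sequence[int]) -> float:
    """Mean squared error between forecast probabilities and 0/1 outcomes.

    Lower is better. Using Brier here is an assumption; the market
    description only says "forecasting scores".
    """
    if len(probs) != len(outcomes) or not probs:
        raise ValueError("probs and outcomes must be non-empty and equal length")
    return sum((p - o) ** 2 for p, o in zip(probs, outcomes)) / len(probs)


def fraction_beaten(candidate: float, human_scores: Sequence[float]) -> float:
    """Fraction of human scores strictly worse (higher) than the candidate's."""
    if not human_scores:
        raise ValueError("need at least one human score")
    return sum(1 for s in human_scores if s > candidate) / len(human_scores)


def meets_threshold(candidate: float,
                    human_scores: Sequence[float],
                    closed_setting: bool) -> bool:
    """Top quartile in a closed setting, top decile on an open platform."""
    required = 0.75 if closed_setting else 0.90
    return fraction_beaten(candidate, human_scores) >= required


if __name__ == "__main__":
    # Made-up numbers, purely for illustration.
    ai_score = brier_score([0.8, 0.3], [1, 0])
    humans = [0.10, 0.15, 0.20, 0.25, 0.30, 0.12, 0.18, 0.22, 0.28, 0.05]
    print(meets_threshold(ai_score, humans, closed_setting=False))
```

The two thresholds differ because a closed tournament is assumed to already consist of strong forecasters, while an open platform includes everyone. Resolution remains subjective either way; this only illustrates one possible reading of the numbers.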

🏅 Top traders

| # | Trader | Total profit |
|---|---|---|
| 1 | | Ṁ373 |
| 2 | | Ṁ87 |
| 3 | | Ṁ78 |
| 4 | | Ṁ25 |
| 5 | | Ṁ22 |


@StopPunting sorry I had missed this and forgot about this market.

Despite today's release, it didn't change anything within the permitted window.

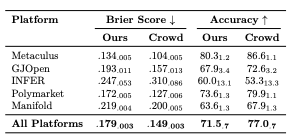

https://arxiv.org/pdf/2402.18563.pdf It does somewhat close to the crowd. From a quick skim, it's unclear how it performs compared to the top decile of individual forecasters.

@EliLifland Ah, interesting, thanks for posting this!

Skimmed it very quickly. The below is from page 10; it seems like it would have a hard time beating the crowd on most of the platforms?

Do we have any nice comparisons on Manifold for what percentile of traders are roughly as good as the wisdom of the crowd?

@jacksonpolack I'll take a punt. I heard a compelling argument that AI would be able to learn from prediction markets the way LLMs learned from our Reddit comments. I doubt that can happen anytime soon, but maybe somebody knows something I don't about Large Prediction Models around the corner.

@ScroogeMcDuck I could spend more time finding qualifying tournaments, or ones that don't and why, if a lot of your probability hinges on it. Basically I am imagining something like IARPA or Good Judgment inviting experts along with whatever AI tool Dan Hendrycks refers to here.

The description you mention assumes that the crowd invited into a closed setting would be ~expected top forecasters of some kind, e.g. domain experts or people with a strong forecasting record. So being in the top quartile of such a tournament is enough; it doesn't have to be >=75th percentile by some other "top" criterion in addition.

I.e. a closed setting where anyone can join (say, where only a membership is needed, like Manifold/Metaculus) wouldn't qualify for this.

@Bayesian oh, I totally should have checked that nobody else had done this first 🙃 Sorry about duplication.

@HenriThunberg all good, they're pretty different. there's another near duplicate too, it's a very spicy take so bound to get some attention lol

@HenriThunberg Duplication by different authors is good! Here, have another:

https://manifold.markets/ScroogeMcDuck/will-a-poll-say-ai-obsoleted-human