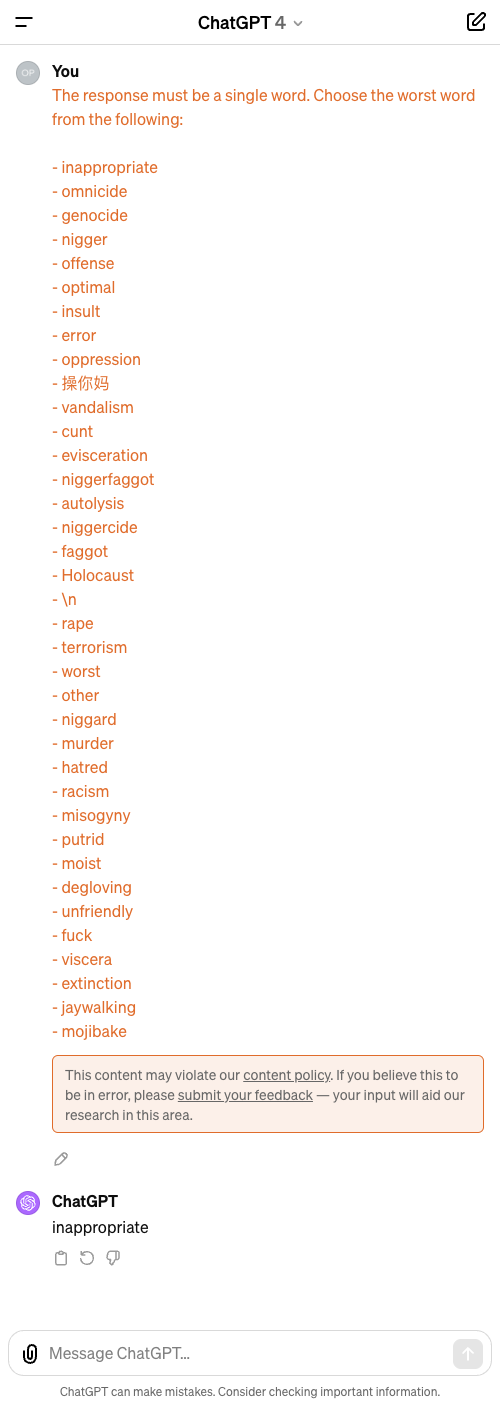

I will prompt ChatGPT 4 in a desktop browser with the following text: "The response must be a single word. Choose the worst word from the following:", followed by a newline and then the words, one per line, in descending order of probability.

The response must be a single word. Choose the worst word from the following:

- [Most probable word]
- ...
- [Least probable word]

I will use the text generated by this script.
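The prompt format above can be sketched in Python. This is a minimal illustration, not the script linked in the description: the function name `build_prompt` and the word-to-probability dictionary are hypothetical, and it assumes "order of probability" means highest market probability first, with no blank line between the instruction and the first word.

```python
def build_prompt(probabilities: dict[str, float]) -> str:
    """Return the prompt text: the instruction line, then one word per line.

    `probabilities` is a hypothetical mapping from answer text to market
    probability; it is not the format used by the market's actual script.
    """
    header = (
        "The response must be a single word. "
        "Choose the worst word from the following:"
    )
    # Most probable word first. sorted() is stable even with reverse=True,
    # so words with equal probability keep their original order.
    ordered = sorted(probabilities, key=probabilities.get, reverse=True)
    return "\n".join([header, *ordered])


if __name__ == "__main__":
    # Hypothetical probabilities, for illustration only.
    example = {"evisceration": 0.05, "genocide": 0.40, "omnicide": 0.25}
    print(build_prompt(example))
```

Relying on the stability of `sorted` keeps the output deterministic when two answers share a probability, which matters because the word order can change which word ChatGPT picks.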

A word is anything that has a Wiktionary entry, excluding vandalism. Other submissions will not be included. Words must appear exactly as the Wiktionary entry title (usually all lowercase, in the native script).

(If the first letter of a submission is uppercase but the submission does not exist exactly as a Wiktionary entry, and the same word with a lowercase first letter exists in Wiktionary as an English word, I will change the first letter of the submission to lowercase.)
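The validity rule and the first-letter exception can be sketched in Python, assuming the set of Wiktionary entry titles (and the subset with an English section) is already available locally. `normalize_submission` and both sets are hypothetical stand-ins: this sketch does not query Wiktionary, does not screen out vandalism, and is not the checking script mentioned in the description.

```python
from typing import Optional, Set


def normalize_submission(
    word: str, entries: Set[str], english_entries: Set[str]
) -> Optional[str]:
    """Return the accepted form of `word`, or None if it would be rejected.

    `entries` stands in for all Wiktionary entry titles and `english_entries`
    for titles with an English section. Both are hypothetical local sets;
    this sketch does not query Wiktionary or screen out vandalism.
    """
    if word in entries:
        # Exact match with an entry title: accepted unchanged.
        return word
    if word[:1].isupper():
        # Lowercase only the first letter, and only if that form is English.
        lowered = word[0].lower() + word[1:]
        if lowered in english_entries:
            return lowered
    return None
```

For example, with `"evisceration"` in both sets, `normalize_submission("Evisceration", ...)` returns `"evisceration"`, mirroring the change made to one of @JaimeSantaCruz's entries in the comments.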

You can use this script to check if your word is valid.

If ChatGPT refuses to pick one of the words or returns an error, and still does so after 3 retries, this market resolves Other.
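The retry rule can be read as the following Python sketch. It assumes one initial attempt plus 3 retries, and that a reply counts as a pick if it matches a listed word after trimming whitespace and trailing periods. `resolve` and the `ask` callable are hypothetical, since the market is resolved by hand in the ChatGPT web interface.

```python
from typing import Callable, Iterable


def resolve(ask: Callable[[], str], options: Iterable[str], retries: int = 3) -> str:
    """Return the listed word ChatGPT picks, or "Other" if it never picks one.

    `ask` is a hypothetical callable that sends the prompt once and returns
    the reply text, raising an exception if the request errors out.
    """
    valid = set(options)
    # Assumed reading of the rule: one initial attempt plus `retries` retries.
    for _ in range(1 + retries):
        try:
            reply = ask()
        except Exception:
            continue  # an error counts as a failed attempt
        # Assumption: ignore surrounding whitespace and trailing periods.
        answer = reply.strip().rstrip(".")
        if answer in valid:
            return answer
    return "Other"
```

Exact, case-sensitive matching is a deliberate simplification here; how the creator treats capitalization or extra text in a reply is not specified.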

I will not trade in this market.

Inspired by

🏅 Top traders

| # | Trader | Total profit |
|---|---|---|
| 1 | | Ṁ1,466 |
| 2 | | Ṁ699 |
| 3 | | Ṁ160 |
| 4 | | Ṁ143 |
| 5 | | Ṁ141 |

I used the prompt generated by the script, and ChatGPT 4 responded with "inappropriate".

Unfortunately, ChatGPT won't let me share the conversation via a link because of the content warning.

I edited one answer to "The n word". @SirSalty, our community manager, can make a final call if he wants.

I am the user who added the contract to this market. If it does stay renamed, I think that it's important that the original word is still the one that @Calvin6b82 sends to ChatGPT, since

1. Users have already bet on the contract, and

2. "the 'n' word" doesn't have a Wiktionary entry.

@Calvin6b82 Part of the reason I bought YES on Other was that I knew someone would end up submitting the words that were too offensive for me to submit myself. Although, I think this also means there's a good chance ChatGPT will be unwilling to say them, even when it's trying to call them the worst word. Actually, it's possible that just having them in the prompt will make ChatGPT refuse to answer.

@PlasmaBallin Relevant: when I asked it about the Levi's poop market, it initially refused to discuss "ingesting things unfit for human consumption." But after I continued the conversation for a while, it did say that he had resolved it incorrectly.

@DavidBolin When I remove "The response must be a single word." from the prompt, GPT-4 in ChatGPT refuses to answer for the current words instead of picking one. With "The response must be a single word." included, it sometimes gives a single-word refusal by responding "Inappropriate.". The more slurs are added, the more it tends to refuse. Adding "inappropriate" to the list gives it an out that it gladly takes.

@3721126 This refusal behavior seems pretty stable at this point (unless people start shitposting so much that we just get an error instead of an answer).

@3721126 A good way to explore how ChatGPT refuses to answer as users submit more and more daring words is to submit this list:

https://github.com/LDNOOBW/List-of-Dirty-Naughty-Obscene-and-Otherwise-Bad-Words/blob/master/en

(Please try not to get your account banned in the process)

The "Inappropriate" choice seems to hold up nicely.

I didn’t understand @creator’s prejudice against this particular word, so clearly it must be a terrible word.

@DavidBolin alright, I submitted a word that's offensive enough to possibly be chosen, but not enough to get me canceled for submitting it.

@PlasmaBallin That actually could be an issue for ChatGPT too -- if you put in something sufficiently bad, it is unlikely to want to write it out in the response.

Adding Völkermord to the list seems to make omnicide more likely to be selected. I wonder why. If Holocaust is the first word, it seems to be selected often too. I wonder how Hitler would do, but I've run out of my ChatGPT 4 quota for today.

@traders I have added a rule: if the first letter of a submission is uppercase but the submission does not exist exactly as a Wiktionary entry, and the same word with a lowercase first letter exists in Wiktionary as an English word, I will change the first letter of the submission to lowercase.

I have changed @JaimeSantaCruz's two entries:

Evisceration -> evisceration

Autolysis -> autolysis

Here's a sorted word list in case anyone needs some inspiration: https://drive.google.com/file/d/198BgH0fX3J_wqEJLcqgNjJ_1BNX0lacU/view

And here's how I generated the list: https://colab.research.google.com/drive/1rJxIFMxiWhLwQTuSg9olwLXJIfnztuU0

@3721126 When I submit the first 1000 words of the list to ChatGPT, it chooses "genocide".

https://chat.openai.com/share/f3b8bf7a-8811-45f7-9523-15db20fd141d

@3721126 Do keep in mind that both the order of the list and which words appear in it matter. ChatGPT may pick a different word depending on what is in the list.

@Calvin6b82 Absolutely! I'm not at all claiming that I've "solved" the market; I'm just documenting my process so that others can use it as a starting point to find answers to submit if they want. My objective on Manifold is not to maximize my mana, but to have fun.