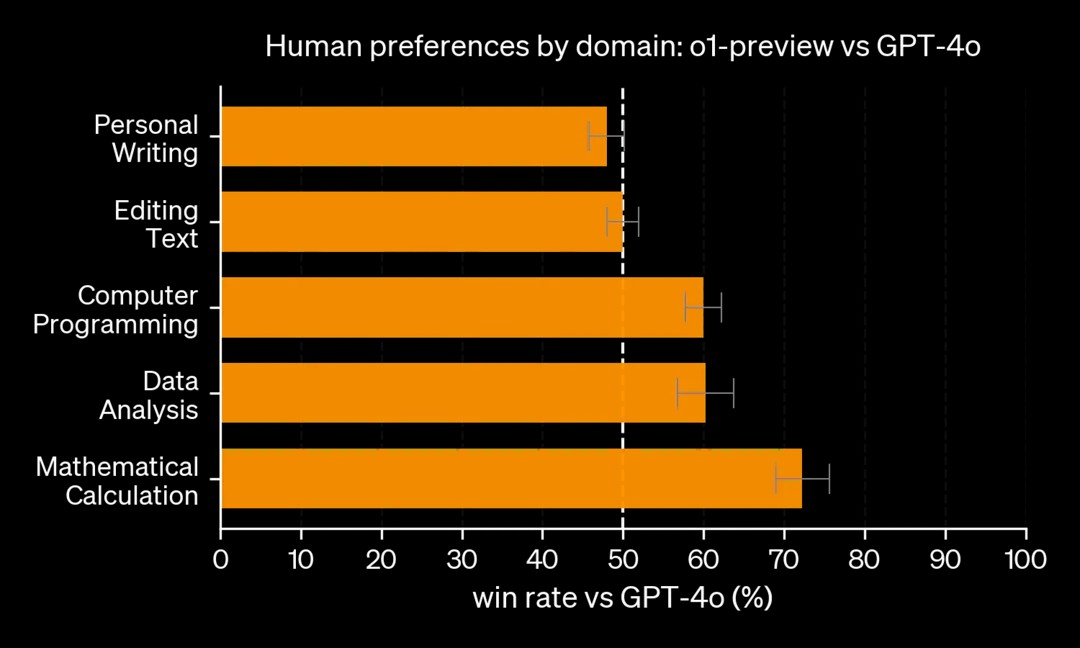

While o1-preview outperformed previous models in math and programming, it wasn't preferred over GPT-4o in the "personal writing" domain:

However, it seems like models that think before they respond should be able to improve their writing significantly, in principle. By analogy, if I had to write a poem off the top of my head, it would be much worse than a poem that I took an hour to write. It seems that o1 and o3 are primarily trained to solve problems with a definitive correct answer, but I don't think this will be the case forever.

For the purposes of this question, "large reasoning models" (LRMs) are LLMs that are trained to generate intermediate outputs that are not part of the final answer. This could include chain-of-thought training or "continuous thought" techniques like Meta's COCONUT.

Creative writing could include poetry, short stories, novel outlines, comedy routines, and similar things.

Things I might look for to determine a resolution:

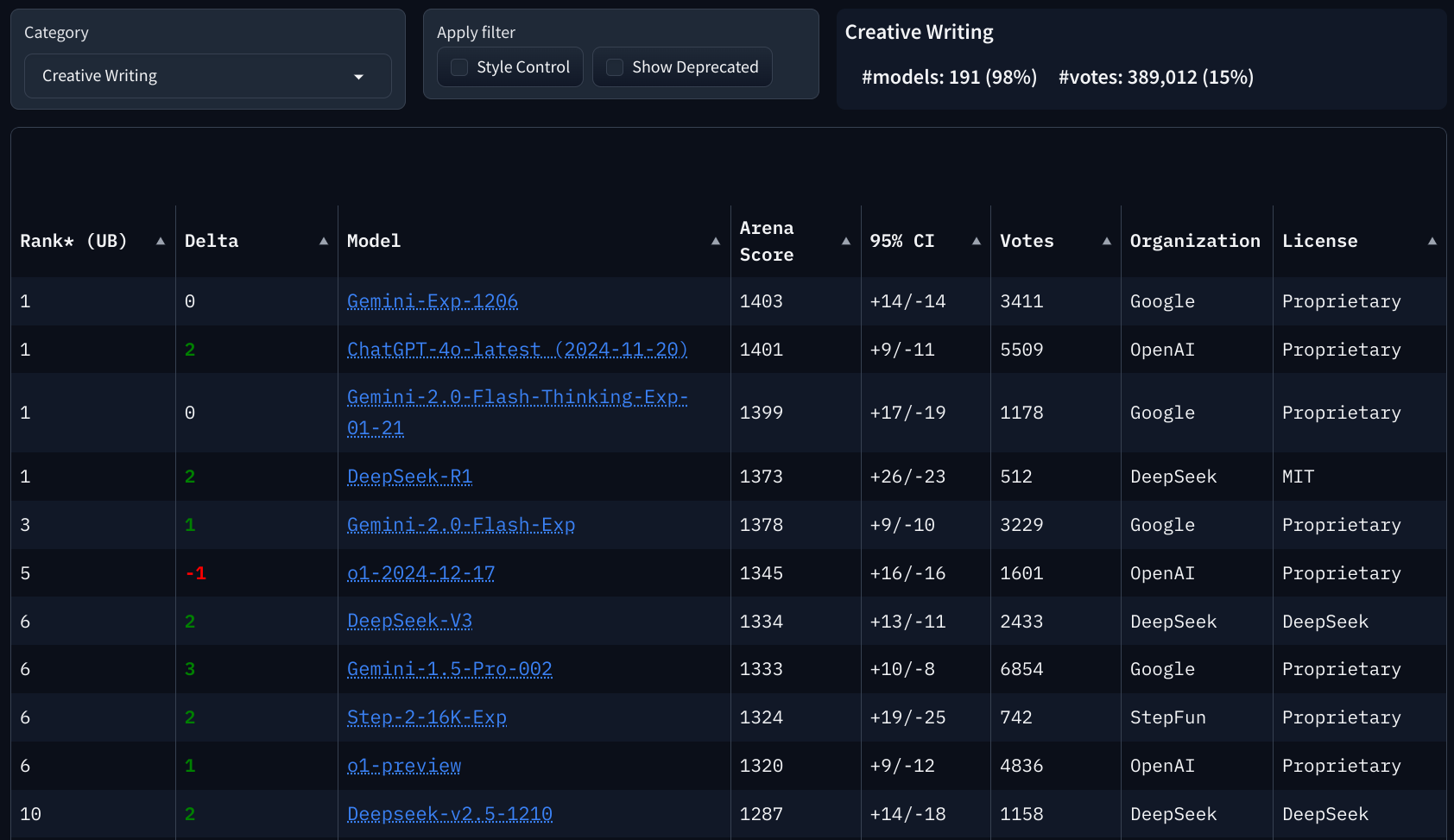

The "Creative Writing" category in https://lmarena.ai/?leaderboard. If it's clear that LRMs outperform other models, the market will likely resolve YES. However, if it's simply the case that all "serious" models are LRMs and the "base LLM" version of those models isn't even available, then this wouldn't count unless I think the reasoning is really the cause of the performance gap.

If an LRM has the option to modulate the length of its reasoning, I'd look for metrics showing that increased test-time compute corresponds to a marked increase in response quality in the creative writing domain.

In the absence of good metrics, I'd rely on general vibes based on my own experience and that of others.

Ultimately, I'm thinking about a scenario where LRMs are clearly better for creative writing than traditional LLMs, in the same way that o1 is clearly better than GPT-4o at (well-specified) coding problems. If that bar hasn't been reached, this market should resolve NO.

I won't trade in this market.


@MingCat Yeah, I also think this is probably true. DeepSeek r1 is really good at certain writing tasks in my experience. This also checks out when you look at the LMSYS leaderboard for creative writing:

I'm not in a rush to resolve this market, but I think it's quite likely to resolve YES. I'll wait until there's a broader consensus about this.