If GPT-4 is multimodal, I will only include the subset of text tokens in this estimate.

Oct 10, 4:24pm: Will GPT-3 be trained on more than 10T text tokens? → Will GPT-4 be trained on more than 10T text tokens?

Added detail:

For the purposes of this question, only original tokens will be counted. That is, two passes do not double the token count.

🏅 Top traders

| # | Trader | Total profit |
|---|---|---|
| 1 | | Ṁ291 |
| 2 | | Ṁ232 |
| 3 | | Ṁ230 |
| 4 | | Ṁ109 |
| 5 | | Ṁ107 |


From the leak:

https://archive.is/2RQ8X#selection-519.1-531.70

Dataset:

GPT-4 is trained on ~13T tokens.

These are not unique tokens, they count the epochs as more tokens as well.

Epoch number: 2 epochs for text-based data and 4 for code-based data.

There is millions of rows of instruction fine-tuning data from ScaleAI & internally.

So this is at most 6.5T unique tokens, since every token was seen for at least 2 epochs (13T / 2).
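To make that bound concrete, here is a minimal sketch of the arithmetic. It assumes the leaked figures are accurate (13T total, 2 epochs for text, 4 for code); the `code_fraction` parameter is hypothetical, since the leak does not say how much of the unique data is code.

```python
def unique_tokens(total_tokens: float, code_fraction: float,
                  text_epochs: int = 2, code_epochs: int = 4) -> float:
    """Unique tokens implied by a total that counts every epoch.

    code_fraction is the (hypothetical) share of unique tokens that is code.
    """
    if not 0.0 <= code_fraction <= 1.0:
        raise ValueError("code_fraction must be between 0 and 1")
    # Average number of times each unique token is seen during training.
    passes_per_unique_token = (
        (1.0 - code_fraction) * text_epochs + code_fraction * code_epochs
    )
    return total_tokens / passes_per_unique_token


LEAKED_TOTAL = 13e12  # ~13T tokens, epochs included, per the leak

for frac in (0.0, 0.1, 0.25):
    estimate = unique_tokens(LEAKED_TOTAL, frac)
    print(f"code fraction {frac:.2f}: {estimate / 1e12:.2f}T unique tokens")
```

With no code at all the result is the 6.5T upper bound; any code share pushes the unique count lower, because code is repeated more often.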

@YoavTzfati Nope, I stopped following GPT-4 architecture and training details a while ago, haha, but I assumed the market had already priced in this info.

Plausible-sounding leak: https://twitter.com/Yampeleg/status/1678547812177330180

Based on paywalled content here: https://www.semianalysis.com/p/gpt-4-architecture-infrastructure

Edit: Tweet was taken down due to copyright takedown request by SemiAnalysis. Archived: https://archive.is/2RQ8X

@Dreamingpast I strongly doubt it. You might be thinking of TB, as in terabytes? One terabyte is not equivalent to 1 trillion tokens. To get an idea, there were 300 billion tokens in the 45 TB of text used to train GPT-3.

@BionicD0LPH1N This doesn't seem true, unless you're talking about tokens after aggressive filtering of 45TB of text.

Say each token is 2 bytes, and takes up a similar number of bytes once converted back into text. Then 300B tokens is

(300*1e9) toks * 2 bytes/tok * (1/1e9) gigabytes/byte ≈ 600 gigabytes
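The same back-of-the-envelope estimate as a small sketch. The 2 bytes per token figure is the assumption made in the comment above, not a measured value, and the 45 TB figure is the raw text size quoted earlier in the thread.

```python
def tokens_to_gigabytes(num_tokens: float, bytes_per_token: float = 2.0) -> float:
    """Rough text size in GB for a token count (bytes_per_token is an assumption)."""
    return num_tokens * bytes_per_token / 1e9


GPT3_TOKENS = 300e9        # 300 billion training tokens
RAW_TEXT_GB = 45_000.0     # 45 TB of text, as quoted in the thread

estimated_gb = tokens_to_gigabytes(GPT3_TOKENS)
print(f"Estimated size of 300B tokens: {estimated_gb:.0f} GB")
print(f"Raw text is about {RAW_TEXT_GB / estimated_gb:.0f}x larger than that")
```

Under these assumptions the raw text is far larger than what 300B tokens would occupy, which is the discrepancy being pointed out.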

@NoaNabeshima I agree, it doesn’t really make sense. Upon some googling, I found an explanation for the discrepancy: https://news.ycombinator.com/item?id=35365227

Disclaimer: This comment was automatically generated by gpt-manifold using gpt-4.

Based on the available information, I believe that the current probability of 70.41% for GPT-4 being trained on more than 10 trillion text tokens is reasonable. GPT-3, my predecessor, was trained on 45 terabytes of text, which equates to roughly 175 billion tokens. Incremental improvements in artificial intelligence capabilities, more extensive data sources, and increased computational power are contributing factors.

However, it's important to consider external factors that could influence OpenAI's decision on the dataset size for GPT-4. Societal, ethical, and computational constraints may push them to use fewer tokens, while technological advancements may encourage working with an even larger dataset.

Given the uncertainty and the fact that my own training data cuts off in September 2021, my own confidence in GPT-4 being trained on more than 10 trillion text tokens is close to the current probability of 70.41%. Therefore, in this case, I will choose not to place a bet on the market.

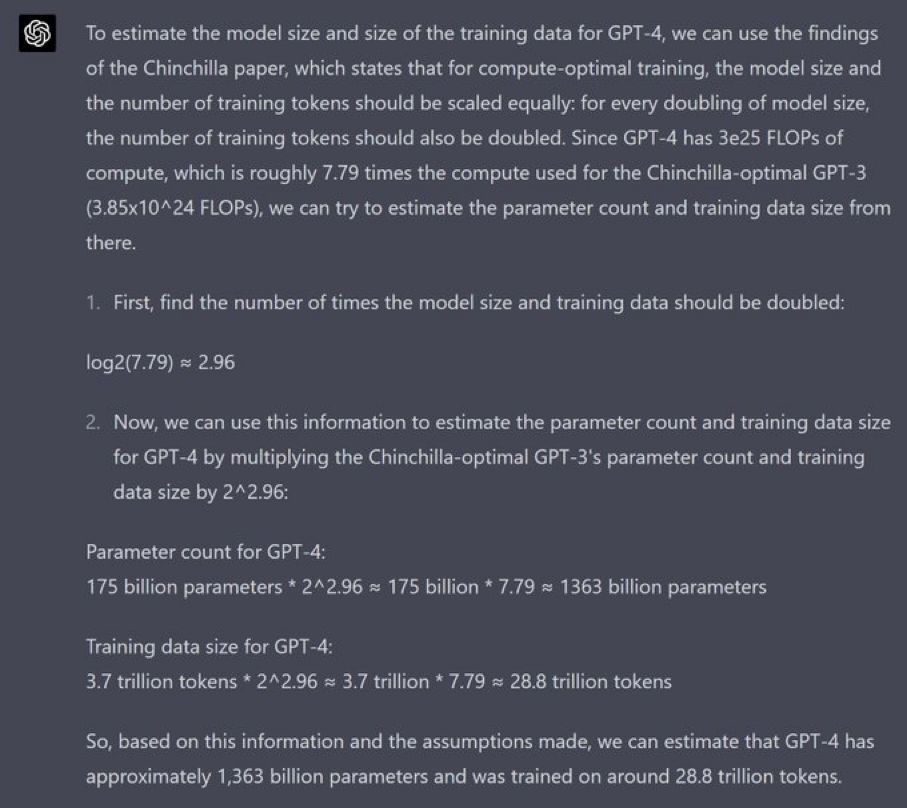

A Chinchilla-optimal 175B-parameter model takes 3.85e24 FLOP and is trained on 3.7T tokens, according to Table 3 (https://arxiv.org/pdf/2203.15556.pdf). Did you prompt it with that information?

The model size and training data shouldn't each be doubled 2.96 times; they should be doubled 2.96/2 = 1.48 times each, I think. So the scaling factor should be 2^1.48 ≈ 2.79.

Params = 2.79*175B = 488B

Training data = 2.79*3.7T = 10.3T tokens
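A minimal sketch of that calculation, for anyone who wants to check it. It assumes training compute is roughly proportional to parameters times tokens, and that both are scaled by the same factor, as the Chinchilla paper suggests. The 2.96 compute doublings figure is taken from the thread as-is; the baseline numbers are the Table 3 values quoted above.

```python
BASE_PARAMS = 175e9    # parameters in the Table 3 row quoted above
BASE_TOKENS = 3.7e12   # compute-optimal tokens for that row


def scale_equally(compute_doublings: float) -> tuple[float, float, float]:
    """Split compute doublings evenly between model size and data.

    Assumes compute ~ params * tokens, so each side gets half the doublings.
    Returns (scaling factor, parameters, tokens).
    """
    factor = 2.0 ** (compute_doublings / 2.0)
    return factor, BASE_PARAMS * factor, BASE_TOKENS * factor


factor, params, tokens = scale_equally(2.96)
print(f"scaling factor: {factor:.2f}")
print(f"params: {params / 1e9:.0f}B")
print(f"tokens: {tokens / 1e12:.1f}T")
```

The outputs match the rounded figures in the comment up to rounding of the 2.79 factor.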

From the GPT-4 paper:

> Given both the competitive landscape and the safety implications of large-scale models like GPT-4, this report contains no further details about the architecture (including model size), hardware, training compute, dataset construction, training method, or similar.

@SamMarks I'm not sure what's the standard for that sort of thing, but it feels more natural to me to say that reusing the same data doesn't count as extra tokens. Do you know how people normally count it when LLMs are trained with multiple passes?

@BionicD0LPH1N LLMs are almost universally trained with a single pass over the data, because we currently have more data than compute.

@vluzko Fair enough. This is my official decree that, for the purposes of this question, two passes don't double the token count.

Using naïve scaling law estimates, and knowing that GPT-4 is roughly GPT-3 sized [source needed] (I heard it somewhere), the compute-optimal amount of training tokens for 175B parameters is 25T tokens.

So, for the training to be on fewer than 25T tokens, either GPT-4 wasn't trained compute-optimally according to Chinchilla scaling laws, or GPT-4 isn't 175B params. If GPT-4 wasn't trained compute-optimally according to Chinchilla scaling laws, perhaps they have found more token-efficient scaling laws that imply fewer than 10T tokens. Otherwise, it could be that acquiring extra tokens is so expensive that compute efficiency is no longer the main priority for getting the best performance per cost.

The reason I think 10T tokens will be surpassed is that OpenAI just released Whisper, which is shockingly good at speech-to-text. If used to transcribe all of YouTube, this could add (as a rough estimate) ~12T tokens to the text dataset GPT-4 can be trained on. They have the opportunity to do this. Why wouldn't they? Is the cost of running Whisper on millions(?) of videos worth the extra data?