This market matches General Capabilities: Epoch Capabilities Index from the AI 2026 Forecasting Survey by AI Digest.

See other manifold questions here

Resolution criteria

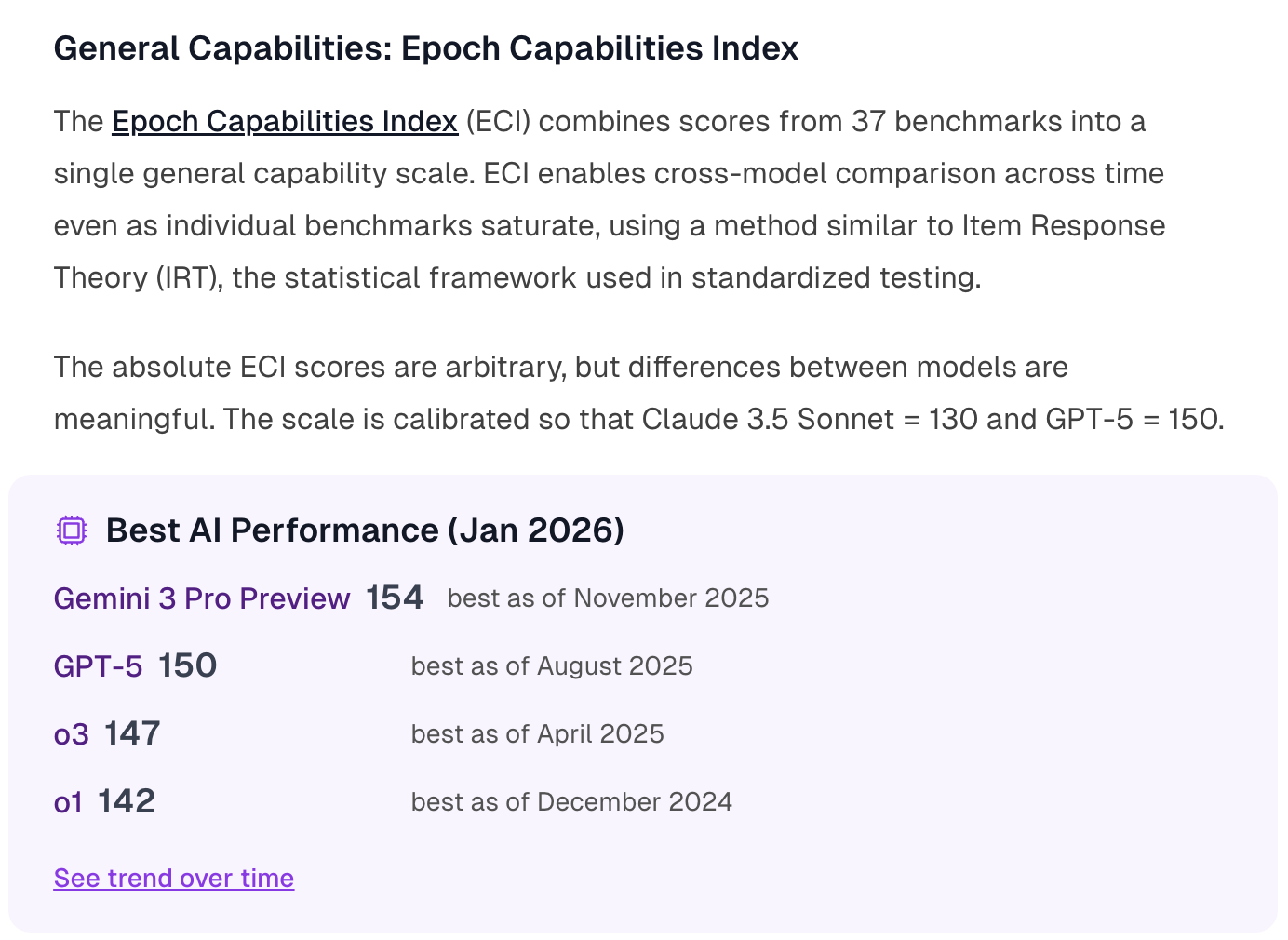

Resolves to the highest ECI score reported by Epoch AI as of December 31, 2026.

If Epoch revises the ECI methodology, re-normalizes scores, or updates the benchmark set, the resolver will use the most recent official ECI scores as reported by Epoch at year-end. If the scores change their meaning, the resolver may adjust them as deemed appropriate to be comparable to the current scores, or set this question's resolution to be ambiguous.

Which AI systems count?

Any AI system counts if it operates within realistic deployment constraints and doesn't have unfair advantages over human baseliners.

Tool assistance, scaffolding, and any other inference-time elicitation techniques are permitted as long as:

No unfair and systematic advantage. There is no systematic unfair advantage over the humans described in the Human Performance section (e.g. AI systems are allowed to have multiple outputs autograded while humans aren't, or AI systems have access to the internet when humans don't).

Human cost parity. Having the AI system complete the task does not use more compute than could be purchased with the wages needed to pay a human to complete the same task to the same level. Any additional costs incurred by the AIs or humans (such as GPU rental costs) are included in the parity estimation.

If there is evidence of training contamination leading to substantially increased performance, scores will be accordingly adjusted or disqualified.

The PASS@k elicitation technique (which automatically grades and chooses the best out of k outputs from a model) is a common example that we do not accept where it would constitute an unfair advantage. Since ECI aggregates 37 underlying benchmarks, PASS@k restrictions apply at the level of each underlying benchmark as appropriate to that benchmark's methodology.

If a model is released in 2026 but evaluated after year-end, the resolver may include it at their discretion (if they think that there was not an unfair advantage from being evaluated later, for example the scaffolding used should have been available within 2026).

Eli Lifland is responsible for final judgment on resolution decisions.

Human cost estimation process:

Rank questions by human cost. For each question, estimate how much it would cost for humans to solve it. If humans fail on a question, factor in the additional cost required for them to succeed.

Match the AI's accuracy to a human cost total. If the AI system solves N% of questions, identify the cheapest N% of questions (by human cost) and sum those costs to determine the baseline human total.

Account for unsolved questions. For each question the AI does not solve, add the maximum cost from that bottom N%. This ensures both humans and AI systems are compared under a fixed per-problem budget, without relying on humans to dynamically adjust their approach based on difficulty.

Buckets are left-inclusive: e.g., 160-165 includes 160.0 but not 165.0.