This question resolves positively if some person or group credibly announces the existence of an AI that can generate appropriate videos at least 10 seconds long from arbitrary prompts (similar to the way DALL-E generates images from arbitrary prompts) and provides examples.

This is question #50 in the Astral Codex Ten 2023 Prediction Contest. The contest rules and full list of questions are available here. The market will resolve according to Scott Alexander’s judgment, as given through future posts on Astral Codex Ten.


@oh What evidence is that? Not one of these seems to generate anything more than a still frame morphing in some weird way unrelated to the prompt. Do you have an example of even a single successful prompt?

@cherrvak ehhhh

Prompt: A ninja on a skateboard does a flipkick, then tosses a banana to a chimpanzee in an army uniform and playing a banjo

Videos have to be at least 10 seconds long. It doesn’t have to be good at generating them. At the current pace of progress, it seems highly implausible that this won’t happen as defined. Even if the product isn’t ready for prime time, does that seem like it is stopping anyone these days? The only real risk is Scott saying ‘not good enough’, and I don’t see Scott saying that without a lot of justification. I bought Ṁ1000 of YES here to bring it to 85%; my fair value is definitely in the low 90s, similar to Metaculus.

I think this would count.

Text to video

Examples are 5-10 seconds long

Can take any text as input

Yeah, this should have been resolved “Yes” before the prediction contest even started. This very clearly counts for the resolution criteria as stated, these videos are 10 seconds long and generated from arbitrary prompts.

The only remaining uncertainty is whether Scott’s going to say “lol this one isn’t good enough” for some incoherent reason.

@MichaelRobertson That's not a production service, though. It might become one in the future, but currently I can't sign up and create videos the way one can sign up for DALL-E and create images.

@Kronopath You are seeing Meta AI's cherry-picked results on that web page. You cannot use this as a service, which makes it very different from DALL-E.

@21eleven Doesn’t matter, none of that is in the resolution criteria. All that’s required is if someone “credibly announces the existence of [such] an AI […] and provides examples”. No need for this to become a production service or provide non-cherry-picked examples.

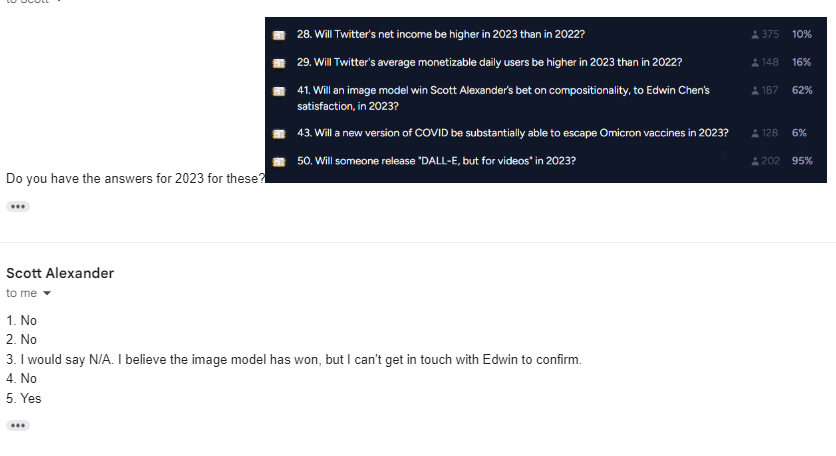

You know this market is high on hopium because it is above the related image compositionality market:

https://manifold.markets/ACXBot/41-will-an-image-model-win-scott-al?r=MjFlbGV2ZW4

@PhilWheatley And for the record I absolutely think this is gonna happen. It just didn’t happen last April. Maybe this April though…

@BTE I'm not really sure why Google is mentioned in the title and video, as it's not mentioned in the paper: https://tuneavideo.github.io

Sorry, don't have an answer for you.

@BTE @PhilWheatley This project was built on top of Google's "Nerfies" project (https://github.com/google/nerfies). They haven't released their code yet, but if it's just a custom dataset they trained against, it would make sense to call it "Google's AI".

@PhilWheatley I don't think that counts, as it requires an input video onto which a style is transferred, with the style transfer guided by some user text. A DALL-E for videos would work from a text prompt alone.

@21eleven Fair point. The question hadn't explicitly excluded a video input, so I thought I'd at least point it out.

This should resolve True, here is a paper:

https://arxiv.org/abs/2204.03458

And here is an example:

https://video-diffusion.github.io/

@MichaelRobertson I can tell you for certain this is not going to resolve YES based on something published last April. Those videos are more like GIFs than videos, as evidenced by the fact that they embedded dozens of them on a single webpage (though it still crashed almost immediately, so somewhere between GIF and video).

@BTE What does that even mean? What's the difference between a GIF and a video, other than one having the capability of sound?

@PhilWheatley Is that a serious question? How many videos do you watch in a constant 3 second loop?

@BTE Where in the definition of a video does it say it needs to be a certain length? A video is defined as "a recording of moving visual images", i.e. a series of images depicting motion, which is the same thing a GIF does; by definition they are the same.

@PhilWheatley Dude, if it were a video they would call it a video instead of a GIF. You aren’t going to convince me. And it’s irrelevant because, again, that paper was published last April. That clearly means @ScottAlexander doesn’t think GIFs are videos, and his opinion is the only one that matters.

@BTE Are you serious? Why do they call things GIFs instead of pictures, then? Or why are some videos MPEGs? If you are going to use a file format to define it, then there are no pictures on the internet, only JPEGs, GIFs, etc., and no videos, only AVIs, MPGs, etc. And to be clear, I'm not arguing in favour of that paper, just that GIFs and videos are not distinct from each other: both are a slideshow of images.

@BTE Thanks for the reminder; I sometimes forget it's fruitless to argue with the ignorant.