I'm reading about the Rabbit R1 and the Humane Pin, which seem to be like the ChatGPT smartphone app but with hooks into all your other apps and accounts so it can, like, magically order Ubers or book flights for you or whatever, via just talking to it. Adept.ai may be trying something similar.

I'm getting a lot of Google Glass vibes from these demos. (See my 2013 review -- pretty nostalgic!)

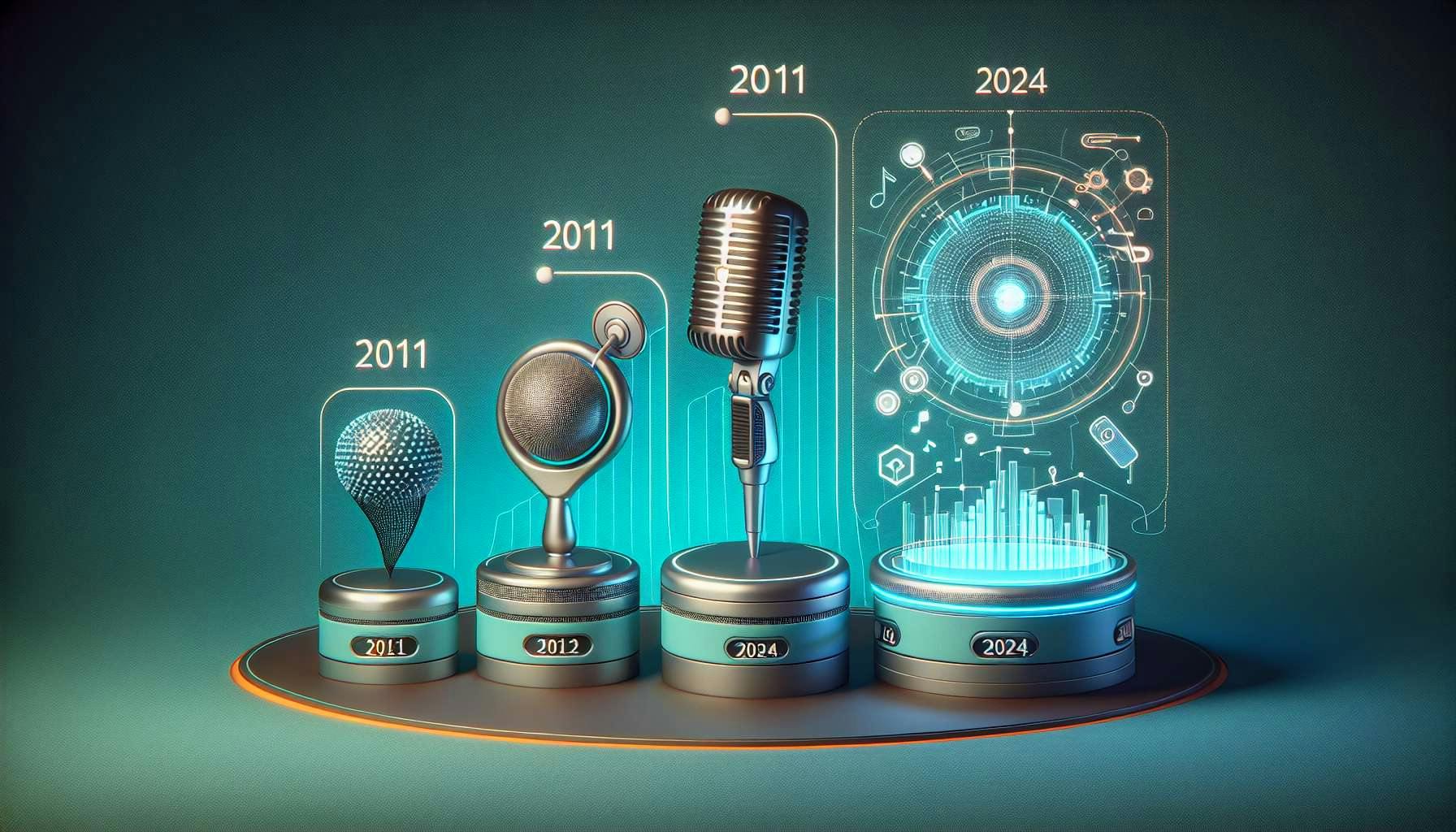

It's also reminding me of this skeptical 10-year prediction I made about voice interfaces in 2011:

> Negative prediction: Siri is a fad!
>
> That’s overstating it, of course. It will get better and become more common and more useful, but that’s it. I predict no progress on having a reasonable natural language conversation with a computer. Today’s Siri commercials will oversell even the voice interfaces of 2021. (I was similarly a bit down on IBM’s Watson earlier in 2011.)

And my verdict on the state of the art in 2021 (written early 2022):

> True. This one is debatable, I suppose, but here’s my argument. The progress on voice interfaces since the introduction of Siri has been extremely incremental, just as I predicted. I admit Alexa is super useful, but only for a handful of specific tasks (playing songs, setting kitchen timers, answering arithmetic problems or googling things relevant to out-loud conversation happening among humans). There’s never any useful back-and-forth and if you watch one of the early ads and then try asking similar things, much of the time it falls on its face, even now.

But it's believable to me that LLMs are about to finally get us to the level promised by the 2011 Siri commercials!

Now how to operationalize that for a prediction market?

So far I think the spirit of the question I'm aiming for is about a natural, human-like back-and-forth dialog for getting the computer to take actions for you in the real world. That's what a naive viewer would've inferred from the 2011 Siri commercials. (I know in retrospect those commercials weren't showing anything that wasn't technically possible, but, c'mon.)

If we don't find a way to pin this down better, then I'll resolve according to my judgment and not trade in this market myself.

Here's what I have tentatively so far:

> Will I personally use a back-and-forth, human-like voice interface to cause actions to be taken (e.g., ordering taxis, sending emails -- not just answering questions) on a daily basis (more than once per day on average) by the end of 2024?

Ideally I'd decouple this from my own choices so that the prediction is purely about the evolution of the technology. Ask questions before trading and we'll put together an FAQ with clarifications!


@Tulu Good idea. Got proposals? But also it's more than commands, it's about getting the computer to do things via back-and-forth dialog. Like in the commercial where the guy seems to be having a whole discussion with Siri about what to text people based on his calendar and other incoming texts.

@dreev Which commercial is that? The ones I could find only show simple things that already work, at least if the voice recognition is accurate.

@Tulu I have one linked in the market description. But watch it from the perspective of a layperson in 2011. From our perspective now it's like "yes, of course, you can ask Siri for the weather or to set timers or to convert grams to ounces -- that all works as advertised". What's deceptive about the ad is the cherry-picking and the cuts. The implication is that you can just ask it to do anything you could do with your thumbs, and that the device will reply in a natural back-and-forth.

I guess Siri/Alexa fans are not going to see it this way but I really think that for those who didn't know better, those commercials gave a drastically wrong impression of how capable and useful voice interfaces were, and still are, as of January 2024.

@dreev I get what you mean. I would even say that's one of the main reasons voice assistants haven't been very successful: you can't see what they can do.

I remember a video of Microsoft's vision for Cortana that shows something closer to the level you're thinking about.

https://www.youtube.com/watch?v=G_v5B_gYceM

It's very focused on business calendar scheduling, but it's more advanced than anything today's voice assistants let you do.

Maybe you could restrict the question to whether the assistant can do what you'd expect from a human in a narrow field. Today, for example, they're very good at media and smart-home controls. But for a more complex task, like finding a hotel you'd like, booking it, and remembering the booking for you, no virtual assistant comes close to a human assistant.

For posterity, the original suggestion from @TheoSpears for operationalizing this was the following:

> Will I, @dreev, interact with an LLM in order to cause an action to be taken (things like ordering a taxi or sending an email count, but not just answering a question) more than once a day on average by the end of 2024?

Or rather, that's the original version from the market description here. The original-original was this:

> @dreev will interact with an LLM in order to cause an action to be taken (e.g., ordering a taxi or sending an email counts, but not just answering a question) over 2x a day on average by this date.

I think this shouldn't be pinned to LLMs in particular (even though it'd surely involve them), and I'd like to decouple it more from my own personal choices and instead predict whether we'll get voice interfaces with a natural, human-like back-and-forth for causing real-world actions.

Though I'm torn about this. Using such voice interfaces myself on a daily basis is a reasonable proxy for whether the tech has reached that level.

Do you think it would be better to rename the market to "Will I interact with an LLM to manage external services multiple times per day (2024)?", or something, with the rest of the clarification in the description? I don't think the current resolution necessarily matches the current title, since it's possible for the voice interfaces to reach that level without you using them more than once per day on average, especially if you get sick, lose your voice, become homeless, die, etc.

@12c498e Agreed, I think. But I'd actually prefer to remove the "me" element of this and have it be a prediction about the evolution of the tech itself.

Theo's version might be most of the way there. I probably won't trade if we use that version, since it gives me discretion over how much to use such a thing or how hard to try to acquire one. But that also makes me less inclined to use that operationalization, since it's so contingent on my personal actions and choices.