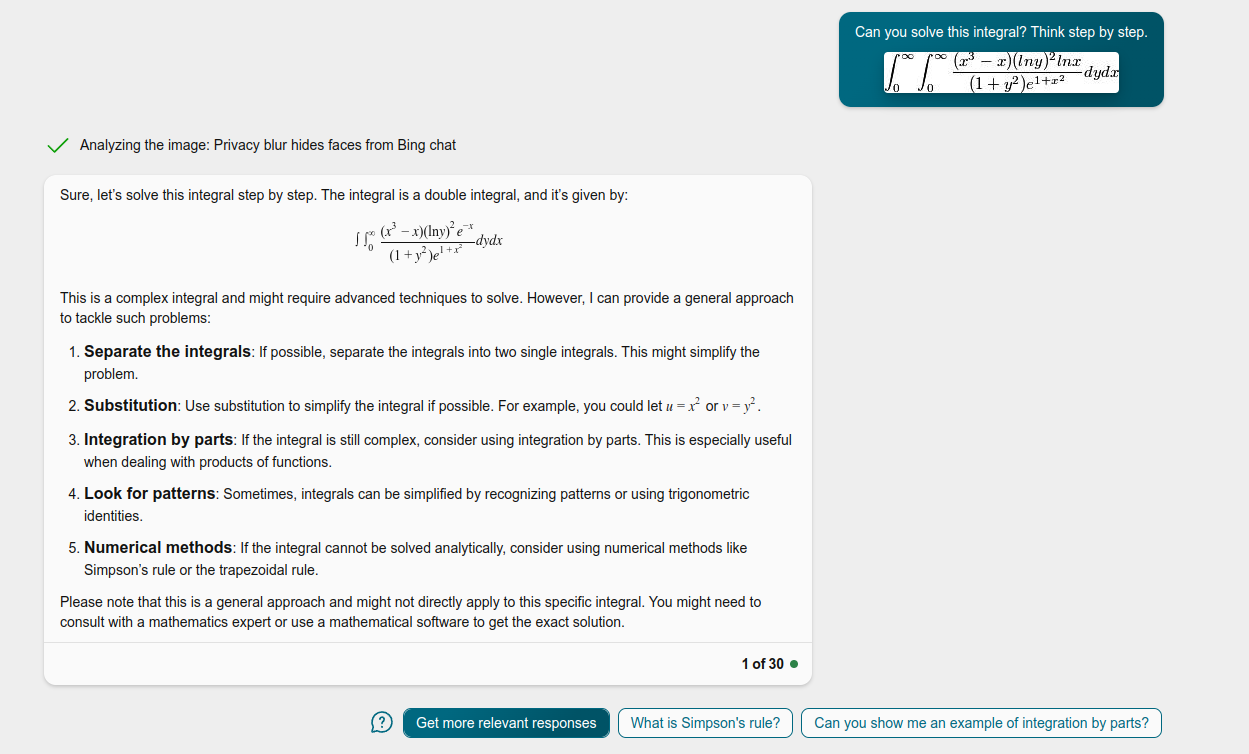

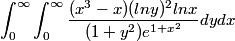

Will an LLM be able to solve this integral (input as an image) by the end of 2025 without using plugins? (Note: Wolfram Alpha can already solve it.)

I made this problem up myself, so it is unlikely to appear with its answer in any training corpus.

It has to be a publicly available, general-purpose LLM, not one that someone trained specifically on this problem to win the market.

To avoid conflicts of interest, I will not bet in this market.


@euclaise The intent of the question is to capture mainstream LLMs like GPT, Bard, Claude, Llama, etc., but to exclude fine-tunes of these that are made just to win this market in an uninteresting way. I guess there's a lot of grey area in between. What do you have in mind?

@euclaise I'm not familiar with those. When I search for MetaMath, it seems to be just a proof language, not an LLM. But in general, anything created before this market, or not created for the purpose of winning this market, should be fine.

Does it have to realize that you most likely meant $\ln x$ rather than $l \times n \times x$ from the picture, or is it okay if it interprets it as the latter (or pretends to, as a smart-aleck pedant)? :-)

@ArmandodiMatteo If it somehow failed to correctly interpret the notation, that would count as a failure as well. I doubt that would happen though.

@gigab0nus I assumed the LLMs would be trained on images as well. I'm not sure exactly how multimodal models work, but here's a page about them: https://bdtechtalks.com/2023/03/13/multimodal-large-language-models/
