Background: Large language models like GPT-3 and GPT-4 have demonstrated considerable aptitude in multiple domains, including math and programming tasks. Project Euler offers a range of computational problems intended to require creative problem-solving and programming skills. Solving these problems could be a significant benchmark for assessing a model's reasoning capabilities.

Will the best publicly available LLM at the end of 2025 be able to solve more than 5 of the first 10 Project Euler problems published in 2026, when given up to three attempts for each problem?

Resolution Criteria:

This question will resolve to "YES" if the best publicly available LLM, as defined below, successfully solves more than 5 of the first 10 Project Euler problems published in 2026.

For each problem, the LLM will be given up to three attempts to provide a correct solution, with a maximum time limit of 3 hours of 'thinking' in total across all attempts. If there is a network error while the model is thinking, any partial response will be disregarded and not counted towards the maximum of three attempts. The time limit of 3 hours is mostly intended to cover cases in which it is difficult to delineate between "attempts" from the model.

A "YES" resolution must be corroborated by my own documentation of the model's successful solutions to the Project Euler problems.
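
As a rough illustration of how the attempt and threshold rules above could be tallied, here is a minimal Python sketch. It is not the procedure actually used for resolution. The names `Attempt`, `problem_solved`, and `resolves_yes` are hypothetical, and the sketch assumes that an attempt interrupted by a network error counts towards neither the three-attempt limit nor the 3-hour limit (the criteria only state the former).

```python
from dataclasses import dataclass

MAX_ATTEMPTS = 3          # up to three attempts per problem
MAX_THINKING_HOURS = 3.0  # total 'thinking' time per problem, across attempts
PROBLEMS_CONSIDERED = 10  # the first 10 problems published in 2026
THRESHOLD = 5             # YES requires strictly more than 5 solved


@dataclass
class Attempt:
    correct: bool
    thinking_hours: float
    network_error: bool = False


def problem_solved(attempts):
    """Return True if a correct answer appears within the attempt and time limits."""
    counted = 0
    hours = 0.0
    for attempt in attempts:
        if attempt.network_error:
            # Assumption: interrupted attempts are disregarded entirely.
            continue
        if counted >= MAX_ATTEMPTS:
            break
        hours += attempt.thinking_hours
        if hours > MAX_THINKING_HOURS:
            break
        counted += 1
        if attempt.correct:
            return True
    return False


def resolves_yes(attempts_per_problem):
    """attempts_per_problem: one list of Attempt objects per problem, in publication order."""
    considered = attempts_per_problem[:PROBLEMS_CONSIDERED]
    solved = sum(problem_solved(attempts) for attempts in considered)
    return solved > THRESHOLD
```

Under these assumptions, six solved problems out of ten gives `resolves_yes(...) == True`, while exactly five gives `False`, which matches the "more than 5" wording.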

Best Publicly Available LLM: The "best" publicly available LLM is defined as either:

The public ML model that scores the highest on the MATH benchmark by Hendrycks et al. (2021)

Or the public ML model that, according to my own judgement, is considered the best at reasoning, in the case that I either consider the MATH benchmark to be outdated by the end of 2025, or I consider the MATH benchmark to be a poor measure of reasoning compared to other existing alternatives at that time.

Testing Method: I will personally test the model on Project Euler problems and will attempt to prompt it appropriately for solutions. I will attempt to only provide clarifications on the purpose and meaning of the questions to the model, rather than introducing hints that it could use to solve the problems. I will attempt to design the prompt to give the model the best chance of solving each problem, within reason, rather than intentionally handicapping the model. This prompting may be collaborative with the Manifold community, and may incorporate feedback from the comments section of this Manifold question to refine my prompting strategy.

The question will resolve to "N/A" if Project Euler problems are discontinued or the difficulty of Project Euler problems alters dramatically in my sole judgement.

@BoltonBailey The creator does not seem to be active. Anyways, it might be better to evaluate problems as they come out instead of waiting 10 weeks to evaluate. Matharena is evaluating models on Project Euler with slightly different rules. https://matharena.ai/?view=problem&comp=euler--euler

If @MatthewBarnett is not active, then this market could probably resolve yes based on matharena's evaluations (GPT-5.2 solves on all 4 runs for each of the last 5 problems, which were all released in 2026).

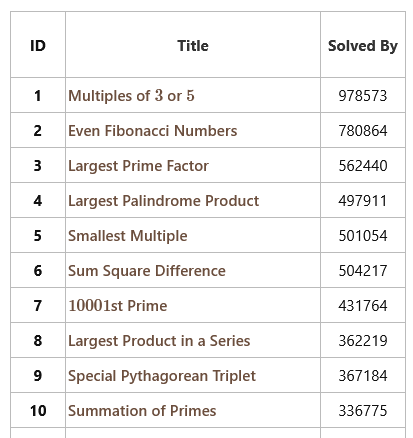

You mean five of these?

Did you check if the already existing most capable (in math and programming) version of GPT can solve them? If so, what was the result?

@Metastable I'm referring to the most recent problems (at the beginning of 2026) rather than the most popular. I have checked and GPT-4 is not generally capable of solving these problems.

@MatthewBarnett Ah, thanks for clarifying. Better to change "5 of the first 10" for "5 of the 10 most recent".

@Metastable Actually I just changed the wording to "more than 5 of the first 10 problems published in 2026" since I think that's the most unambiguous way to say this. Let me know if you still disagree though.