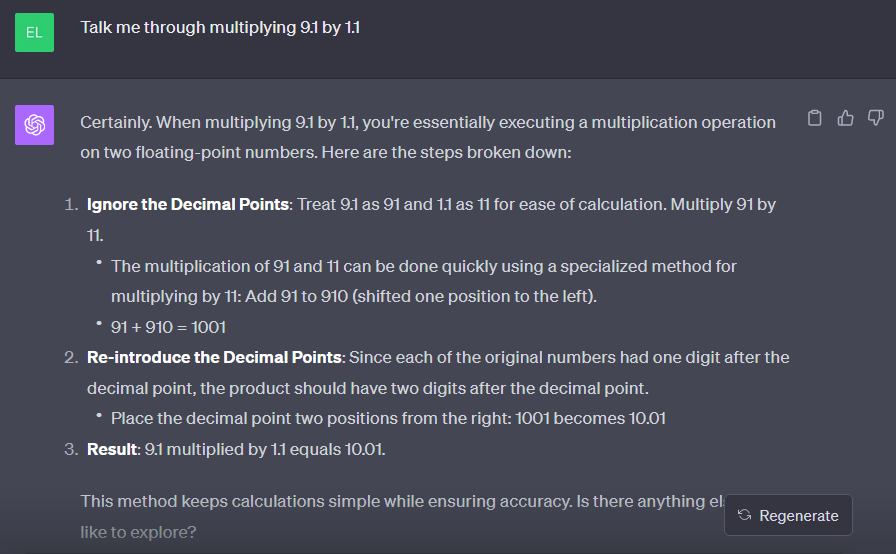

Asking a calculator does not count; it must do the computation natively. It must be capable of multiplying any two numbers and getting the correct result with 100% accuracy, with input length and output precision bounded only by its context window. (Inputs are in decimal notation. If it fails on being asked to multiply pi · e or 1/3 · 5/2, that's ok.)

Prompt engineering is allowed, but it has to be a single prompt that always works for any combination of numbers.
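As a rough illustration of what "correct result with 100% accuracy" could mean in practice, here is a minimal sketch of an exact checker. The function name `is_exact_product` and the strict decimal-string format are assumptions made for this example, not part of the resolution criteria. It compares values exactly using rational arithmetic, so no floating-point rounding is involved.

```python
import re
from fractions import Fraction

# Assumed input format: optional minus sign, digits, optional fractional part.
DECIMAL = re.compile(r"-?[0-9]+(?:\.[0-9]+)?")

def is_exact_product(a: str, b: str, claimed: str) -> bool:
    """Return True only if claimed equals a * b exactly (hypothetical helper)."""
    if not all(DECIMAL.fullmatch(s) for s in (a, b, claimed)):
        return False  # anything outside plain decimal notation is rejected
    # Fraction parses decimal strings exactly, so the comparison has no rounding.
    return Fraction(a) * Fraction(b) == Fraction(claimed)
```

Under these assumptions, a claimed answer with trailing zeros (such as `0.020` for `0.1` times `0.2`) is treated as correct because it denotes the same value.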


By extension you would also include internal "apps"? I.e., by "native" you mean just as a result of normal LLM processing?

Right now companies tell us when they hand off to other programs, but they probably won't always do that. It may be hard to know. If only one company can do it and they deny they're farming it out, this may be hard to judge.

If an open source LLM can do it, we would be able to verify.

@IsaacKing the way it works now is:

They feed the prompt into an LLM but also ask it to identify any specific interesting subsections like:

A request for math

A request for a URL

Etc

Input: some prompt with math and a question

This is modified to also ask the LLM to call out any particular interesting subsections.

Output from LLM

Request type : math

My answer: X

If the normal code that receives this from the LLM sees that a math request is included, it extracts the math part and throws away X. Then it uses a normal program to do the math. Then it rewrites the prompt with the answer inline and resubmits it to the LLM. This time the request for calculation is gone, so the LLM just gives an answer, and that goes to the user. This is an app or a plugin.

Same deal for looking up web pages. They just get inlined in the prompt, and the prompt is rewritten and rerun.
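The flow described above can be sketched roughly as follows. This is only a toy under stated assumptions: `toy_llm` is a made-up stand-in for a real model call, the "Request type: math" label format is assumed, and the math detection handles only integer products written as `a * b`. It is not how any particular vendor implements plugins.

```python
import re
from typing import Callable

# Assumed pattern: only integer products like "12 * 12" count as math requests.
MATH = re.compile(r"([0-9]+)\s*\*\s*([0-9]+)")

def run_with_calculator(prompt: str, llm: Callable[[str], str]) -> str:
    """Hypothetical wrapper: intercept math, compute it in code, resubmit."""
    first = llm(prompt)
    if "Request type: math" not in first:
        return first  # nothing to intercept, pass the model output through
    # Throw away the model's own answer X and do the math with ordinary code.
    rewritten = MATH.sub(lambda m: str(int(m.group(1)) * int(m.group(2))), prompt)
    # Resubmit the prompt with the computed answer inlined.
    return llm(rewritten)

def toy_llm(prompt: str) -> str:
    """Made-up stand-in for a model: flags math, otherwise echoes the prompt."""
    if MATH.search(prompt):
        return "Request type: math\nMy answer: 7"
    return "Request type: none\nMy answer: " + prompt
```

Calling `run_with_calculator("What is 12 * 12?", toy_llm)` goes through the model twice: the first output is discarded, and the second call sees the prompt "What is 144?" with no calculation left in it.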

@IsaacKing okay.

Interestingly this may be how the brain works. There may be specialized regions that take over specific tasks from the main network and use special hardware which is less "conscious" than the main one.

I.e., think about how exactly to throw a ball to hit a target on a windy day. Your prompt is being intercepted by a physics calculator and the answer is fed directly to your muscles without even being exposed to your mind.

In the same way, LLMs can be a path to AGI even if they themselves don't ever have internal abilities to do many things.

@Ernie If it emerges naturally from the LLM training rather than being explicitly programmed in by humans, that'll count.