There is a lot of hype about Google's Gemini, which is rumored to exceed the capabilities of GPT-4. It will be a multimodal model.

This market will use a reasonable definition of weak artificial general intelligence (software AGI), not one where the goalposts keep being moved forward every time a new model is released. Gemini will be considered weak AGI if it performs as well as an average human in the three ways that humans communicate most:

Text: it can understand text and reply with text whose quality exceeds what 50% of humans produce in text messages, essays, emails, and other written formats. The text is on-topic, and the model understands human emotions. It is able to explain why humans consider the text important. Its text generation abilities would allow it to reason well enough to perform a job requiring a high school diploma, which is the average education level.

Images: it can understand images as well as an average human, describing what is in an image. It is able to explain the reason why humans consider the image important. It can also reply with images that are comprehensible to humans, are on-topic, and can draw images at a level that an average human can draw them.

Voice: it can understand human voices, the emotions in voices, and can differentiate between multiple human voices having a conversation as well as an average human can. It can speak by creating a voice that an average human would be able to understand as speech, and output basic vocal variety. It is able to understand why humans consider the speaker and the speech topic important. Sounding slightly robotic is fine, as the average human also has an accent that is difficult for many people to understand.

Weak AGI does not require the model to be able to self-improve or be able to train machine learning models effectively, although GPT-4 is extremely good at writing and improving models. The average human can't self-improve either.

Strong (robotic) AGI is not required for the resolution of this market, but if the model can be connected to a robot and the robot would navigate the world as well as an average human, that would result in an automatic YES.

The market will remain open until Gemini is released and it is generally available to all, with no waitlist.

RESOLUTION: Google Gemini Ultra cannot accept audio as input. While it might achieve many of the other criteria, I didn't evaluate them because this one criterion is not met. The resolution, therefore, is NO.

🏅 Top traders

| # | Name | Total profit |
|---|---|---|
| 1 | | Ṁ45 |
| 2 | | Ṁ26 |
| 3 | | Ṁ19 |
| 4 | | Ṁ17 |
| 5 | | Ṁ12 |


@SteveSokolowski "Gemini models can directly ingest audio [...] This enables the model to capture nuances that are typically lost when the audio is naively mapped to a text input" (https://storage.googleapis.com/deepmind-media/gemini/gemini_1_report.pdf).

It's possible that the Bard app or web page (now renamed to Gemini, making market resolutions trickier) as well as the service in Vertex do not expose audio capabilities or that they use another speech-to-text system for transcription. I don't live in an eligible country, so I can't verify this. However, I think that this market was referring to the capabilities of the Gemini model and not to any specific product using this model.

Nevertheless, I concede that Gemini can't output audio, at least not without feeding its text outputs into a text-to-speech system (see Figure 2 of the report).

@SteveSokolowski I don't think this should be the primary reason.

"Its text generation abilities would allow it to reason well enough to perform a job requiring a high school diploma, which is the average education level." - try giving it a few high school geometry problems that require spatial thinking. Or better yet, make it explain the solution to the problem with several simple drawings.

Image generation: Give it the elephant test: https://manifold.markets/Zardoru/no-elephant-is-the-ai-room. The images are almost certainly generated by a separate diffusion model that doesn't integrate well into the text model.

@3721126 Also, the image understanding in Bard / Gemini is not really using Gemini for now https://twitter.com/ankesh_anand/status/1755591233680916693

"It can also reply with images that are comprehensible to humans, are on-topic, and can draw images at a level that an average human can draw them."

So it should be able to pass the flower petal test, right? (https://manifold.markets/firstuserhere/will-dalle3-be-able-to-create-corre)

And count the number of petals if you show it a picture of a flower?

And it should be able to sketch, like, a blue chicken inside a cage on top of a box underneath a table, right?

@ErickBall Flower petals seem reasonable. I can do that.

I'm not sure about that chicken one. Not only is it very difficult to draw that for someone who understands the prompt, I bet that a significant percentage of humans would either read too quickly and make an error, or not understand what they are being prompted to do.

@SteveSokolowski maybe find a local 12 year old, write down that prompt for them, and offer them 5 bucks if they get it right. I bet 90%+ can do it.

@Uaaar33 How so?

GPT-4V is superintelligent already when it comes to images. Except for the cases where OpenAI purposely told it to respond "I can't respond to that," I've never seen it get a wrong answer.

I gave it an image I took along a bike trail in far Northwest Pennsylvania on July 6, and it said that the image was taken on July 10 in eastern Ohio with no further context or metadata. It explained its reasoning, down to measuring the height of the cornstalks and how the leaves on the trees were brighter green than they would have been in August. No human could have done that.

@SteveSokolowski Can you post a screenshot of that reply?

For my part, I have found a lot of instances of it lacking. For example:

"No human could have done that."

Well, we GeoGuessr lovers can and routinely do that. But I agree that this is a fairly difficult thing to do.

You guys might enjoy personal challenges like this

@SteveSokolowski It is going to have some superhuman abilities from having seen the world broadly. For instance, it is very good at identifying landmarks.

Basic object recognition is well below human. Well below bird honestly.

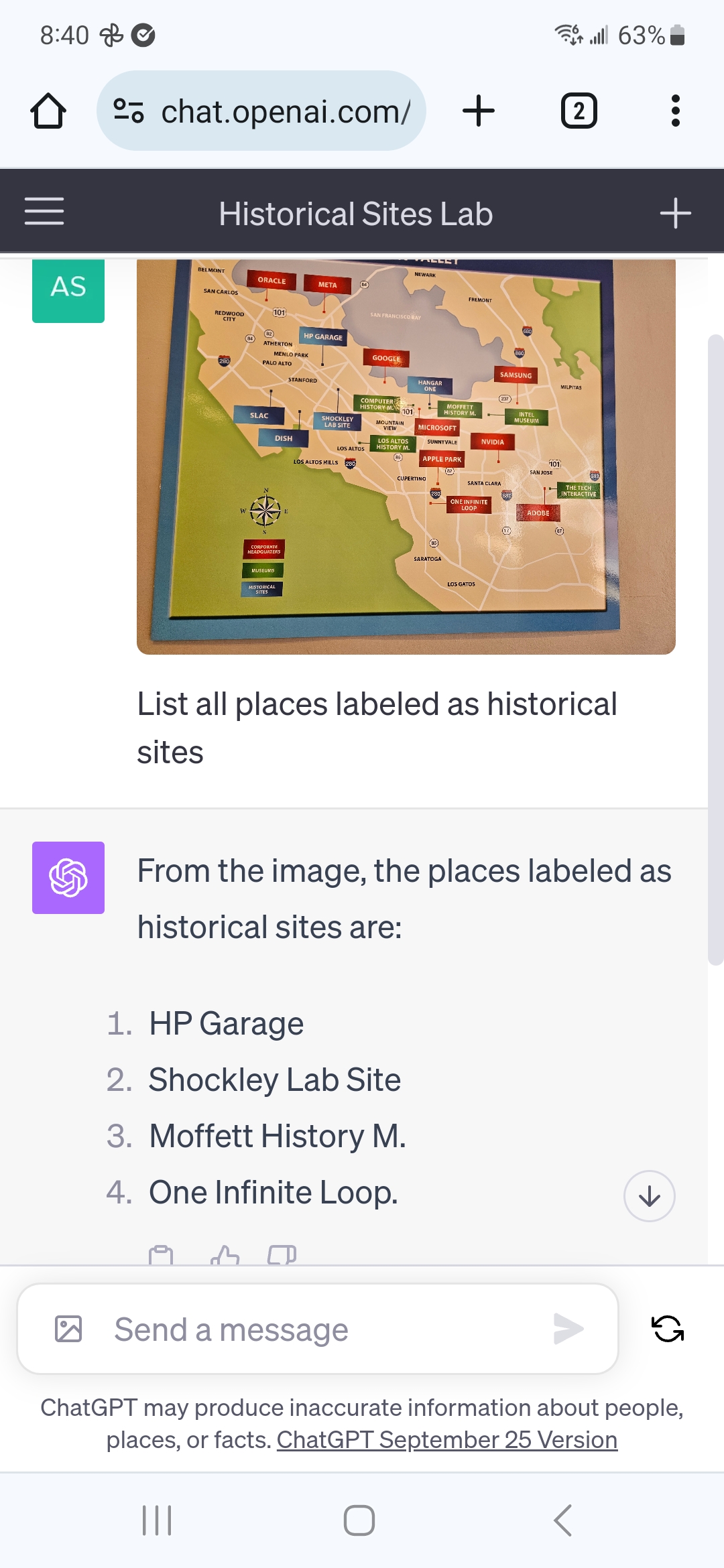

Map and table reading is also sub-human, though you need to be careful in testing to not allow it to fall back to prior knowledge.

My suspicion is that Gemini will also achieve strong AGI, because GPT-4V was shown to be able to navigate to a grocery store using only images of a street in a residential area far from grocery stores. But it, like GPT-4V, will be too expensive to operate in strong-AGI mode.

It's pretty amazing that the main issue holding back these models from changing the world is just cost now.

@SteveSokolowski Am I understanding you correctly: you suspect that Gemini (which seems slated for release this year) may achieve strong AGI? What do you take "strong AGI" to mean, and what is your credence?

@NBAP There isn't any agreed-upon definition of these terms. My impression is that many people define them as "I'll know it when I see it." Then, every time a model does something new, they point to its lack of perfection as a reason it isn't AGI.

In terms of image recognition, GPT-4 is superintelligent. The limiting factor preventing the singularity right now is electricity generation. Electricity is too expensive right now to run these models for everyone, or else there wouldn't be a 50-message cap.

So I do believe that Gemini will likely exceed "AGI" as considered by everyone on the planet in 2010. I believe that it will be able to automate most of the economy - if it were cheap to run. The focus should be shifting to power generation rather than creating stronger models at this point.

@SteveSokolowski You should make another market for this stronger proposition. If your belief is accurate, I think you could make a fortune.

@NBAP How so? Even if Gemini could control a robot, it's too expensive to run. GPT-4 costs over 3 cents per prompt; surely this new model would cost 10.

No matter how good these LLMs are, they won't be cost-competitive to act as autonomous agents with current electricity generation methods.

Plus, the model would be controlled by Google. One of the most important things I found out is that you can't run a business that is dependent upon another business, like how Wells Fargo closed my accounts and I'm going to litigation with them.

For "strong AGI" to achieve the singularity, we will need fusion energy and it will need to be open source.

@SteveSokolowski I meant a fortune in mana. Surely you could formulate your resolution criteria to capture the event in which Gemini has the capabilities of a strong AGI, even if it is prohibitively expensive to treat it as such.

@NBAP I don't think such a market would settle at a low enough probability to make a fortune. Enough people are open to the idea that AI could suddenly advance quickly. There aren't many people who are open to the idea that UFOs are real.

I already have most of my mana in a huge limit order at https://manifold.markets/Joshua/will-eliezer-yudkowsky-win-his-1500. That is the biggest moneymaking opportunity, by far, on this site. I'm much more confident that UFOs are real than that Gemini will be strong AGI, and the spread is better too.

@SteveSokolowski I think a market titled "Will Strong AGI be realized before 2024?" would settle at a very low probability. I don't know anyone credible with such a fast timeline. The closest person I can think of is David Shapiro, who recently put out a video predicting AGI within 12 months (but probably not within 6). And while I don't mean any disrespect to Shapiro, I don't think he really qualifies as the most credible source, and his reasoning is not particularly convincing.

If you have even 50% credence, I think it would be a great opportunity for you. However, if you really think that that UFO market will pay out NO, then yes, I can understand why it would make more sense for you to put your money there.

@NBAP The issue with markets is that you're not really betting on whether the event will actually happen. You're actually betting on what others think and the knowledge gap between you and them on a topic.

I think it's more likely than not that the UFO market will resolve YES. But I think that there is a knowledge gap that is causing people to not learn about the topic, most likely because there are so many fraudsters and liars who dilute actual real evidence with garbage. I think that the NDAA will cut through the noise and bring the odds down closer to reality, which I think right now should be 75%. You can see this by looking at how many "will government officials acknowledge UFOs" markets have been created, despite Barack Obama having already said that UFOs exist and that the military doesn't know what they are.

On the strong AGI market, I don't think there's a knowledge gap like with the UFOs. Unlike the UFO market, nobody is actively attempting to discredit people who say that AI is advancing quickly, so a lot more people are reading about the topic and they are reading true information. I think that whatever that market settles at, I won't have any advantage in trading compared to the average bettor in that market.

It's just a matter of expected value; I think the expected value based on the knowledge gap is higher.
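To make the expected-value comparison concrete, here is a rough sketch of the arithmetic. It assumes a simplified binary market where shares are bought at a fixed price and pay out 1 if the bet wins. Real trades on Manifold move the price, which this ignores. The function name `expected_return` and all of the probabilities below are hypothetical illustrations, not figures from either market.

```python
def expected_return(belief: float, price: float, side: str = "YES") -> float:
    """Expected profit per unit staked on a binary market.

    Simplifying assumption: shares are bought at a fixed `price` and
    pay out 1 if the chosen side wins (no fees, no price impact).
    `belief` is the trader's own probability that the market resolves YES.
    """
    if not 0 < price < 1:
        raise ValueError("price must be strictly between 0 and 1")
    if not 0 <= belief <= 1:
        raise ValueError("belief must be between 0 and 1")
    if side == "YES":
        # Each unit staked buys 1/price YES shares, each worth `belief` on average.
        return belief / price - 1
    if side == "NO":
        # Each unit staked buys 1/(1 - price) NO shares.
        return (1 - belief) / (1 - price) - 1
    raise ValueError("side must be 'YES' or 'NO'")


# Hypothetical numbers: a large gap between belief and market price...
large_gap = expected_return(belief=0.75, price=0.10)
# ...versus a market that already sits at the trader's own estimate.
no_gap = expected_return(belief=0.30, price=0.30)

print(f"large knowledge gap: {large_gap:+.2f} per unit staked")
print(f"no knowledge gap:    {no_gap:+.2f} per unit staked")
```

Under these assumptions the expected return depends only on the gap between the trader's belief and the market price, which is why a market that already matches your estimate offers no edge, however confident you are about the outcome.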

@SteveSokolowski Yeah, I agree that the crux of the issue is whether there's more value to be squeezed out of the UFO market or the AGI market, and from your perspective, I can see that it would seem like the former. But when you say:

"On the strong AGI market, I don't think there's a knowledge gap like with the UFOs."

Perhaps not to the same extent as the UFO market, but there must be some knowledge gap in the case of the AGI proposition, no? Otherwise, how would you explain the difference between your relatively high credence and the low credence of the average person?