Free Access Conditions: The bet resolves YES if OpenAI offers GPT-4 (including variants like "GPT-4-lite" or similar) for free to ChatGPT users in 2024 under any of these conditions:

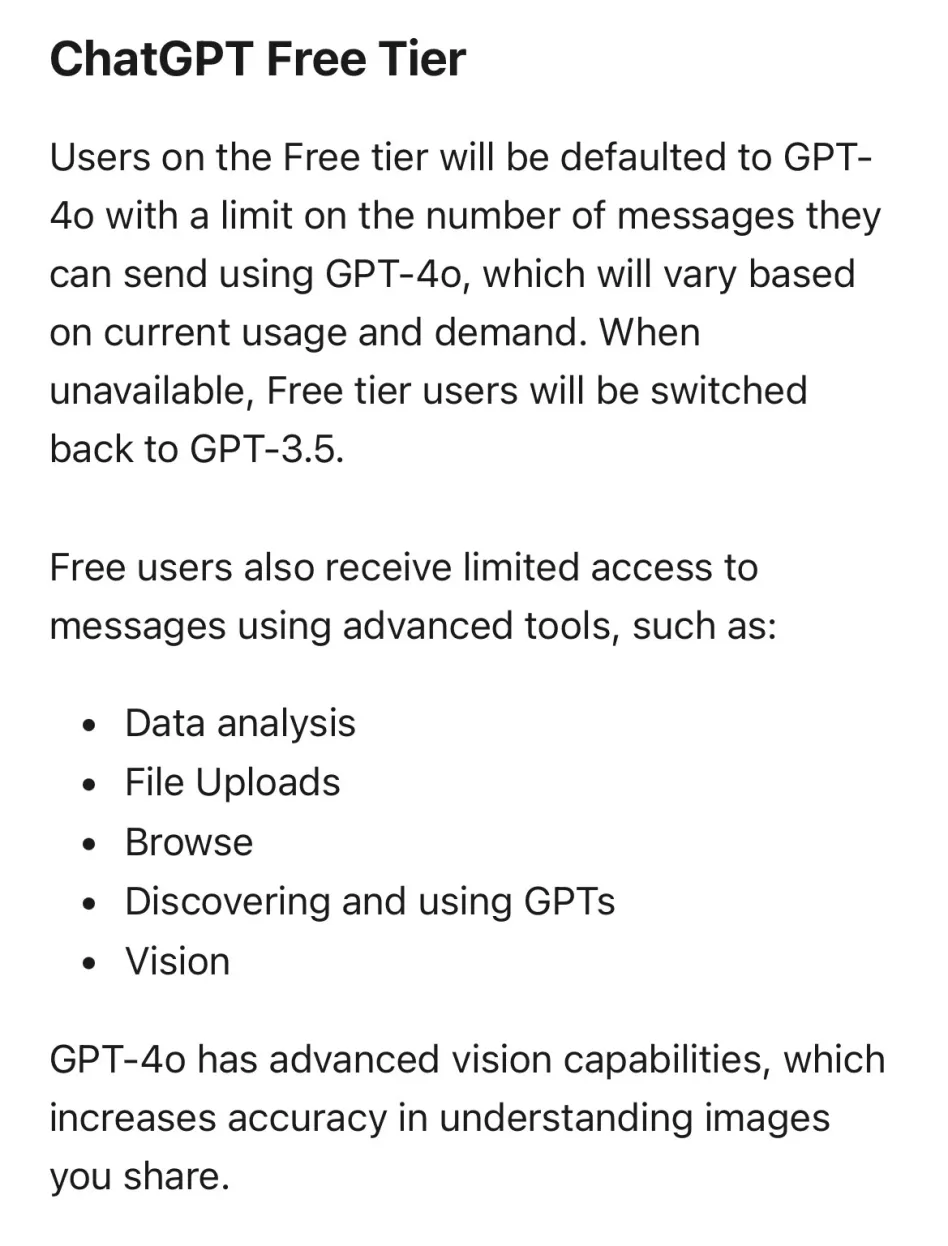

- Permanent free access with limited usage (e.g., capped messages).
- Limited trial access totaling four weeks (not necessarily consecutive).
- Access through custom GPTs using GPT-4 on the GPT-Store.

Exclusion Criteria

Trials requiring credit card information and leading to automatic charges after the trial do not count towards this bet's resolution.

Access to GPT-4 through other platforms like Bing does not count.

Edge Cases

If a trial that doesn't lead to automatic charges starts in December 2024 and ends in January 2025, it counts towards resolving this market as Yes.

If OpenAI requires a payment method for verification and doesn't apply automatic charges, then this would count toward resolving this question as Yes. However, this is highly unlikely since they use phone numbers for verification.

🏅 Top traders

| # | Trader | Total profit |
|---|---|---|
| 1 | | Ṁ5,570 |
| 2 | | Ṁ4,463 |
| 3 | | Ṁ3,217 |
| 4 | | Ṁ1,040 |
| 5 | | Ṁ385 |


@Soli I don't think you did the wrong thing but fwiw I still don't have GPT-4o access in my free account. I wonder what % of users are actually able to access it.

@Soli as an academic point, you wouldn't be resolving this YES if not for the clarification you made below that a better/upgraded model than GPT-4, such as GPT-4.5, being available for free would count, right?

@chrisjbillington the description also had this

> The bet resolves YES if OpenAI offers GPT-4 (including variants like "GPT-4-lite" or similar) for free to ChatGPT users in 2024

and the model is called gpt-4o, so I think it would have resolved Yes either way. What do you think?

@Soli Maybe, but then it would be inconsistent with how it sounds like you want to resolve one of your other markets where you want to count it as being the next model after GPT-4, despite saying "Variations of GPT-4 won't count".

Seems like it can't be a variation of GPT-4 and the next model after GPT-4 at the same time, so if you're going to be consistent I think you have to lean on the "next model after counts too" clarification here.

@chrisjbillington good point, but a model can be based on gpt-4 and at the same time be considered OpenAI's next-generation model, which is what gpt-4o is imo.

This is problematic for other markets but not this one.

@Soli Neither market says "based on GPT-4"; the wording is "variants" or "variations" of GPT-4 in both cases.

https://manifold.markets/market/what-will-be-true-of-openais-next-m

> Variations of GPT-4 won't count; only major new models will qualify.

Whereas this market says:

> GPT-4 (including variants like "GPT-4-lite" or similar)

One market says it includes "variants" of GPT-4, and the other market says it excludes "variations" of GPT-4.

That other market is the only one I've seen you actually comment an opinion on, so perhaps you were thinking I was referring to another market, but I don't know yet what you think about the others.

If you hadn't specifically made the "GPT-4.5 counts" clarification for this market, you would not be able to simultaneously say the model counts for this market as a "variant" of GPT-4, whilst claiming it counts for the other market as not a "variation" of GPT-4. That would be a contradiction.

Of course you could argue that this market says what it includes, it doesn't say what it excludes. And in any case it's not relevant because you did clarify in comments GPT-4.5 would count. But I hope you can see that without this clarification, and relying only on what this market said it would include, your interpretation would be a contradiction.

It doesn't matter, but it bothers me a little that there's the appearance of your natural inclination being to round your interpretation of what the model is in different directions depending on which market you're thinking about. It's an ambiguous situation to be sure, but the resolution of the ambiguity shouldn't depend on what market you're looking at. Either it's a variant/variation of GPT-4 or it's not.

@chrisjbillington I agree 100% with being consistent across all markets. A model can be a variation of gpt-4 and, simultaneously, OpenAI's next-generation model. This is problematic for my other markets since I did not expect this to be possible, but I don't see any problems for this market.

Edit: I appreciate you taking the time to write this comment and pointing out important points. I will make sure to be consistent across my resolutions.

I am confused why this is so low. We are in April and Anthropic is already offering a GPT-4 class model (Sonnet) for free and they are worse-funded. Do the no-voters believe that OpenAI is going to cede the chatbot market? This doesn't make sense from a business standpoint and it is at odds with Altman's recent statements on their mission:

> We don’t run ads on our free version. We don’t monetize it in other ways. We just say it’s part of our mission. We want to put increasingly powerful tools in the hands of people for free and get them to use them. I think that kind of open is really important to our mission. I think if you give people great tools and teach them to use them or don’t even teach them, they’ll figure it out, and let them go build an incredible future for each other with that, that’s a big deal. So if we can keep putting free or low cost or free and low cost powerful AI tools out in the world, I think that’s a huge deal for how we fulfill the mission.

https://lexfridman.com/sam-altman-2-transcript/

I would try to buy this up to 95% if the question were "Will a model at least as good as GPT-4 be available to ChatGPT free users in 2024". But! I put some probability (10-15%?) that they go straight to GPT-5 and tier it the same way Anthropic did with Claude.

@WillSorenson For me this market is more about naming convention than performance. If OpenAI is insistent that the X in gpt-X has to be mapped to parameter count, then I am still biased to vote no, as I find it unlikely that they would be able to cost-effectively serve a near-1T-parameter model to the number of users they currently have.

@GabeGarboden I think it should still resolve Yes, but I would love to hear @chrisjbillington's or @DavidBolin's opinion.

> The bet resolves YES if OpenAI offers GPT-4 (including variants like "GPT-4-lite" or similar) for free to ChatGPT users in 2024

@Soli I wouldn't object. 4.5 (if it happens) being free is a high bar still, and seems close enough.

@Soli His point is that if Microsoft is *already* doing it, everyone else likely will by the end of the year.

@KwameOsei I don't think so. Bing has much lower usage than OpenAI. They might be burning money on it, but not enough for it to matter. Not everyone can do that.

@chrisjbillington I mean, Microsoft is a major investor in OpenAI… technically it’s Microsoft’s money to burn as well.

@esusatyo It doesn't invalidate it. Microsoft is paying for it, and it just shows how rich they are.