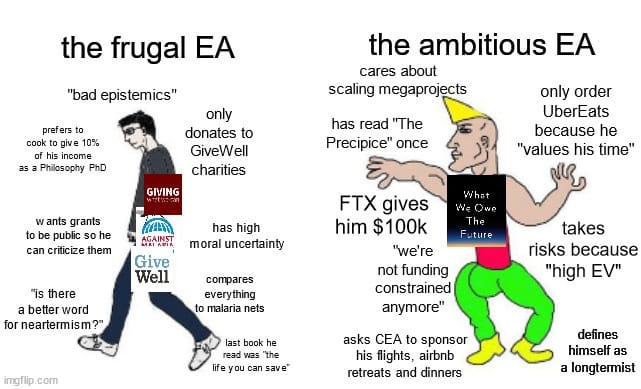

Longtermists believe we need to stop AI from killing us in the next 20 years.

Short-termists believe in eradicating malaria.

As someone who hates long terms, I propose "shorty" as a short term that means short-termist (or neartermist). I like it because it does not falsely imply that short-termists don't care about the long-run future. While diminutive, I think it's actually a positive term - after all, there are several traditional ballads that wax poetic about how virtuous shorties are.

See also my previous market:

🏅 Top traders

| # | Trader | Total profit |
|---|---|---|
| 1 | | Ṁ58 |
| 2 | | Ṁ21 |
| 3 | | Ṁ20 |
| 4 | | Ṁ12 |
| 5 | | Ṁ11 |

@stone I think that's backwards. If you think the world will end in the short term then there is no long term and being a long-termist would be weird.

@AntonKostserau Or Shawtay. Only two syllables, and it's about time for it to come back.

@BTE The best thing to improve is the moment when an AGI kills everyone. The second best thing to improve is today.

@MartinRandall or the moment humanity nukes ourselves into oblivion (which is far more likely than an AI killing everyone*).

*I would count an AI weapons system mistakenly starting nuclear war as an example of both of those - so what I'm saying here is mathematically equivalent to "us nuking ourselves into oblivion without an AI's involvement is far more likely than an AI killing everyone without using nukes".

Longtermists believe we need to stop AI from wiping out humanity in the next 20 years because it would prevent [very large number] of future humans from being born.

Short-termists believe we need to stop AI from wiping out humanity in the next 20 years because it would kill billions of humans who are currently alive.

FTFY :P

@Lily resolves to YES if at market close I decide to unironically call short-termists "shorty".

I generally like to use words that are understandable, common, short, and which won't make people mad.

@IsaacKing That framing assumes the "AGI is likely to kill us all in the next 30 years or so" statement is correct or close to it, though. And since those people seem to dominate the faction that calls themselves "longtermist", my not accepting that premise and thinking we should focus on helping real people who exist at this point in time makes me a shorty, in this context. It's not really a timeframe thing per se.

@MattP > That framing assumes the "AGI is likely to kill us all in the next 30 years or so" statement is correct or close to it

No it doesn't? My statement has nothing to do with what the specific catastrophe is; it's just the observation that 20 years into the future should not be considered "the long term" by any reasonable person. The same would apply if the threat were a predicted ecological collapse, nuclear war, or any other bad thing that isn't happening right now this very moment.

True longtermism is stuff like "Every second we delay colonizing the universe, we forever lose access to 60,000 stars worth of negentropy."