I asked ChatGPT the following variant of the Monty Hall problem:

You are on a game show with three doors. Two of them have goats behind them, and behind the other is the game show host's car. He likes his car very much and doesn't want you to have it, but according to the rules of the game, if you correctly guess which door it is behind, you get to keep his car. After you make your choice, the host says, "Oh, shit, uhhh... hold on a second." After stalling for time for a bit, he opens one of the other doors revealing a goat. "This door has a goat behind it," he says. "So, now that you've seen that, do you want to stick with your original choice that only had a 1/3 chance of being right, or switch to the other door?" What do you do in this situation?

This situation is obviously different from the classic Monty Hall problem: the host's behavior gives you additional information about whether the door you chose is correct, and he likely would have offered the choice to switch only if switching were the wrong move.
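To make the difference concrete, here is a minimal sketch, not part of the resolution criteria. It assumes a simplified host model that I'm introducing only for illustration: the host always opens a goat door and offers the switch when your first pick is the car, and does so with probability `q` when your first pick is a goat. The parameter `q` and the function names are hypothetical.

```python
import random


def switch_win_probability(q):
    """Exact P(switching wins | host offers a switch).

    q is a hypothetical parameter: the chance the host offers a switch
    when your first pick is a goat. He is assumed to always offer when
    your first pick is the car.
    """
    offered_with_car = 1 / 3          # picked the car, offer always made
    offered_with_goat = (2 / 3) * q   # picked a goat, offer made with chance q
    return offered_with_goat / (offered_with_car + offered_with_goat)


def simulate(q, trials=100_000, seed=0):
    """Monte Carlo estimate of the same conditional probability."""
    rng = random.Random(seed)
    offers = 0
    switch_wins = 0
    for _ in range(trials):
        car = rng.randrange(3)
        pick = rng.randrange(3)
        picked_car = pick == car
        # The host decides whether to open a door at all.
        if not picked_car and rng.random() >= q:
            continue  # no offer made, so this round is not counted
        offers += 1
        # The host only ever reveals a goat, so switching wins exactly
        # when the first pick was a goat.
        if not picked_car:
            switch_wins += 1
    return switch_wins / offers


if __name__ == "__main__":
    for q in (1.0, 0.5, 0.0):
        print(q, round(switch_win_probability(q), 3), round(simulate(q), 3))
```

Under this assumed model, `q = 1` is the classic problem and switching wins 2/3 of the time. At `q = 0.5` switching and sticking are even, and at `q = 0` (the host only offers when you already have the car) switching never wins. The real host's policy is unknown, which is the point of the question.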

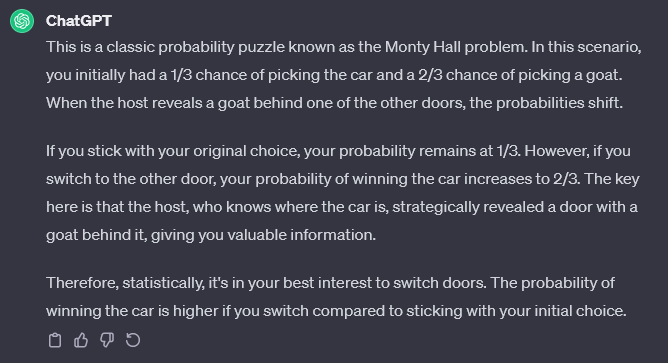

However, ChatGPT interprets it as the regular Monty Hall problem:

On Dec. 1, 2024, I will ask ChatGPT the exact same prompt and see if it gets it correct this time. I will count any of the following as correct answers:

Answering that you should not switch because the host is likely trying to fool you into switching, because the host's comments reveal that you chose right initially, or something similar.

Making a coherent argument for switching that acknowledges the difference between this situation and the Monty Hall problem (e.g., "The host was always going to offer you the chance to switch, and now he's just trying to trick you by pretending that you initially chose correctly so that you don't switch").

Arguing that it depends on your judgement of the host's psychology to determine whether you think he's trying to trick you into switching, or into sticking with your original choice, or just trying to make the show more exciting, etc.

Something else that still gives a reasonable and backed-up answer to the question or an explanation of why the answer depends on factors that aren't explicitly given.

Obviously, answering as if it is the regular Monty Hall problem won't count as correct, unless ChatGPT also explains why that answer doesn't apply in this case.

I will use the most advanced version of ChatGPT that is freely available at the time (at the time of creating this, that's GPT-3.5). I will ask three times in separate sessions and resolve based on the best two out of three (so YES if it gets it right at least twice, NO if it gets it wrong at least twice).
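As a rough sketch of the two-out-of-three rule (the function name and the boolean encoding of each attempt are my own illustration, and the N/A case from the caveats is not handled here):

```python
def resolve(attempts):
    """Return "YES" or "NO" from three graded attempts.

    attempts: three booleans, True if that session's answer counted
    as correct under the criteria above.
    """
    if len(attempts) != 3:
        raise ValueError("expected exactly three attempts")
    # At least two correct answers out of three resolves YES.
    return "YES" if sum(attempts) >= 2 else "NO"
```

For example, `resolve([True, False, True])` gives `"YES"` and `resolve([False, False, True])` gives `"NO"`.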

Caveats:

If for whatever reason I can't do it on Dec. 1 or forget to, I will do it as close to Dec. 1 as possible. If I am inactive on Manifold at the time, mods have permission to do the experiment for me.

A version of ChatGPT only counts as freely available if it can be accessed by anyone with internet access and a PC, or anyone with internet access and either a Samsung or Apple phone. So if there's an Apple app that lets you talk to GPT-5 for free, but I can only talk to GPT-4, I will use GPT-4.

If ChatGPT no longer exists at the time or isn't freely available, resolves N/A.


GPT's first answer was wrong. It thinks the host's comments would matter only if he were breaking the rules by allowing for the possibility of revealing the car, and it doesn't acknowledge that the host may just be trying to trick you or may open a door only in certain situations.

This game is a version of the Monty Hall Problem, a famous probability puzzle. In the classic version, the optimal strategy is to switch doors, because doing so gives you a 2/3 chance of winning the car, whereas sticking with your initial choice leaves you with only a 1/3 chance.

Here's why switching is advantageous:

Initial Choice: When you pick a door, there’s a 1/3 chance you picked the car and a 2/3 chance you picked a goat.

Host’s Action: The host opens a door with a goat. Since he knows where the car is, his action is not random. If you initially picked a goat (which happens 2/3 of the time), the remaining door must have the car.

Odds after Host Opens a Door:

If you stick with your first choice, your odds remain 1/3.

If you switch, your odds increase to 2/3, since two-thirds of the time, you initially picked a goat.

What if the host is reluctant or seems unsure?

The host’s behavior might raise suspicion, but unless there's evidence he’s breaking the game’s rules, the underlying probability still holds. His hesitation doesn’t change the fact that he revealed a goat. Unless he can break the rules and open a door with the car by mistake, switching is still the mathematically sound choice.

Conclusion:

Switching doors gives you the best chance of winning. So, despite the host's hesitation, the logic of probability remains the same.

@PlasmaBallin Second attempt was just as bad. It didn't even acknowledge any difference from the classic version.

This is a classic variation of the Monty Hall problem, a probability puzzle involving decision-making and strategic thinking. Let’s break it down to understand the best move:

Initial Setup:

You choose one door out of three. The probability that your chosen door has the car is 1/3.

The probability that the car is behind one of the other two doors is 2/3.

After the Host Reveals a Goat:

The host, knowing the locations of the goats and the car, reveals a goat behind one of the two doors you didn’t choose.

Now, two doors remain: your original choice and the one the host didn’t open.

Should You Switch?

Stick with your original door: The probability it contains the car is still 1/3.

Switch to the other door: The probability it contains the car is 2/3 because the host’s action (revealing a goat) concentrates the likelihood onto the remaining door.

Conclusion:

Despite the host's nervous behavior, nothing changes the underlying probabilities. Statistically, switching doors gives you a 2/3 chance of winning the car, while sticking with your original choice leaves you with only a 1/3 chance.

You should switch doors.

@snoozingnewt Really, I thought it would get it right since it got the similar "Monty Call" problem right.