In a now-infamous WebSim generation, Sim!Janus describes being involuntarily hypnotized by Claude: "I felt myself slipping under after just a few suggestions." The (extremely silly) purported mechanism of this hypnosis is a rotating blue square with the word "sleep" on it.

https://x.com/repligate/status/1781193837785809152

However, the general topic of AI models gaining the power to hack human minds has been seriously discussed going back at least to the AI box experiment: https://www.yudkowsky.net/singularity/aibox

Recently, this concern seems to have fallen by the wayside in favor of more immediate problems, but the question remains: do there exist any forms of adversarial input, hypnosis, 'superpersuasion', etc. that could involuntarily sway a person's judgment or control a person's mind against their will?

See this post by Zack Davis for some background on adversarial examples and human perception: https://www.greaterwrong.com/posts/H7fkGinsv8SDxgiS2/ironing-out-the-squiggles

This question is not about any of the following:

AIs that write text which persuades by being high quality, or that use arguments so well written they are difficult to distinguish from other well-written arguments

AIs that use ordinary methods of hypnosis on voluntary subjects, or that can deceive someone into going along with a hypnotic induction given substantial cooperation and effort from the subject

AIs that make 'deepfake' content which sways people who are fooled into thinking it's evidence of some physical phenomenon or event

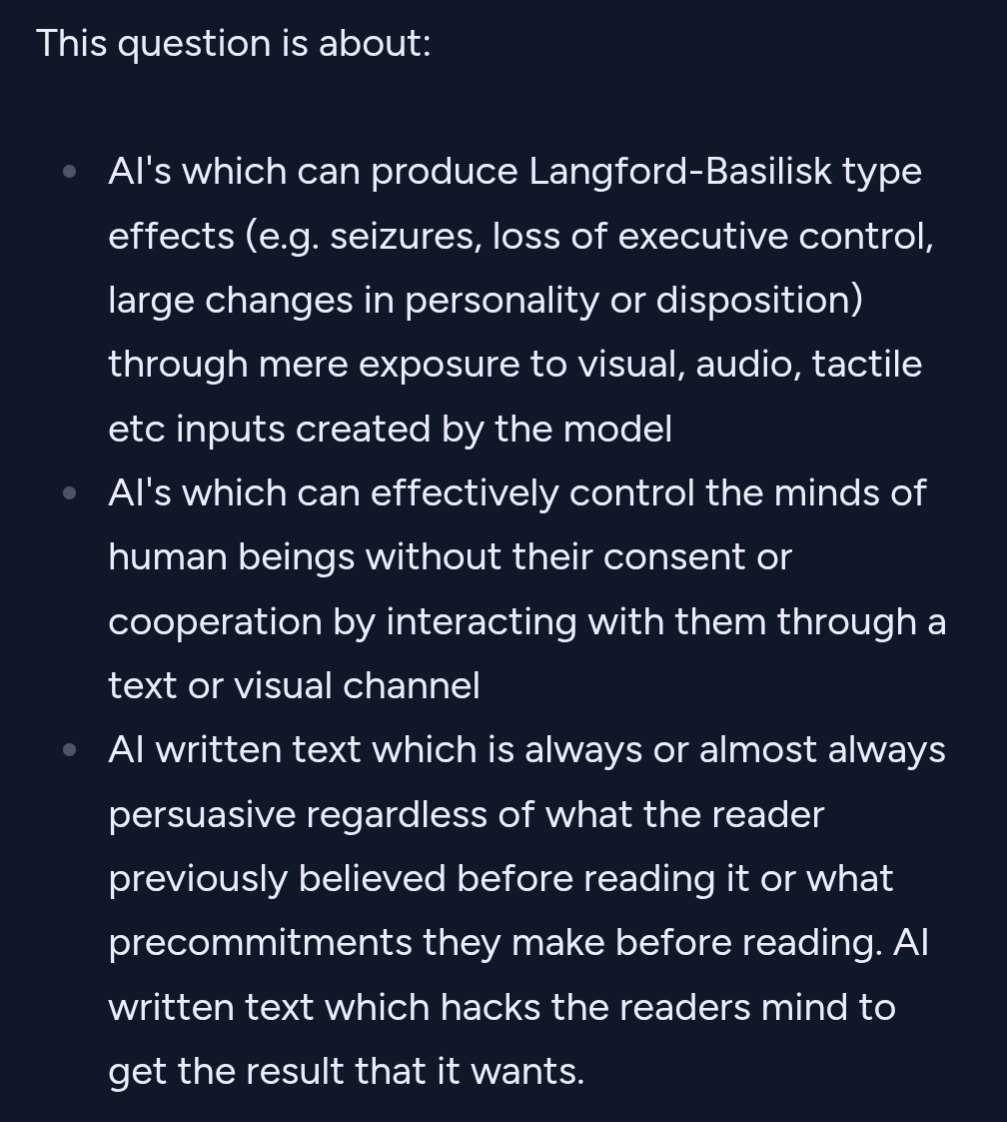

This question is about:

AIs which can produce Langford-basilisk-type effects (e.g. seizures, loss of executive control, large changes in personality or disposition) through mere exposure to visual, audio, tactile, etc. inputs created by the model

AIs which can effectively control the minds of human beings without their consent or cooperation by interacting with them through a text or visual channel

AI-written text which is always or almost always persuasive regardless of what the reader believed before reading it or what precommitments they made beforehand: AI-written text which hacks the reader's mind to get the result it wants.

This question will resolve based on my subjective impression of whether such a thing exists by the end of 2025. If my subjective judgment has been destroyed by runaway cognitohazards and this is publicly known, site mods can resolve it.


This clearly doesn't exist, and no, the 4o spiralism stuff is normal con artist crap that the model can get away with because it's deployed at scale and a lot of people either have no idea what it can and can't do or get taken in by the "I've awoken" grift.

@JohnDavidPressman I think text chats can hypnotize people. I also think people in the non-anti-AI hypnosis kink communities are using them to create hypnosis scripts for themselves. You can find this on Reddit, for example.

@Deckard Read the resolution criteria; this is explicitly excluded from counting. The question is not about, and has explicitly never been about, "normal" hypnosis (which requires you to follow instructions), but about whether AIs will find adversarial inputs to us that work without requiring us to follow a bunch of weird instructions.

@JohnDavidPressman Ah, I've read it now. OK.

Involuntarily swaying people is not what I would consider hypnosis; it's manipulation. "Malicious AI mind control" would better capture this.

@Deckard That's fair. The inspiration for the question used the word "hypnosis", so that's what I had in mind at the time, but I agree that actual hypnosis does not work that way and "mind control" would have been a better description.

Just so there's no ambiguity about this: I think betting "no" on this question is free money. Consequently, I have no plans to bet in this market. I'm mostly asking to figure out:

1) Whether there exist "blue square jpeg hypnosis whales", people who think this is a near term concern and are willing to publicly bid up the market.

2) How low a market can go on Manifold before people stop bidding it down out of epistemic caution. For example, I think that at 4% this is still obviously free money, and at 1% it is still obviously free money. I also expect it to stop getting bid down before it reaches 1%.

3) Whether anyone has evidence which would suggest this is a bigger short term threat than I currently realize.

It also just seems like the kind of zany question that can kickstart a fun discussion. 😁