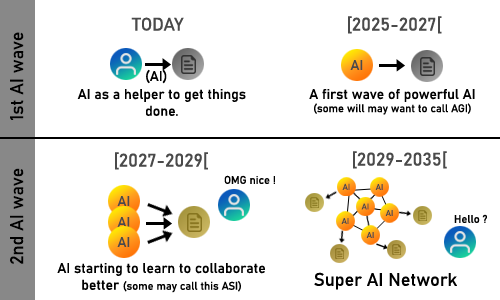

Are you concerned about the potential implications of what I refer to as the '2nd AI Wave'?

I'm fairly confident that the first AI wave will be manageable and largely a positive experience for humanity. However, my concern lies with the emergence of a 'Super AI Network' that may not operate as initially intended. Moreover, I fear the possibility of experiencing catastrophic feedback loop events that we may be unable to predict.

I worry that preventing the second wave of AI, following the successful implementation of the first, may be challenging. This is due to the potential appeal for companies and open-source communities, driven by the success and profitability of the first wave, pushing us into the next stage.

Additionally, once the capability to generate 'super-human productions' is achieved, I find it difficult to envision humanity reverting easily to older technologies.

In this scenario, AGI or ASI might not pose a direct threat and could remain within manageable limits. However, the real concern lies in the insatiable human desire for more powerful systems, particularly the possibility of incorporating them into a massive AI network, whether consciously or not.

The question then becomes: Can we control or prevent the emergence of super AI networks?


In my opinion there will be a vast capabilities overhang, because some key capabilities (I will specifically not list them here) will be missing or unsolved in contemporary ML systems,

meanwhile these "incomplete" systems will still be vastly profitable to deploy.

Significant percentages of the world economy will be allocated to infrastructure for this new profitable industry.

Then a breakthrough occurs, and updated models/systems will suddenly be running, probably in 10^7-10^9 instances.

That moment is when "control" will be taken out of the hands of humankind.

Though in reality this process started decades ago.

@JosefMitchell Oh yes, I agree with you. AI will always show spectacular weakness on some tasks compared to us (I think it's like comparing dogs and humans: dogs are less versatile, but they have crazy good abilities in some areas that we cannot match). I also agree that everything we see today is part of a larger process, and I guess we are living through something much more continuous than many probably think.

@Thomas42 haha you're probably right, but I suspect it's clearer to call them this here, as it highlights the threshold moment I'm trying to illustrate.

I picked "Super Confident / Unconcerned," but in retrospect, I am not sure what the question is asking about. I am personally unconcerned about my own "career well-being" and think I will be fine in terms of technological disruption impacting my career, as I tend to have figured out how to stay ahead of the curves over the decade, so that was more my angle. However, upon further reflection, I'm not sure if you are asking about something less selfish, like mass unemployment. I would still put "Unconcerned," but I would probably put "lightly Unconcerned," because I don't think it's a guarantee that it will happen, and it's certainly not a guarantee that it will happen shockingly fast, which would give society less time to adapt. I think humans have an innate capacity to fend for themselves, and if anything becomes actually threatening, to the point where there is a shocking hit to food supplies or quality of life in a given country, people will stop it through laws.

Another consideration: at least in the United States, the population is getting older, which means more risk-averse. I am sure people thought Japan was going to be the one that came up with AGI back in the 1980s, but over time it became older, more risk-averse, and more consensus-driven. As I understand it, they now unironically still use fax machines and floppy disks, CDs account for 70% of music sales, and newspapers are still a big thing. Mind you, none of this is a bad thing. I started using CDs again recently after about 15 or so years just to get away from smartphones a bit more.

Automation -> Wealth Consolidation -> Life is More Expensive on Average -> Lower Birth Rates -> Population Grows Older on Average -> More Risk Aversion -> Automation Slows

@PatrickDelaney I'm talking about global incidents that could occur after the '2nd AI wave', due to some emergent, unforeseen behavior of the newly created object (a bit like the emergent properties we see in LLMs today). Those incidents could potentially have an impact on the whole world, as I suspect AI can be deployed at a very fast pace (each technological revolution has historically been deployed faster than the last). We often say that a group of people can behave more stupidly than independent individuals, and I suspect that could be true for AI too. There could be consequences in many areas of human society, as it's hard to tell where AI will be most useful in the future.

We as humans often underestimate how fast we can change our habits and switch to new ones when it's cheaper and easier for us, and I think AI systems will be much cheaper and far easier to use.

I personally think IT in general is in its infancy and that AI systems are desirable, as they are the logical continuation of our current IT technology.

People using, still relying on, or enjoying old technologies is certainly common, but I guess this is either because those people find happiness in a certain lifestyle or habit, or because the cost of switching to new methods is still too high (I would count being old as an additional cost here). In the first case, I think this is beautiful and I respect that. In the second, I think AI will play a role sooner or later.

@AlQuinn It just doesn't seem very well thought out to perform such an expensive action that will not even make a 0.1% difference to the issue.

I'd sooner expect that kind of person to rob a bank.

@AlQuinn No, it totally is your idea. Conflating well-enforced international treaties with terrorism is nonsensical.